Introduction

Infrared (IR) technology has undergone a remarkable transformation over the last century. Rooted in 19th and 20th century developments in photometry, colorimetry, and radiometry and then driven by the military’s ongoing desire to “own the night,” IR technology now plays a critical role in U.S. defense capability, as it provides our combat personnel with the “eyes” to see and target our adversaries both in daylight and darkness. As with any historical development, the path leading up to today’s IR technology holds important lessons for the path leading toward tomorrow’s. Perhaps even more important are the transformative capabilities and trends that are currently emerging and that will shed light on what will likely be even more transformative capabilities in the future. Accordingly, this article provides a brief history of IR sensors and systems, as well as current trends and future projections for this important technology.

Historical Perspective

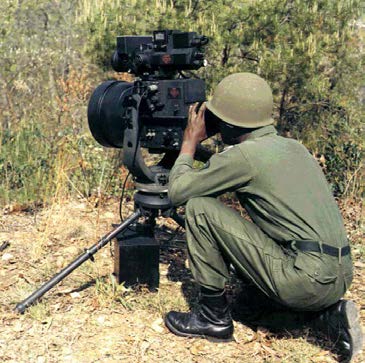

World War II was the motivation for the development of the first practical, though crude, IR imaging devices [1]. These devices fell into two categories: (1) viewers that used ambient light-amplifying image converter tubes, and (2) devices that we refer to today as IR Search and Track (IRST) systems. Interestingly, while precision-guided munitions were developed and used in World War II, IR variants were not developed in time to be used in combat. However, image converter tubes were used in combat by the United States, Germany, and Russia and therefore were of greater interest. These converter tubes used a photoemissive detector that could respond only out to about 1.3 µm [2] and so could not see object self-emissions. In principle, they could see and amplify ambient light at night, such as starlight. In practice, however, their sensitivity was so poor that they almost always had to be paired with a covert source of artificial IR illumination, such as a searchlight, with a visible light blocking filter. Their main application was for rifle/sniper scopes, as pictured in Figure 1.

Figure 1: 1940s U.S. Army Sniper Scope (U.S. Army Photo).

These image converter tubes were the forerunner of what are today called image intensifiers. Modern image intensifiers are sufficiently compact to be used in goggles and are sensitive enough to see reflected ambient light without the need for artificial illumination.

The development of IR imaging devices has always depended on the availability of suitable detectors. From that standpoint, the Germans developed a type of detector that had the most importance for modern IR systems. In 1933, Edgar Kutzscher [3] at the University of Berlin discovered that lead sulfide (PbS) could be made into a photoconducting detector. Lead sulfide photoconductors had the advantage of being able to respond to longer IR wavelengths (e.g., out to 2.5 µm) so they could detect self-emissions from hot objects, such as engine exhaust pipes and ship stacks. When coupled with optics, scanners, and a cathode ray tube for display, these photoconductors were made into IRST systems, although they were not called by that name at the time. Prototypes were tested on German night fighter aircraft for the detection and tracking of Allied bombers as well as on shore to detect ships in the English Channel. The detectors were sufficiently mature to be transitioned to relatively high-volume production, but the war ended before systems using them could be manufactured.

After the war, Kutzscher immigrated to the United States and assisted with the transfer of PbS technology. This transfer ushered in the beginning of a slow but ultimately productive domestic detector development process. Greater sensitivity was needed, and this sensitivity could most directly be provided by developing detectors that responded in the 8–12-µm long-wavelength IR (LWIR) band. The LWIR band is a highly desired operating band because it provides the most signal for a given difference in temperature between an object and its background (e.g., when imaging terrestrial objects). Unfortunately, that band is also one of the most difficult for detectors to work in because long-wavelength photons have lower energy than short-wavelength photons. So detecting LWIR photons also means detecting other low-energy products, such as latent heat-generated dark current and its associated noise. The first practical LWIR detector material discovered was mercury-doped germanium (Hg:Ge), but it had to be cooled to 30 K with large, heavy, and expensive multistage cryocoolers to mitigate the dark current. Nevertheless, systems equipped with Hg:Ge detectors demonstrated a significant increase in sensitivity.
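The link between wavelength, photon energy, and cooling can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: it computes the photon energy E = hc/λ and compares it with the thermal energy kT at two temperatures. The function names and the choice of 300 K and 80 K as comparison points are assumptions made for this example, and the ratio E/kT is only a rough indicator of how easily heat in the material can mimic a photon; actual dark current depends on the detector material and design.

```python
# Physical constants (exact SI values).
PLANCK_J_S = 6.62607015e-34         # Planck constant, J*s
LIGHT_SPEED_M_S = 2.99792458e8      # speed of light, m/s
ELECTRON_VOLT_J = 1.602176634e-19   # joules per electronvolt
BOLTZMANN_EV_K = 8.617333262e-5     # Boltzmann constant, eV/K


def photon_energy_ev(wavelength_um: float) -> float:
    """Photon energy E = h*c/lambda in eV for a wavelength in micrometres."""
    wavelength_m = wavelength_um * 1e-6
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / ELECTRON_VOLT_J


def thermal_energy_ev(temperature_k: float) -> float:
    """Characteristic thermal energy k*T in eV."""
    return BOLTZMANN_EV_K * temperature_k


if __name__ == "__main__":
    # Wavelengths chosen for illustration: the PbS cutoff quoted in the text,
    # a mid-band MWIR value, and a mid-band LWIR value.
    for label, wavelength_um in [("PbS cutoff", 2.5), ("MWIR", 4.0), ("LWIR", 10.0)]:
        energy = photon_energy_ev(wavelength_um)
        print(
            f"{label:10s} {wavelength_um:5.1f} um  E = {energy:.3f} eV  "
            f"E/kT at 300 K = {energy / thermal_energy_ev(300.0):5.1f}  "
            f"E/kT at 80 K = {energy / thermal_energy_ev(80.0):5.1f}"
        )
```

A 10-µm photon carries only about a quarter of the energy of a 2.5-µm photon, which is why an LWIR detector at room temperature struggles to separate photon events from thermally generated carriers, and why cooling widens that margin.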

The technology lingered until 1959 when W. D. Lawson [3] of the U.K. Royal Radar Establishment, Malvern, discovered benefits of the alloy Mercury Cadmium Telluride (HgCdTe or MCT). This innovative material could detect LWIR radiation at the significantly higher temperature of 80 K because of lower dark current. The result was a dramatic decrease in cryocooler size, weight, and cost, with similar dramatic decreases in the respective support equipment. The weight of some systems, for example, was reduced from 600 lbs to less than 200 lbs, although some of that weight reduction can be attributed to the detectors also being made smaller.

According to Lucian “Luc” Biberman [4], a keen-eyed witness in the early 1950s and co-developer of the Sidewinder IR missile seeker, the principal hardware focus of that era was on simple radiometric instruments and air-to-air missile seekers. This focus resulted in the highly successful Sidewinder missile, which was largely the beneficiary of uncooled PbS detector technology. But perhaps more importantly, it led to organized methods to share information in the fledgling community of interest. First came the government-industry co-sponsored Guided Missile IR Conference (GMIR). That information-sharing, in turn, led to the establishment of the Infrared Information Symposium (IRIS) in 1956, which later became known as the Military Sensing Symposium (MSS). The MSS continues today in its extended charter to hold meetings and publish proceedings as a way to foster IR information exchange. The symposium is widely regarded in the community as an indispensable tool for workers in the field to stay abreast of important programs, technological advances, and marketing opportunities.

Developers, of course, walk a fine line between wanting to get their products exposed while simultaneously wanting to avoid giving away too much information to their competitors. However, most participants in government and industry agree that they all have more to gain than to lose from this forum, which is now nearing its 60th anniversary. Arguably, the military users have had the most to gain, as they have leveraged this forum to describe their needs, as well as the effectiveness of products they have tested and fielded. Thus, one important by-product of the MSS has been the creation of healthy competition to develop and field better solutions for the military user.

In the 1960s, the Vietnam War continued to have a major impact on IR imaging system development. The need to interdict supplies and troops infiltrating down the Ho Chi Minh Trail at night to avoid detection was a high priority. Early systems were relatively crude IR mappers, which initially were single detectors that were swiped one scan line at a time across the ground with a scan mirror. The signal output was fed into a glow bulb illuminating a spot on a photographic film carriage. The forward motion of the aircraft resulted in successive scan lines being imposed on a film strip fed by a reel that was synchronized to the speed of the aircraft (as illustrated in Figure 2).

![Figure 2: Illustration of an IR Mapper, Where an Image Scanned by a Rotating Mirror Is Transferred to Film via a Synchronously Scanning Glow Tube Modulated by the IR Detector Output. The Aircraft’s Forward Motion Adds the Second Dimension to the Raster Scan [5, 6].](/wp-content/uploads/2019/11/page25-900x335-800x298.png)
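The synchronization between scan rate, film speed, and aircraft motion follows from simple geometry. The sketch below is a minimal illustration, assuming a single detector looking straight down and scan lines that just touch on the ground. The function names and all numerical values are hypothetical and are not taken from any fielded mapper.

```python
def line_rate_hz(ground_speed_m_s: float, altitude_m: float, ifov_mrad: float) -> float:
    """Scan lines per second needed so adjacent lines just touch at nadir.

    Each line covers a ground strip of width altitude * IFOV, and the
    aircraft must advance by exactly one strip width between lines.
    """
    strip_width_m = altitude_m * ifov_mrad * 1e-3
    return ground_speed_m_s / strip_width_m


def film_speed_mm_s(lines_per_second: float, line_pitch_on_film_mm: float) -> float:
    """Film transport speed that lays successive lines side by side on film."""
    return lines_per_second * line_pitch_on_film_mm


if __name__ == "__main__":
    # Hypothetical example: 150 m/s ground speed, 1000 m altitude, 1 mrad IFOV.
    rate = line_rate_hz(ground_speed_m_s=150.0, altitude_m=1000.0, ifov_mrad=1.0)
    print(f"required line rate: {rate:.1f} lines/s")
    print(f"film speed at 0.1 mm per line: {film_speed_mm_s(rate, 0.1):.1f} mm/s")
```

The required line rate scales with the ratio of speed to altitude, so a low, fast pass demands a much faster scan mirror and film drive than a high, slow one.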

At the time, these systems were highly successful for reconnaissance but not much use for providing direct fire support. Nevertheless, they demonstrated the utility of IR imaging and soon led to directable real-time imaging systems, which we now call Forward-Looking IR (FLIR) systems.

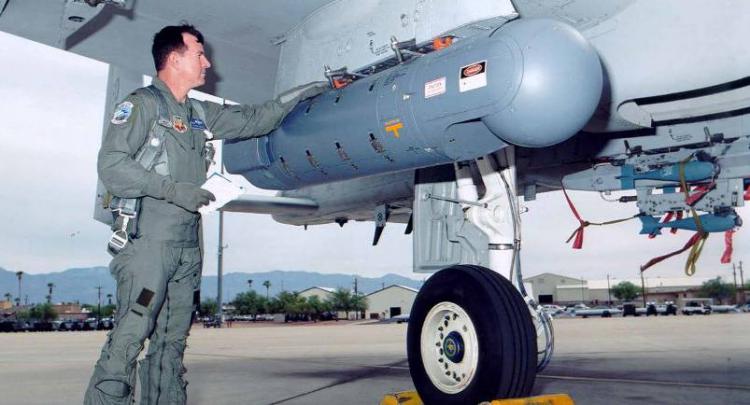

The curious name of FLIR was derived from the first directable sensors adding a vertical scan mirror so the detectors could be scanned in two directions. This feature enabled the system to look forward instead of down. Moreover, the resulting signal was fed through a scan converter so it could then be viewed in real time on a standard cathode ray tube. This development was a major advance, and systems were soon integrated into pod-like targeting systems that are still in use today on fighter bombers, gunships, and drones.

In the late 1960s and early 1970s, efforts were made to standardize IR technology to reduce cost and improve reliability. The resulting devices were referred to as “first generation” items. Accordingly, first-generation linear arrays of intrinsic MCT photoconductive (PC) detectors were developed that responded in the LWIR band. All services were required to adopt the standard “common module” building blocks pioneered by Texas Instruments and developed under Army supervision. Standardization and improved detectors facilitated high-volume production and dramatic cost reductions. As a result, the 1970s witnessed a mushrooming of IR applications. IR systems were mounted on all manner of platforms, ranging from ground armor, including tanks and armored personnel carriers, to aircraft and ships.

During this era, most of the world’s advanced militaries had image intensifiers, but their use required ambient illumination and a clear atmosphere. In overcast or smoke and dust conditions, image intensifiers were effectively blind. Nonetheless, the Army’s capability was effectively expanded from day warfare to day and night warfare. The Army’s motto became “we own the night,” and indeed it did. The FLIR systems built in this era from standardized components were later referred to as generation 1 or simply “Gen 1” systems.

The invention of charge transfer devices such as charge coupled devices (CCDs) and complementary metal-oxide-semiconductor (CMOS) switches in the late 1960s was another major breakthrough that led to the next generation of FLIRs. Detector arrays could now be coupled with on-focal-plane electronic analog signal readouts, which could multiplex the signal from a large array of detectors. These multiplexers were called readout integrated circuits (ROICs). They made it possible to eliminate the need for a separate dedicated wire to address each detector as well as the need for each detector to have its own dedicated amplifier circuit. They essentially decoupled the number of wires needed from the number of detectors used. This breakthrough enabled the fabrication of high-density arrays, which increased sensitivity and permitted building large arrays. Now, scanning line arrays with many columns and staring focal plane arrays (FPAs) were, in principle, no longer limited by the number of wires, preamplifiers, post amplifiers, etc., that could be packaged in a practical size.
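The idea behind the multiplexer can be sketched in a few lines. The toy model below is not a description of any particular ROIC. It only contrasts the lead count of a one-wire-per-detector design with a readout that steps through the array and places one sample at a time on a single shared output. The function names and the row-major scan order are assumptions chosen for illustration.

```python
from typing import Iterable, Iterator, List


def direct_wire_count(rows: int, cols: int) -> int:
    """Pre-ROIC model: one dedicated signal lead per detector."""
    return rows * cols


def multiplexed_readout(frame: List[List[float]]) -> Iterator[float]:
    """Toy multiplexer: visit each pixel in row-major order and place its
    sample on a single shared output line, one sample per clock."""
    for row in frame:
        for sample in row:
            yield sample


def demultiplex(stream: Iterable[float], cols: int) -> List[List[float]]:
    """Off-chip side: rebuild the 2-D frame from the serial sample stream."""
    samples = list(stream)
    return [samples[i:i + cols] for i in range(0, len(samples), cols)]


if __name__ == "__main__":
    frame = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
    rebuilt = demultiplex(multiplexed_readout(frame), cols=3)
    print("frame recovered intact:", rebuilt == frame)
    print("leads for a direct-wired 640x480 array:", direct_wire_count(480, 640))
```

In the multiplexed case the number of output lines stays fixed as the array grows; only the number of clock cycles per frame increases.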

FLIRs that used this advanced technology were referred to as “Gen 2” (although the Army used the term only for its scanned FPA approach). And of course, integrating Gen 2 detectors to readout electronics was not straightforward. Early assessments of this concept showed that photovoltaic (PV) detectors made from InSb, PtSi, and HgCdTe were essential because their high impedances were crucial for interfacing with the readout multiplexers. By contrast, lower impedance detectors would have drawn more current and power, which would have heated the focal plane and required correspondingly higher power and larger cryocoolers.

While HgCdTe PC detectors were the workhorses of Gen 1 FLIRs, it was not easy to make them work as PV devices. PV detectors require a delicate pn junction, which is much more susceptible to material defects and dark current. Nevertheless, the material itself had other qualities few other materials could match, such as a narrow bandgap with low dark current, which allowed it to be operated at 80 K in the LWIR band, the highly desired band for ground combat. Accordingly, in the late 1970s through the 1980s, MCT technology efforts focused almost exclusively on PV-device configurations.

This effort paid off in the 1990s with the birth of second-generation IR detectors, which provided large two-dimensional (2-D) scanning arrays for the Army. And by this time, the Air Force and the Navy were no longer constrained to adopt the Army’s standard. Subsequently, these Services developed staring arrays around InSb, which were less expensive, responded in the 3–5-µm band, and were better suited to their operating environment.

The 1990s also saw both military and civilian applications of IR technology receive a boost in interest when room temperature thermal detectors were perfected in the form of staring focal plane arrays. Recall that thermal detectors differ from the photon detectors described previously in that thermal detectors act like tiny thermometers and exhibit a change of temperature, which is then sensed. Generally, these detectors are much less sensitive than cooled photon detectors, but when large numbers are used in a staring focal plane array, they become sensitive enough to be used in important roles in driver’s night viewers, rifle scopes, and missile seekers. That ability, combined with their low cost, low power, and room temperature operation, made them extremely attractive.

In 1994, Honeywell patented a microbolometer thermal detection approach using vanadium oxide (VOx) that was developed under the government’s High-Density Array Development (HIDAD) program. The patent was subsequently licensed to many other U.S. aerospace companies and to some foreign countries under rigid export control restrictions.

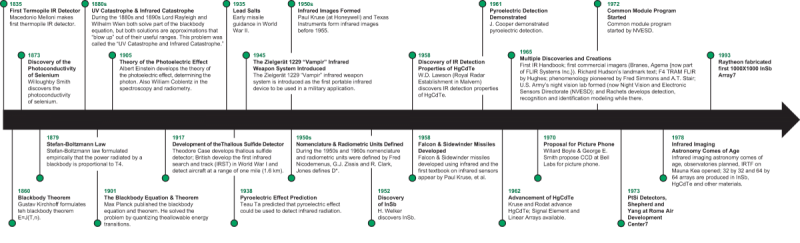

In addition, developments in FPA technology have revolutionized IR imaging. Progress in integrated circuit design and fabrication techniques has resulted in continued rapid growth in the size and performance of these solid-state arrays. The timeline illustrated in Figure 3 lists some significant events in the history of U.S. IR technology development leading up to the early 2000s.

- 1800: Sir William Herschel, an astronomer, discovered IR.

- 1950–1960: Single-element detectors first produced line scan images of scenes.

- 1970: Philips and English Electronic Valve (EEV) developed Pyro-Electric tubes. The English Royal Navy used the first naval thermal imager for shipboard firefighting.

- Mid-1970s: MCT technology efforts focused on Common Module (Gen 1) devices.

- 1970s–1980s: MCT and InSb technology efforts focused on PV and producibility of devices.

- 1978: Raytheon patented ferroelectric IR detectors using Barium Strontium Titanate (BST).

- Late 1980s: Microbolometer technology developed.

- 1980s–1990s: Significant focus on 2D InSb and uncooled device technologies. MCT technology efforts focused on Gen 2 scanning devices.

- 1990s–2000s: Initial technology development on MCT dual-band devices; MCT, InSb, and uncooled 2-D staring devices used widely in applications such as targeting and surveillance systems, missile seekers, driver aids, and weapon sights.

Significant efforts have been undertaken to insert these now-proven IR devices into military payloads and missile seekers, and later into commercial products. As a result of the success of military research and development programs, new applications were identified and products were moved into production. Thermal imaging technology provided the ability to see and target opposing forces through the dark of night or across a smoke-covered battleground. Not surprisingly, these properties validated the Army’s claim of owning the night, at least for the better part of two decades (from 1970 to about 1990).

Current Trends, Needs, and Future Projections

The reality today is that the U.S. military “shares the night” with its adversaries. But our future defense posture depends upon making sure we corner the greatest share of that night. Currently, threats often come from adversaries that employ unconventional tactics, but that fact doesn’t mean we can ignore adversaries that employ conventional tactics as well. Furthermore, we have traditionally chosen to avoid close combat in cities, preferring instead to use our superiority in long-range-standoff weapons to defeat conventional forces. However, evolving world demographics coupled with political turmoil have increasingly drawn conflict and warfare into urban areas, especially in parts of the world that have become increasingly unstable for various reasons. These urban populations provide a large, ideal environment for enemy combatants to hide and operate in as well as a challenge for U.S. forces to try to deploy conventional weapons and tactics. Hence, there is a great need for an extensive strategizing, reequipping, and retraining of the U.S. military to successfully cope with urban warfare. New high-performance IR imaging systems already play a critical role in this type of warfare, and an even bigger role will likely be played by more advanced systems currently in development.

Ultimately, success in urban warfare largely depends upon one’s ability to accomplish the following (as adapted from Carson [7]):

- Find and track enemy dismounted forces, even when their appearance is brief or mixed with the civilian population.

- Locate the enemy’s centers of strength (e.g., leadership, weapons caches, fortified positions, communication nodes, etc.), even when camouflaged or hidden in buildings.

- Attack both light and heavy targets with precision, with only seconds of latency and little risk to civilian populations and infrastructure.

- Protect U.S. forces from individual and crew-served weapons, mines, and booby traps.

- Employ robots in the form of drones, such as unmanned air vehicles (UAVs) and unmanned ground vehicles (UGVs) as well as unattended sensors.

- Protect our own forces and homeland infrastructure from these same drones, which, in miniature, can fly in undetected while carrying miniature IR sensors that allow for precise day/night delivery of explosives.

![Figure 4: The ARGUS-IS Persistent Surveillance System [8–11].](/wp-content/uploads/2019/11/ms-ir-figure-4b.jpg)

To meet these requirements, imaging systems must provide persistent surveillance from platforms located almost directly overhead and from small, stationary, and maneuverable platforms on the ground. Also needed are imaging systems that perform targeting and fire control through haze, smoke, and dust. Overhead systems must have the resolution to recognize differences between civilian and military dismounts. Some of them must have the ability to perform change detection based on shape and spectral features. Others must have the ability to quickly detect and locate enemy weapons by their gun flash and missile launch signatures.

Near-ground systems must have the resolution and sensitivity to identify individuals at relatively short ranges from their facial and clothing features and from what they are carrying. These systems must also be able to accomplish this identification through windows and under all weather and lighting conditions. Some systems must also be able to see through obscurations, such as foliage and camouflage netting. In addition, in most cases, collected imagery will be transmitted to humans who are under pressure to examine it and make quick, accurate decisions. As such, it is important that imagery be of a quality that is highly intuitive and easily interpretable. This persistent “up close and personal” sensing strategy requires many and varied platform types. Cost is also an important factor in this equation, due to ongoing budget constraints. Both the sensors and the platforms that carry them must be smaller, lighter, and more affordable.

Solutions to some of the surveillance requirements are being addressed with advanced persistent surveillance systems, such as ARGUS-IS and ARGUS-IR (illustrated in Figure 4). ARGUS-IS has an enormous array of 368 optically butted FPAs using 4 co-boresighted cameras. They combine for a total of 1.8 gigapixels that can provide separate images of 640×480 pixels to as many as 65 operators. The operators can independently track separate ground objects or persons of interest within the ground footprint of the combined sensors with a ground resolution of approximately 4 inches at a 15-kft platform altitude. ARGUS-IS operates in the visible/near IR (V/NIR) spectral band and requires daylight, but the Department of Defense (DoD) is developing the more advanced MWIR version (ARGUS-IR) to field comparable capability at night. ARGUS-IR will provide more than 100 cooled FPAs each with 18 megapixels [8–11].
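The ARGUS-IS figures quoted above can be cross-checked with some back-of-the-envelope arithmetic. The sketch below uses only the numbers given in the text, plus two simplifying assumptions that are not from the source: that the combined footprint is square and that the 4-inch ground resolution applies uniformly to every pixel. The variable and function names are illustrative.

```python
import math

# Figures quoted in the text.
TOTAL_PIXELS = 1.8e9          # combined pixel count
NUM_FPAS = 368                # optically butted focal plane arrays
GROUND_SAMPLE_INCHES = 4.0    # approximate ground resolution at 15 kft
WINDOW_PIXELS = (640, 480)    # image size delivered to each operator
NUM_OPERATORS = 65


def square_footprint_side_ft(total_pixels: float, sample_inches: float) -> float:
    """Side length of the ground footprint, ASSUMING a square pixel grid
    with uniform ground sampling (a simplification, not a source figure)."""
    return math.sqrt(total_pixels) * sample_inches / 12.0


pixels_per_fpa = TOTAL_PIXELS / NUM_FPAS
footprint_side_ft = square_footprint_side_ft(TOTAL_PIXELS, GROUND_SAMPLE_INCHES)
window_ground_ft = tuple(n * GROUND_SAMPLE_INCHES / 12.0 for n in WINDOW_PIXELS)
fraction_viewed = NUM_OPERATORS * WINDOW_PIXELS[0] * WINDOW_PIXELS[1] / TOTAL_PIXELS

if __name__ == "__main__":
    print(f"pixels per FPA: {pixels_per_fpa / 1e6:.1f} megapixels")
    print(f"footprint side (square assumption): {footprint_side_ft / 5280.0:.1f} miles")
    print(f"one operator window on the ground: "
          f"{window_ground_ft[0]:.0f} ft x {window_ground_ft[1]:.0f} ft")
    print(f"share of pixels viewed by all operators at native resolution: "
          f"{100.0 * fraction_viewed:.1f}%")
```

Under these assumptions each FPA contributes roughly 5 megapixels, and all 65 operator windows together cover only about 1% of the collected pixels at native resolution, which illustrates why the imagery is served as independent windows rather than as a single full-resolution picture.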

On the opposite side of the IR sensor form factor spectrum are miniature IR surveillance technologies, such as the uncooled LWIR microbolometer pictured in Figure 5.

Figure 5: The FLIR Inc. Lepton Microbolometer.

These types of sensors are being used in both commercial and military applications. Because of their small size, they have an inherently low size-weight-power-cost (SWaPC) and can be deployed by a variety of means in a variety of terrains and urban environments. They can run unattended for a long time on batteries, are capable of taking pictures that can be recorded or transmitted, and, in general, are so inexpensive that they can be considered expendable.

While the emergence of small surveillance drones has driven the need for lower weight and lower volume payloads, in some cases, performance cannot be sacrificed, and microbolometers cannot meet all of these needs. In these instances, there is an ongoing drive for small-pitch FPAs that operate at higher temperatures, often above 150 K. Overall sensor size, for equal performance, scales with detector pitch as long as the aperture size is maintained. Smaller pixels allow for a reduction in the dewar and cooler size as well as reductions in weight and size of the optics. Accordingly, package size and, to a large degree, package weight can be reduced in proportion to detector pitch. The current trend appears to be moving to 8–10-µm pitch for MWIR sensors, but some LWIR FPAs are being made with a pitch as small as 5 µm.
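The scaling argument can be illustrated with first-order optics. The sketch below is a simplified model, assuming that "equal performance" means the same angular resolution per pixel (IFOV), the same aperture, and the same pixel count. The function name, the parameter values, and the use of the product F·λ/d as a measure of how well the optics blur is matched to the pixel are all choices made for this example, not figures from any specific sensor.

```python
from typing import Dict


def sensor_geometry(pitch_um: float, ifov_urad: float, aperture_mm: float,
                    pixels_across: int, wavelength_um: float) -> Dict[str, float]:
    """First-order geometry of a staring sensor.

    IFOV = pitch / focal length, so for a fixed IFOV the focal length is
    proportional to the detector pitch.
    """
    focal_length_mm = pitch_um / ifov_urad * 1e3   # (um / urad) gives metres
    f_number = focal_length_mm / aperture_mm
    return {
        "focal_length_mm": focal_length_mm,
        "f_number": f_number,
        "fpa_width_mm": pixels_across * pitch_um * 1e-3,
        # Ratio of the diffraction scale (F * lambda) to the pixel pitch.
        "f_lambda_over_d": f_number * wavelength_um / pitch_um,
    }


if __name__ == "__main__":
    # Hypothetical MWIR sensor evaluated at two pitches; all else held fixed.
    common = dict(ifov_urad=100.0, aperture_mm=80.0, pixels_across=1280,
                  wavelength_um=4.0)
    for pitch_um in (16.0, 8.0):
        g = sensor_geometry(pitch_um=pitch_um, **common)
        print(f"pitch {pitch_um:4.1f} um: focal length {g['focal_length_mm']:6.1f} mm, "
              f"F/{g['f_number']:.1f}, FPA width {g['fpa_width_mm']:5.2f} mm, "
              f"F*lambda/d = {g['f_lambda_over_d']:.2f}")
```

Halving the pitch halves both the focal length and the array width while leaving F·λ/d unchanged, which is the sense in which the package shrinks in proportion to pitch. The model ignores practical limits, such as how fast the optics can be made.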

Readily available commercial microbolometers are a potential security threat for U.S. forces even though these imagers have lower resolution and sensitivity than what advanced technology can provide. Ironically, their presence requires even more advanced technology because some of our adversaries are uninhibited by protocols and will typically fire on our forces even with cheap, low-resolution sensors and poor-resolution images. U.S. forces, on the other hand, fire only when the enemy can be positively identified. Thus, it is hoped that the emerging systems currently under development will have an answer for the proliferation of IR imagers in the hands of our adversaries.

The Army’s desire for increased standoff range resulted in the emergence of a third generation (Gen 3) of staring sensors with both MWIR and LWIR capability. The shorter MWIR wavelength offers nearly twice the range of the LWIR band in good weather, but the LWIR band excels in battlefield smoke and dust and provides greater range in cold climates. One of the particular challenges for the Gen 3 systems is reducing cost. The high cost is generally associated with both low detector yield and complex optics. Reducing the detector cost is being explored on two fronts: alternate substrates and new detector materials. A Gen 3 detector is made by placing an MWIR detector material behind LWIR material so the two bands occupy the same space in the focal plane. Only two materials currently offer the potential to accomplish this effect: MCT and superlattices. MCT is most easily made on CdZnTe substrates because the two materials’ crystal lattices match well, thus providing higher yield. However, lower cost GaAs and Si substrates are also being explored with considerable success.

The other front exploits the potential for a radically different material type called a superlattice. Superlattices exploit nanotechnology to engineer materials from the III–V columns of the periodic table to make alloys such as InAsSb and InAs. In principle, superlattices have many favorable characteristics, such as being strong, stable, and inexpensive. However, they have wide band gaps. So, to detect low-energy MWIR and LWIR photons, they have to be fabricated in thin alternating layers to form quantum wells. These structures have the additional benefit of being compatible with another breakthrough in detector design, that of negative-barrier-negative (nbn) junctions. These junctions have an advantage over traditional positive-negative (pn) junctions (such as are commonly used in commercial solar cells) in that they can better suppress the dark current that arises from latent heat in the material. This, in turn, offers the potential for higher temperature operation. Current success so far has largely been in the MWIR region, but the expectation is that success will eventually be attained in the LWIR region as well. It remains to be seen if it will offer a better solution than MCT detectors.

![Figure 6: A Passive/Active Targeting System, With the FLIR Providing Passive Target Detection and the LADAR Providing Active Identification [12] (Copyright BAE, UK, All Rights Reserved).](/wp-content/uploads/2019/11/ms-figure6.png)

Figure 6: A Passive/Active Targeting System, With the FLIR Providing Passive Target Detection and the LADAR Providing Active Identification [12] (Copyright BAE, UK, All Rights Reserved).

The dual-band Gen 3 approach is actually a subset of multispectral and hyperspectral imaging. These imaging types offer additional modalities and are often best exploited through the use of sensor fusion techniques. But they face several technological challenges and are still in development. Multi-spectral images must be displayed or processed simultaneously in each band to extract target information. Additionally, for operator viewing, they must be combined into a single composite image using a color vision fusion approach. The best approach for accomplishing the image fusion and operator display is currently being investigated. However, initial results have shown impressive reductions in false alarm rate and probability of missed detections when, for instance, searching for targets hidden in deep tree canopies and/or under camouflaged nets.

Airborne and naval platforms have taken an entirely different approach to gaining extended range target identification. Their approach can, in principle, triple the range of existing targeting FLIRs. These platforms are adopting passive/active hybrid systems consisting of passive IR imaging for target detection in combination with active LADAR (a combination of the words “laser” and “radar”) for high-resolution identification. Pictured in Figure 6 are example images provided by BAE. In principle, LADARs can image with much shorter wavelengths, 1.54–1.57 µm, to greatly reduce the diffraction blur diameter of the optics with a corresponding increase in range. Moreover, this choice of wavelengths is eyesafe. These systems have just recently been fielded on aircraft and ships. Figure 7 shows a picture of the Air Force’s Laser Target Imaging Program (LTIP) pod.
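The wavelength advantage of the LADAR can be estimated from the standard diffraction formula for a circular aperture, in which the angular diameter of the Airy disk (to its first dark ring) is 2.44 λ/D. The sketch below is illustrative only: the 100-mm aperture and the comparison wavelengths are assumed values, and real identification range also depends on atmosphere, laser power, detector noise, and other factors not modeled here.

```python
def airy_angular_diameter_urad(wavelength_um: float, aperture_mm: float) -> float:
    """Angular diameter of the Airy disk (first dark ring), in microradians.

    theta = 2.44 * lambda / D for a circular, unobscured aperture.
    """
    wavelength_m = wavelength_um * 1e-6
    aperture_m = aperture_mm * 1e-3
    return 2.44 * wavelength_m / aperture_m * 1e6


if __name__ == "__main__":
    APERTURE_MM = 100.0  # hypothetical aperture shared by the LADAR and the FLIR
    ladar = airy_angular_diameter_urad(1.55, APERTURE_MM)
    for label, wavelength_um in [("LADAR", 1.55), ("MWIR", 4.0), ("LWIR", 10.0)]:
        blur = airy_angular_diameter_urad(wavelength_um, APERTURE_MM)
        print(f"{label:5s} {wavelength_um:5.2f} um: blur {blur:6.1f} urad "
              f"({blur / ladar:.1f}x the LADAR blur)")
```

Because the blur scales directly with wavelength, a 1.55-µm system through the same aperture has a diffraction blur two to three times smaller than an MWIR imager operating across the 3–5-µm band, which is the right order of magnitude for the range improvement described above if resolution is the limiting factor.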

Perhaps the biggest breakthrough in this area, however, is about to be achieved. The “Holy Grail” of imaging systems has long been to provide their own ability not only to see but to understand what they are seeing. For instance, drones are merely flying platforms that are useless without their data link. And in future combat, data link survival is not assured. Soon, LADARs are expected to solve the challenge of image understanding in autonomous systems by advancing to 3-D shape profiling of targets. Current 2-D “automatic target recognition” technology has yet to accomplish that advancement in spite of millions of dollars and more than three decades of research [10]. But if targets can be profiled in 3-D and then compared to a stored library of 3-D wireframe target models, the goal might finally be achieved. That is because it would be highly unlikely to mistake an object for a false target when it is accurately compared in three dimensions and when it is presented with an appropriate FLIR thermal signature as well. And one can only wonder if hybrid 3-D LADARs/FLIRs might one day even open up the battlefield to the real Holy Grail: the replacement of a human warrior on the battlefield with a robot warrior.

Passive/active fused sensors also promise to provide a solution to one of the more ominous threats facing our nation from domestic terrorism—the wide availability of cheap micro-drones equipped with thermal cameras and capable of carrying explosives. Threats such as these, which could be flown out of the trunk of a car at night, could ostensibly be made with radar cross sections too small for expensive and bulky conventional air defense radars to detect, thus potentially holding hostage the entire infrastructure of a nation. Moreover, radars might have to be placed on almost every corner to avoid structural masks.

On the other hand, small, compact, and inexpensive laser scanners, such as those used on some self-driving automobiles today, could be widely deployed around critical infrastructure, such as military installations, financial centers, and the power grid. They could establish a 3-D reference space of known objects and then cue off of change detection whenever new objects enter that space. Their high resolution could permit object identification; and ultimately, when equipped with higher power adjunct lasers, they could be able to dazzle or otherwise blind the drone’s sensors, if not destroy the drone outright. Such a defense would be far preferable to using gun fire or missile interceptors in densely populated urban areas. Thus, passive/active IR sensors are not only transformative on the battlefield; they also offer the promise of being transformative everywhere.
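The idea of cueing off changes in a 3-D reference space can be sketched with a simple voxel grid. The example below is a toy illustration under strong assumptions: the scanner returns points in a fixed, already-registered coordinate frame, there is no measurement noise, and a new object is anything that occupies a cell that was empty in the reference scan. The function names and the 0.5-m voxel size are hypothetical, and a practical system would need filtering, tracking, and classification on top of this.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]   # x, y, z in metres
Voxel = Tuple[int, int, int]         # integer grid cell indices


def voxelize(points: Iterable[Point], voxel_size_m: float) -> Set[Voxel]:
    """Map each 3-D point to the index of the grid cell that contains it."""
    return {
        (math.floor(x / voxel_size_m),
         math.floor(y / voxel_size_m),
         math.floor(z / voxel_size_m))
        for x, y, z in points
    }


def new_occupied_voxels(reference: Iterable[Point], current: Iterable[Point],
                        voxel_size_m: float) -> Set[Voxel]:
    """Cells occupied in the current scan that were empty in the reference."""
    return voxelize(current, voxel_size_m) - voxelize(reference, voxel_size_m)


if __name__ == "__main__":
    # Hypothetical scans: two known objects, then the same scene plus an intruder.
    reference_scan = [(0.1, 0.1, 0.1), (5.2, 0.3, 0.0)]
    current_scan = reference_scan + [(2.6, 2.4, 10.2)]
    print("cells to investigate:",
          new_occupied_voxels(reference_scan, current_scan, voxel_size_m=0.5))
```

Only the cells flagged by the set difference need closer inspection, which keeps the cueing step cheap even when the reference scene itself is large.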

Finally, there is at least one more transformative emerging IR technology, and it is already being tested. This involves digital readout integrated circuits (DROICs) now in development [14]. Recall that all Gen 2 and Gen 3 FLIRs, as well as many LADARs, are enabled by analog ROICs. These devices provide the critical capability required to multiplex millions of parallel detector signals into a serial output signal placed onto a single wire. A major problem they have, however, is the lack of charge storage capacity. IR scenes produce enormous “background” flux, and the desired signal is only a small percentage of that flux. Existing ROICs cannot store the resulting charge in their pixels and must instead shorten their integration time to discard the additional charge. Of course, the signal also gets discarded at the expense of sensitivity.
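The charge-storage limit and its cost in sensitivity can be illustrated with a simple shot-noise model. In the sketch below, an analog pixel must stop integrating once its well is full, whereas a counting pixel keeps tallying charge for the whole frame, up to the limit of its counter. All numbers (flux, well capacity, counter width, electrons per count) are hypothetical values chosen for illustration, the function names are invented for this example, and the model ignores read noise and other real-world effects.

```python
import math


def analog_collected_electrons(rate_e_per_s: float, frame_time_s: float,
                               well_capacity_e: float) -> float:
    """Electrons kept by an analog pixel whose integration time is cut
    short so that the well never overflows."""
    integration_time_s = min(frame_time_s, well_capacity_e / rate_e_per_s)
    return rate_e_per_s * integration_time_s


def counting_collected_electrons(rate_e_per_s: float, frame_time_s: float,
                                 counter_bits: int, electrons_per_count: float) -> float:
    """Electrons tallied by a counting pixel over the full frame time,
    limited only by the largest value its counter can hold."""
    counter_limit_e = (2 ** counter_bits - 1) * electrons_per_count
    return min(rate_e_per_s * frame_time_s, counter_limit_e)


def shot_noise_snr(collected_e: float, signal_fraction: float) -> float:
    """SNR when the signal is a small fraction of a background whose
    noise is the square root of the collected electrons."""
    return signal_fraction * collected_e / math.sqrt(collected_e)


if __name__ == "__main__":
    RATE = 1e10          # hypothetical photoelectrons per second per pixel
    FRAME_TIME = 1 / 30  # seconds
    analog = analog_collected_electrons(RATE, FRAME_TIME, well_capacity_e=1e7)
    counted = counting_collected_electrons(RATE, FRAME_TIME, counter_bits=16,
                                           electrons_per_count=1e4)
    gain = shot_noise_snr(counted, 0.001) / shot_noise_snr(analog, 0.001)
    print(f"analog pixel keeps {analog:.2e} electrons per frame")
    print(f"counting pixel keeps {counted:.2e} electrons per frame")
    print(f"shot-noise-limited SNR improvement: {gain:.1f}x")
```

In this model, sensitivity improves as the square root of the additional charge collected, so a pixel that can use the whole frame time instead of a small fraction of it gains several-fold in signal-to-noise ratio.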

However, DROICs are redefining the paradigm because they “count” the photoelectrons as they are being generated before they are discarded. This breakthrough capability is the result of Moore’s law in microelectronics. It is projected that an entire Intel 8086 microprocessor will fit within a single 30-µm-square pixel by the year 2018, when 7-nm feature sizes are expected to become available! And it isn’t just sensitivity that stands to improve, but signal processing as well. With so much processing power embedded in each IR pixel, it will be possible to implement such space- and power-hungry off-chip tasks as image stabilization, change detection, passive ranging from optical flow calculations, super-resolution, and time-delay-and-integration. LADARs will be able to perform range measurements within each pixel to high accuracy, which will enable them to measure the shape of even small objects and thus improve their ability to identify hand-held threats, such as handguns. Inarguably, such capabilities are on the verge of yielding still more transformative changes in IR technology.

Conclusions

IR technology remains a key component in the U.S. defense posture, and it is hard to imagine how the country would defend itself without the benefit of IR surveillance and targeting systems. However, as with most technologies, IR technology is also diffusing throughout the world, making our current advantage but an instant in time. And if we consider the implications of the historian’s famed adage—“those who ignore the lessons of history are condemned to repeat them”—we recognize how truly ephemeral this advantage is. Thus, it is not the current advantage that is key; rather, it is the rate of technical advancement that is important.

To be sure, the U.S. IR advantage can be sustained if we retain some of the policies and pathways that got us to where we are today. These policies include continuing to find and secure adequate DoD funding. Admittedly, these funds are chronically limited, so we must also continue to leverage and optimize them as much as possible. In the past, this leverage and optimization have been achieved via close working relationships between government laboratories, industry, and academia. Particularly important has been the role of IRIS and the MSS in sponsoring regular meetings. Those meetings promote technical exchanges at a level that helps all while not undermining the benefits of healthy competition.

Technology leverage and optimization have also been successfully achieved through large, collaborative programs, such as the DoD-funded Vital Infrared Sensor Technology Acceleration (VISTA) program, which seeks to develop a baseline of shared technical knowledge and fabrication infrastructure. Through such programs, each participating company does not have to make a separate, redundant investment in critical underpinning capabilities, yet it can add value by the way it manages the products and innovates beyond that framework.

Finally, advances in IR technology have been, and will continue to be, largely driven by advances in materials and in microelectronics. The latter area is advancing exponentially by Moore’s law. Thus, one can expect the already breathless pace of advancements to be even more rapid going forward.

References

- Johnston, S. F. A History of Light and Colour Measurement: Science in the Shadows. Boca Raton, FL: CRC Press, Taylor & Francis Group, 2001.

- Arnquist, W. N. “Survey of Early Infrared Developments.” Proceedings of the IRE, September 1959.

- Rogalski, A. “History of Infrared Detectors.” Opto-Electronics Review, vol. 20, no. 3, 2012.

- Biberman, L. M., and R. L. Sendall. “Introduction: A Brief History of Imaging Devices for Night Vision.” Chapter 1 in Electro-Optical Imaging: System Performance and Modeling, Bellingham: SPIE Press, 2000.

- Hunt, G. R., J. W. Salisbury, and W. E. Alexander. “Thermal Enhancement Techniques: Application to Remote Sensing of Thermal Targets.” In Res. Appl. Conf., 2d, Washington, DC, Proceedings: Office of Aerospace Research, pp. 113–20, March 1967.

- Harris, D. E., and C. L. Woodbridge. National Electronics Conference. vol. 18, 1962.

- Carson, Kent R., and Charles E. Myers. “Global Technology Assessment of High-Performance Imaging Infra-Red Focal Plane Arrays.” Unpublished draft, Institute for Defense Analyses, October 2004.

- Maenner, Paul F. “Emerging Standards Suite for Wide-Area ISR.” Proceedings of the Society of Photo-Optical Instrumentation Engineers (SPIE), vol. 8386, May 2012.

- Defense Advanced Research Projects Agency. “Autonomous Real-Time Ground Ubiquitous Surveillance – Infrared (ARGUS-IR) System.” DARPA-BAA-10-02, 2010.

- Maxtech International. “Infrared Imaging News.” July 2010.

- Schmieder, D. E. Infrared Technology & Applications. Course Notes, Georgia Tech, April 2015.

- Baker, I., S. Duncan, and J. Copley. “A Low Noise, Laser-Gated Imaging System for Long Range Target Identification.” Proceedings of the Society of Photo-Optical Instrumentation Engineers (SPIE), vol. 5406, pp. 133–144, 2004.

- Ratches, J. A. “Review of Current Aided/Automatic Target Acquisition Technology for Military Target Acquisition Tasks.” Optical Engineering, vol. 50, no. 7, July 2011.

- Schultz, K. I., M. W. Kelly, J. J. Baker, M. H. Blackwell, M. G. Brown, C. B. Colonero, C. L. David, and D. B. Tyrrell. “Digital-Pixel Focal Plane Array Technology.” Lincoln Laboratory Journal, vol. 20, no. 2, 2014.