Multiple factors limit the potential of modern autonomous systems (e.g., self-driving vehicles and uncrewed aircraft and watercraft).

Because training in the real world is expensive, autonomy is typically learned through modeling and simulation. The process generally works like this:

- A model of the intended platform requiring autonomy is created.

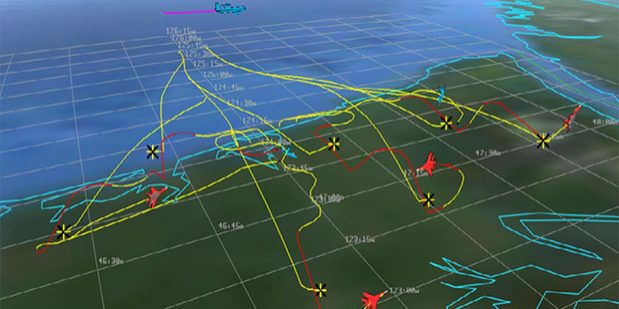

- The model is run through many simulations in as realistic an environment as possible, generating the data used to train the autonomous system to make the right decisions.

- Once the model is sufficiently trained, what it has learned is transferred to a physical system and tested to confirm that the training holds up.

Training models in high-fidelity environments for Defense Department platforms can take months or even years. Furthermore, autonomy becomes vulnerable when it faces situations or observations in the real world that it never saw in training. This brittleness is known as the simulation-to-real (sim-to-real) gap. For example, a drone moving from a dense city to a coastal environment would encounter a dramatically different observation space.

Compared with commercial autonomous systems, such as warehouse robotics or autonomous vehicles operating in geofenced, controlled environments, military systems face far more unknown variables. For instance, flight dynamics could be off, lighting conditions are likely to vary, and it’s often impossible to model an adversary precisely as it would act in the real world.
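To make the sim-to-real gap concrete, here is a minimal toy sketch of the workflow described above, not a representation of any real training pipeline. It assumes a one-dimensional platform with a proportional controller, and "training" is just a grid search over the controller gain in simulation. The function `rollout_cost` and the two actuator-gain values are hypothetical, chosen only so that the "real" dynamics differ from the simulated ones.

```python
def rollout_cost(controller_gain, actuator_gain, steps=20):
    """Sum of squared position error while driving the error x toward zero."""
    x = 1.0  # initial position error
    cost = 0.0
    for _ in range(steps):
        cost += x * x
        u = -controller_gain * x       # proportional control command
        x = x + actuator_gain * u      # toy one-dimensional dynamics
    return cost


# Hypothetical values: the simulator assumes one actuator response,
# while the physical platform responds more strongly to the same command.
SIM_ACTUATOR_GAIN = 1.0
REAL_ACTUATOR_GAIN = 2.2

# "Training": pick the controller gain that performs best in simulation only.
candidates = [i / 10 for i in range(16)]  # 0.0, 0.1, ..., 1.5
best_gain = min(candidates, key=lambda k: rollout_cost(k, SIM_ACTUATOR_GAIN))

# "Transfer": evaluate the same controller under the mismatched dynamics.
sim_cost = rollout_cost(best_gain, SIM_ACTUATOR_GAIN)
real_cost = rollout_cost(best_gain, REAL_ACTUATOR_GAIN)

print(f"gain tuned in simulation: {best_gain}")
print(f"cost in simulation:       {sim_cost:.2f}")
print(f"cost on 'real' platform:  {real_cost:.2f}")
```

The controller that is optimal in simulation overshoots and diverges once the dynamics are off. This is the kind of brittleness the sim-to-real gap refers to, here reduced to a single mismatched parameter.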