Introduction

The 2022 National Defense Strategy directs the U.S. Department of Defense (DoD) to urgently act to strengthen the U.S. military against its pacing challenge—the People’s Republic of China (PRC) [1]. The PRC challenges the U.S. military’s information advantage in the operational environment, undermining kinetic maneuver across all domains of the Joint Force [2]. Within the air domain, the PRC’s ability to disrupt, deny, and degrade the data informing air operations will threaten the U.S. Air Force’s Operational Imperative of tactical air dominance and the Air Force Future Operating Concept of the successful fight for air superiority at the forward edge of the battlespace. Human-machine teaming (HMT) with artificial intelligence (AI)-driven air power platforms, such as the uncrewed, autonomous, “robotic wingmen” aircraft known as collaborative combat aircraft (CCA), will thwart the PRC’s pursuit of information advantage. CCA employment will shrink the kill chain and expand the air domain’s lethality by providing qualitative and quantitative optimization of Air Force operators’ observe, orient, decide, and act (OODA) loop and targeting cycle.

However, HMT optimization depends upon human-data accountability, which is the human ownership, understanding, and implementation of the data that the CCAs use to execute tactical decisions autonomously. In the future operating environment, data will be the critical pivot between human command of CCAs and CCA algorithmic effects on the battlespace. To best equip the Joint Force for future CCA employment against the PRC threat, the DoD must consider new criteria to determine the next generation of its readiness reporting for robotic wingmen—the combat readiness of CCA data.

This article will recommend a CCA data readiness tool that quickly informs commanders of the level of risk they assume, based on data criteria rankings, and will use the tool’s application to cognitive electronic warfare (CEW) CCAs as a vignette. It will also suggest the personnel, policy, and technology investments to optimize each data readiness criterion.

Autonomous Weapons System’s (AWS’s) “Black Box” Challenge

AWSs are transformational technologies for future warfare. The incremental development of AWSs that “once activated, can select and engage targets without further human intervention” has led to human operators moving further from the immediate decision-making on the use of force [3]. This human-machine interaction weaponizes AI by distributing an agency to an AWS that is inaccessible to human reasoning. While many computational techniques are summarized under the “AI” term, the autonomous systems that the military is currently developing fall under the “narrow AI” category.

At the heart of narrow AI applications are machine-learning (ML) algorithms that are data-hungry and data-dependent [3]. AWS’s reliance on data processed by ML algorithms presents a fundamental problem for commanders using it on the battlefield. Today’s ML algorithms are in a black box that cannot explain or guarantee certain behaviors. This problem raises an important question—how can commanders be reasonably responsible for using an AWS if they do not know the system’s decision-making process? Fortunately, AWS’s current black box behavior is not a foregone conclusion that commanders must reluctantly accept as necessary for future Warfighting and information dominance.

The question of trust in machines for risk calibration and the extent of meaningful human control (MHC) for the ethical employment of autonomous weapons systems are not novel. As weapons systems have become technologically sophisticated, research regarding machine trust and MHC has grown in practical application. The lessons gained from past automatic weapon employment and MHC research form a valuable foundation to consider future commander trust and risk assessment of autonomous systems on the battlefield.

MHC Applied to AWSs

Autonomy and automation have “long been integrated into the critical functions of air defense systems to detect, track, prioritize, select, and potentially engage incoming air threats” [3]. In their “automatic” mode, air defense systems autonomously deploy countermeasures if they detect a threat; however, human operators are “on-the-loop,” allowing them to supervise the system’s actions and abort the attack. In this case, human operators retain situational awareness and have sufficient insights into the parameters under which the command module selects and prioritizes targets. However, to break down the noteworthy problems with autonomous air defense operations, it is helpful to understand three dimensions of MHC discovered through human factors research—a technological dimension through weapon design, a conditional dimension that limits weapons use, and a decision-making dimension that defines acceptable human-machine interaction. All three dimensions must be considered when employing autonomous systems to reach an ethically responsible level of MHC.

In the case of air defense systems, the compromise of MHC has led to many severe incidents of friendly fire, specifically in the human-machine interaction dimension. For example, a series of fratricides involving the Patriot system, a human-in-the-loop air defense system, was attributed to excess trust that made the system a de facto fully autonomous weapon [4]. A thorough analysis of these friendly fire incidents by autonomous air defense systems identified the following challenges: automation bias or overtrust, lack of system understanding, lack of situational awareness, lack of time for deliberation, lack of human expertise, inadequate training, and operating under high-pressure combat situations [3].

As autonomy increases, the loss of user alertness is proportional to the system’s enhanced automation and perceived reliability, leading to the “automation conundrum” [4]. Despite this identified issue of MHC over Patriot equipment in its “automatic” mode during the fratricide incidents, the Army’s readiness assessment of Patriot units continues to be exclusively tied to its maintenance requirements and equipment replacement rates as part of its Patriot recapitalization program [5]. Autonomous operations have unfortunately increased without proper risk considerations and readiness evaluations of their data-driven autonomy algorithms, leading to operations in which meaningless human control is accepted as an unfortunate yet still appropriate use of force.

Pairing HMT With MHC

This state of reluctant risk acceptance is not only incompatible with the future ethical employment of AWS but challenges optimal HMT during operational employment. The rise in automation necessitates a reconceptualization of trust between humans and automated systems. Undertrust in a system can lead to its lack of use, and overtrust can lead to complacency and poor monitoring [6]. Since under- and overtrust are problematic, appropriate trust calibration is critical to effective HMT and risk assessment. Increasing automation has led to the advent of a new HMT paradigm [7]. Within this paradigm, the machine is a teammate of the human, who has innovative abilities to be exploited rather than liabilities for which to be compensated. To determine how humans can appropriately calibrate trust in future AWSs to best facilitate HMT, it is helpful to examine how to apply MHC models to commander trust calibration in operational weapons systems with autonomous features [3].

Human factors research has shown three key variables influencing HMT with automated systems: (1) the human trustor, (2) the automated machine trustee, and (3) the context in which the interaction occurs [6]. These variables nest within MHC’s three technological, conditional, and human-machine interaction dimensions in autonomous weapons systems. The technological dimension of MHC represents the machine’s system factors, which include physical system attributes and performance factors. The conditional dimension of MHC includes environment and context-related factors, which involve team collaboration and task-based factors like type and complexity. MHC’s final human-machine interaction dimension represents the human trustor, which clarifies the human’s understanding of his or her role in the shared work with an automated system.

For human operators to regain meaningful control of autonomous systems, which enables the appropriate calibration of trust in employing combat-ready robotic wingmen, the following three prerequisite conditions, which apply human factors variables and MHC dimensions to CCAs, must be met [3]:

- A functional understanding of how the targeting system operates (automated machine trustee variable with the technological dimension).

- Sufficient situational understanding (context variable with the conditional dimension).

- The capacity to scrutinize machine targeting decision-making (human trustor variable with the human-machine interaction dimension).

Optimized teaming between Warfighters and AWSs begins with optimized human-data accountability that hinges on these three HMT human factors variables combined with the three dimensions of MHC—technological, conditional, and human-machine interaction.

Applying CCA With the CEW Mission

Within the Air Force, CCAs will be the future air domain AWS that flies autonomously alongside crewed platforms, testing HMT concepts against the PRC threat [8]. CCAs will harness autonomy, AI, and ML to present formidable airpower capacity against hostile air threats in highly contested environments. These loyal, robotic wingmen can team with human operators by offloading data analysis tasks such as suggesting flight corridors, mapping targets, and recommending appropriate courses of action [4]. Additionally, to increase the survivability of crewed platforms within the PRC’s highly contested and lethal environment, dense with anti-access/area denial (A2/AD) capabilities, CCAs can also saturate China’s People’s Liberation Army (PLA) defenses or autonomously deliver kinetic effects.

Although CCAs will be designed to perform various mission sets, this article will recommend CCA data readiness criteria for evaluating cognitive electronic warfare (CEW)-designated CCAs. It will use tactical, forward-edge CEW CCAs as a case study to address the most fundamental threat CCAs could face to challenge optimized HMT—effects against its data. Electronic warfare (EW) uses the electromagnetic spectrum (EMS) to deliver effects against the enemy’s use of the EMS. The next generation of EW is CEW weapons systems that use AI and ML to automate EW decisions involved in the detection, signal classification, prediction of enemy EW tactics, and countermeasure execution. Since freedom of EMS maneuver provides kinetic maneuver, future wars will be won or lost in the EMS, making CEW CCAs critical in potential conflict against the PRC.

Until recently, EMS threats did not change quickly, so the EW integrated reprogramming (EWIR) process could take months to reconfigure operational flight programs [9]. However, PRC EW assets have rapidly advanced, and responding to these assets requires faster updates than the EWIR enterprise can accomplish. The PLA’s strategists insist on establishing EMS dominance through EW against U.S. assets through the deception strategy of “hide the real and inject the false,” affecting data to mislead U.S. operators [10].

Consequently, the new CEW CCA capability that the United States is developing must have data that is accessible, secure, and appropriately configured to deliver intended battlefield effects. Despite this need, commanders lack a readiness reporting tool to assess a CEW CCA’s readiness to fulfill its intended capability based on the combat readiness of the data driving its behavior. Furthermore, commanders have neither a means of qualifying how much risk they assume by employing robotic wingmen nor a means of ethical accountability for their CCA employment decision.

The operational concept of combat-ready data must be crafted to optimize HMT with future CEW CCAs to rapidly generate EW effects at the forward edge of the battlespace. Since Joint Force commanders currently lack the ability to appropriately determine if data that will drive CEW CCAs can achieve their intended effects of denying adversary objectives without being compromised or introducing ambiguity to friendly forces, the following seven criteria should be used to evaluate the combat readiness of data using both MHC dimensions and HMT factors:

- Data security within the technological dimension.

- Data trust within the technological dimension.

- Data architecture within the technological dimension.

- Data understanding within the conditional dimension.

- Data accessibility within the human-machine interaction dimension.

- Data visibility within the human-machine interaction dimension.

- Data interoperability within the human-machine interaction dimension.

These seven criteria are not only the enabling objectives of the 2020 DoD Data Strategy, as illustrated in Figure 1, but they can also be uniquely understood in their application to data-driven CEW CCAs [11].

![Figure 1. DoD Data Strategy of Vision, Principles, Capabilities, and Goals (Source: U.S. DoD [11]).](https://dsiac.dtic.mil/wp-content/uploads/2025/08/hayhurst-figure-1.png)

Figure 1. DoD Data Strategy of Vision, Principles, Capabilities, and Goals (Source: U.S. DoD [11]).

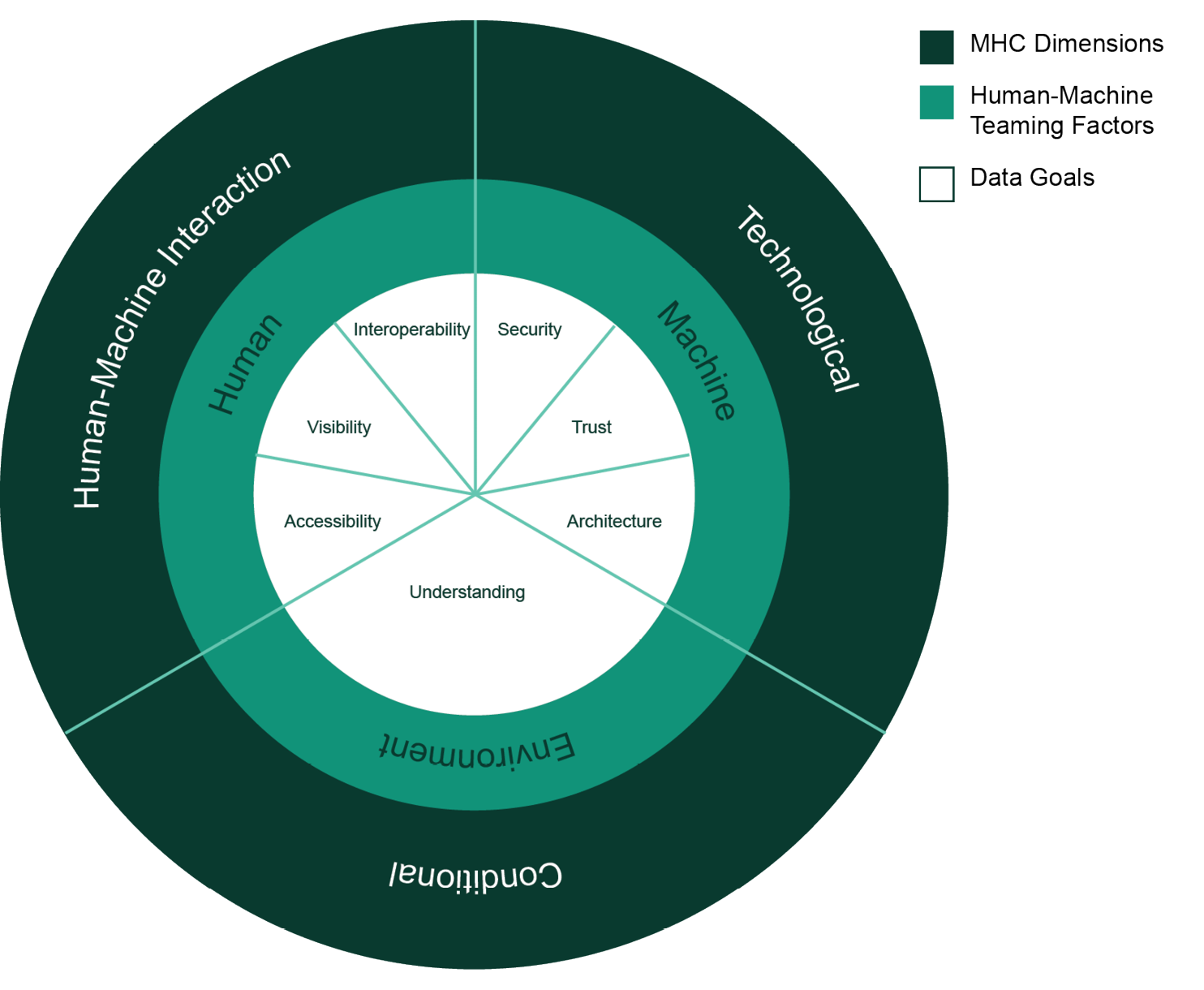

Figure 2 shows the proposed interrelationship between the MHC dimensions for ethical AWS employment, HMT factors for optimal AWS operations, and the 2020 DoD Data Strategy’s data goals for CCA data readiness. These concepts must be applied to future CCA use to ensure commanders are ethically accountable and risk-informed regarding CCA employment’s cost to achieving military objectives [12].

Figure 2. Interrelationship Diagram of MHC, HMT, and Data Goals (Source: C. Hayhurst, C. Covas-Smith, and P. Harris).

Dimensions of MHC

Three dimensions characterize MHC: technological, conditional, and human-machine interaction. Figure 2 highlights how specific HMT factors and CCA data readiness goals from the 2020 DoD Data Strategy nest within these three dimensions.

Technological

The first dimension of MHC is the technological dimension, defined by data security, trust, and architecture. These factors are critical to characterizing the machine variable influencing human trust in automated systems.

Data Security

Within the 2020 DoD Data Strategy, the DoD’s Chief Data Officer (CDO) governs the DoD’s data management efforts to ensure data security standards are met across the entire department [11]. The data security approach recommended in the 2020 DoD Data Strategy is granular privilege management, which uses identity, attributes, and permissions to govern access to data through public key cryptography. However, once cryptanalytically relevant quantum computer capabilities are available, public key algorithms will be vulnerable to adversary attacks [13].

Quantum computing systems threaten current encryption mechanisms that provide the basis of internet commerce and communication. The PRC has surged in its quantum technology research and investments. Chinese companies dominate in quantum cryptography patents, and China has taken the lead in the largest demonstrated network with quantum key distribution [14]. If nothing is done now to protect data streams, any encrypted data the PLA intercepts will be vulnerable to decryption in the future. CCAs with data protected by post-quantum cryptography (PQC) provide the best data security assurance [15]. Consequently, CCA data characterized by PQC security standards gives a commander the lowest risk to the mission within the data security criterion.
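The granular privilege management described above, in which identity, attributes, and permissions jointly govern access to data, can be illustrated with a minimal attribute-based check. This is a sketch only: the names `Subject`, `DataAsset`, and `may_access`, the clearance ordering, and the example roles are hypothetical and are not drawn from the DoD Data Strategy. The sketch also leaves out the cryptographic layer entirely, whether public key or post-quantum.

```python
from dataclasses import dataclass, field

# Hypothetical clearance ordering, for illustration only.
CLEARANCE_ORDER = {"UNCLASSIFIED": 0, "SECRET": 1, "TOP SECRET": 2}


@dataclass(frozen=True)
class Subject:
    identity: str                      # who is asking
    clearance: str                     # attribute of the requester
    roles: frozenset = field(default_factory=frozenset)


@dataclass(frozen=True)
class DataAsset:
    name: str
    classification: str                # attribute of the data
    permitted_roles: frozenset         # permissions attached to the data


def may_access(subject: Subject, asset: DataAsset) -> bool:
    """Grant access only if clearance covers the classification AND a role matches."""
    cleared = CLEARANCE_ORDER[subject.clearance] >= CLEARANCE_ORDER[asset.classification]
    permitted = bool(subject.roles & asset.permitted_roles)
    return cleared and permitted


threat_library = DataAsset("ew_threat_library", "SECRET", frozenset({"ew_officer", "commander"}))
ew_officer = Subject("capt.example", "SECRET", frozenset({"ew_officer"}))
logistician = Subject("sgt.example", "SECRET", frozenset({"logistics"}))

print(may_access(ew_officer, threat_library))   # True
print(may_access(logistician, threat_library))  # False
```

Both requesters hold the same clearance, so the decision turns on the permission attached to the data itself, which is the sense in which the privilege is granular.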

Data Trust

Data trust is the next factor that informs the technological dimension of MHC of CCAs. The 2020 DoD Data Strategy describes trustworthy data as having proper tags and pedigree metadata throughout its life cycle [11]. These actions build user confidence in the data due to enhanced data quality, which is critical to support operational decision-making. The Chief Digital and Artificial Intelligence Officer (CDAO) further clarifies metadata governance in the 2023 DoD Metadata Guidance [16]. The guidance assumes that DoD organizations “will apply metadata at the most appropriate time between creation and storage and maintain the tagging through the data assets’ life cycles” [16]. Trustworthy data is tagged data that supports the metadata functions of search and discovery, access control, correlation, audit, records management, and protection. CCAs that use data in compliance with the DoD Metadata Guidance give commanders the lowest risk in data trust.
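One way to picture a data trust check is to ask, for a given data asset, which of the six metadata functions listed above its tags can actually support. The sketch below is illustrative only: the tag names in `REQUIRED_TAGS`, the function `unsupported_functions`, and the sample record are assumptions made for this example and are not fields defined by the DoD Metadata Guidance.

```python
# Hypothetical tag assumed to support each metadata function named in the text.
REQUIRED_TAGS = {
    "search and discovery": "title",
    "access control": "classification",
    "correlation": "collection_id",
    "audit": "pedigree",
    "records management": "retention_class",
    "protection": "handling_caveat",
}


def unsupported_functions(metadata: dict) -> list:
    """Return the metadata functions whose assumed tag is missing or empty."""
    return [
        function
        for function, tag in REQUIRED_TAGS.items()
        if not metadata.get(tag)
    ]


emitter_record = {
    "title": "Emitter intercept 0042",
    "classification": "SECRET",
    "collection_id": "sortie-17",
    "pedigree": ["sensor:pod-3", "processed:reachback-cell"],
    # "retention_class" and "handling_caveat" were never applied
}

print(unsupported_functions(emitter_record))
# ['records management', 'protection']
```

An empty result would correspond to fully tagged data; any entry in the list marks a gap that a commander's staff could weigh when rating the data trust criterion.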

Data Architecture

The third element of the technological dimension is data architecture. For CEW CCAs, a robust data architecture would enable the rapid assessment of CCA employment to determine the effectiveness of its adversary signal translations and countermeasure decisions. The data architecture that the Air Force will field that satisfies this element is the Advanced Battle Management System (ABMS), which allows data to be shared across multiple platforms as part of the DoD’s Joint All Domain Command and Control effort [17].

The success of the ABMS data architecture depends on rigorous adherence to data standards that provide a common application environment and a set of flexible protocols [18]. This architecture would provide CCAs and their human operators with a totality of data to be used at the strategic level and tactical edge so the commander’s intent can be met with relevant data at the location most applicable to CCA operation. Furthermore, a robust ABMS architecture would sense and synthesize data through AI/ML-based analytics at expected intervals, provisioning CCAs with as relevant and accurate a data threat picture as possible before reaching degraded or denied A2/AD environments.

To improve the speed and quality of its own information processing, the PLA is also pursuing a “system of systems” network under its informatized and intelligentized warfare concepts [2]. Both senior DoD leaders and PLA officials anticipate victory as ultimately belonging to the side with decision superiority through sensing and analyzing data more rapidly and accurately than their opponents. Data immediately tagged with a common data standard, cataloged, and securely stored within ABMS optimizes CCAs’ potential in the HMT construct, giving commanders the lowest risk in the data architecture criterion.

Conditional

The second dimension of MHC is the conditional dimension, defined by data understanding within its operating environment. Data understanding manifests as physical limitations embedded with CCA algorithms that constrain the timing, locality, and targeting of CEW operations. The success of a CEW CCA depends on its interpretation of the conditions of its operational environment, which will inevitably be mired in the fog of war.

Although the range of a CCA’s actions is bound by its algorithmic output, it must be capable of operating in environments lacking well-defined contexts. The critical question regarding how the CCA interprets its operating environment is this: how can a machine be trained to suspect the truthfulness of its input and even its own training?

The concept of deception is antithetical to AI as a method of rapidly compiling and analyzing data. Data-saturated environments with true and false inputs must be considered when developing CCAs’ data examination and processing cycles. Robust data understanding that detects deception is critical against AI-advanced adversaries like the PLA; standardized countermeasure EW tactics by CCAs will inevitably create opportunities for the PLA to use deception once discovered [19]. In other words, CEW CCAs must recognize when corrupted sensors introduced by the PLA are feeding them poisoned data.

One way to mitigate this vulnerability is through the CEW CCA’s internalized AI adjudication to determine what deception is and is not through variable validity testing. However, standardizing these tests would provide opportunities for the PLA to deceive the test mechanisms.
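The article does not specify what a validity test would look like. As one assumed and deliberately simple form, the sketch below cross-checks redundant sensors that report the same quantity and flags any reading far from the group median, using the median absolute deviation (MAD). The function name `flag_suspect_sensors`, the sensor names, the frequency values, and the threshold of 3.5 are all hypothetical.

```python
from statistics import median


def flag_suspect_sensors(readings: dict, threshold: float = 3.5) -> list:
    """Flag sensors whose reading deviates strongly from the group median.

    Uses the median absolute deviation (MAD), which a single corrupted
    sensor cannot shift as easily as it can shift a mean.
    """
    values = list(readings.values())
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # At least half the sensors agree exactly; anything different is suspect.
        return [name for name, v in readings.items() if v != center]
    return [
        name
        for name, v in readings.items()
        if abs(v - center) / mad > threshold
    ]


# Hypothetical emitter frequency estimates (MHz) from five sensors.
readings = {
    "pod_a": 9410.0,
    "pod_b": 9412.0,
    "pod_c": 9409.0,
    "pod_d": 9411.0,
    "pod_e": 9875.0,  # outlier: possibly a spoofed or corrupted feed
}

print(flag_suspect_sensors(readings))  # ['pod_e']
```

A fixed, published threshold like this one is exactly the kind of standardized test the paragraph above warns an adversary could learn to evade, and a majority of corrupted sensors would defeat it outright.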

Another medium-risk option to enhance the CCA’s data understanding is by having committed, reachback EW experts, including engineers, EW officers, combat systems officers, and weapons systems officers, to analyze when, where, and how quickly the CEW CCA detected EW changes in the environment and whether its waveform countermeasures were successful. Deployed EW experts, as opposed to in-garrison, are more beneficial due to their proximity to the forward edge of the battlespace. This would allow them to deliver tactically relevant updates directly to the CCAs without relying on vulnerable datalinks. The closer human operators are to CCAs, the easier the logistics needed to adjust the CCAs’ algorithms to act on the most accurate information, providing commanders the lowest risk in the data understanding criterion.

Human-Machine Interaction

The third dimension of MHC is the human-machine interaction dimension, defined by data accessibility, visibility, and interoperability.

Data Accessibility

Data accessibility entails the ability to access data for CCA mission execution. Data must be visible and accessible in a timely and relevant manner, at a minimum, to the authorizing commander. By having the authority to access the data driving the CCAs, the commander can assess the data’s reliability in driving CEW CCA systems. Data accessibility risk is lowered when data is accessible to both the theater commander employing the CCAs and the communities of interest (COIs) engaged in delivering the CCA data and assessing its data use in after-action studies. These COIs range from the in-garrison service intelligence agencies to the deployed EW professionals in theater. Data accessibility to all command echelons and stakeholders provides the lowest risk within the data accessibility criterion.

Data Visibility

The second factor of the human-machine interaction dimension, data visibility through data interfaces, significantly influences the quality of HMT. Current demonstrations of pilot interactions with CCA prototypes leverage handheld tablets to send and receive operational data between the pilot and the machine [4]. Data that feeds CCA algorithms and manifests through CCA mission execution should ideally integrate with the Air Force’s future ABMS infrastructure [20]. However, creating an effective user interface for ABMS data that feeds CCAs is a monumental challenge due to the massive amounts of sensor-to-shooter data expected to be collected [21].

Furthermore, as the amount of data fueling CCA operations grows, the massive data influx to the human operator can lead to cognitive overload, complacency, and loss of alertness [4]. Even though data interfaces with monitorable dashboards or a graphical user interface can be designed to alleviate this information overload, the traditional screen interfaces on tactical, 14-inch tablets and computers have physical constraints regarding their ability to display extensive real-time data to CCA human monitors.

One way to relieve humans from user interface limitations is by converging CCAs with neurotechnology, allowing bidirectional interaction between the human nervous system and the autonomous machine. Brain-computer interfaces (BCIs) would integrate the control of loyal wingmen into the human decision-making processes, accelerating their OODA loop and removing the task of designing CCA interfaces [4]. The U.S. Defense Advanced Research Projects Agency (DARPA) invests millions of dollars annually in BCI projects. DARPA’s most recent noninvasive BCI program is its Next-Generation Nonsurgical Neurotechnology, which “aims to develop high-performance, bi-directional brain-machine interfaces for able-bodied service members” [22]. This interface enables technology to “control unmanned aerial vehicles and active cyber defense systems or teaming with computer systems to successfully multitask during complex military missions” [22]. The practical application of BCI research to CCA user interfaces provides the lowest risk of the data visibility criterion to authorizing commanders.

Data Interoperability

The third criterion to consider when evaluating a CCA’s human-machine interaction dimension is its data interoperability. A critical concern for effective HMT is the ability of the data driving the weapons system to be interoperable within the larger system-of-systems context of ABMS. Data interoperability will ensure data flow and connectivity as ABMS evolves and expands, allowing data to fuel dynamic reassignment between humans and CCAs on the battlefield [7]. Data interoperability is enabled through common data standards used across not just the Air Force but also the other services, allies, and partners, with appropriately labeled releasability caveats on the data. All of these elements of data interoperability collectively provide commanders with the lowest risk of the data interoperability criterion.

Data Readiness Reporting Tool

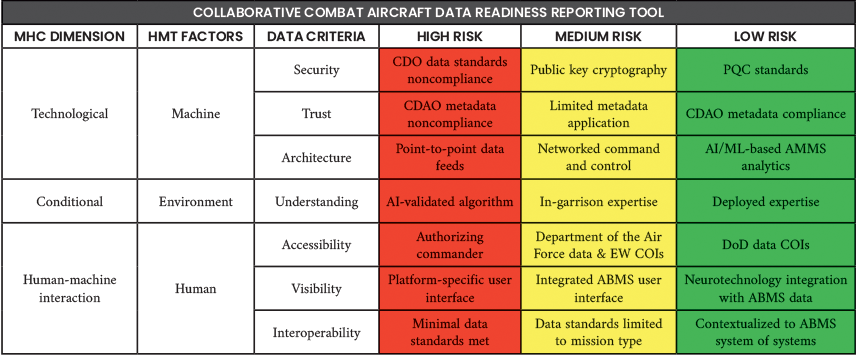

A summary of all seven data criteria and the respective standards that must be met to assign high, medium, or low risk for CEW CCA data readiness is shown in Table 1. Commanders should use this tool to assess how much risk to mission they assume when employing AWS on the battlefield and understand how to best mitigate that risk with the proposed personnel, policy, and technology recommendations included in each risk level description. This tool provides ethical accountability of commander decisions by incorporating MHC over AWS in all the criterion assessments.

Table 1. Proposed Robotic Wingmen Readiness Tool (Source: J. Siewert).

The proposed robotic wingmen readiness tool helps to ensure that CCA operations are not opaque to authorizing commanders. Rather than viewing CCA algorithm-driven behavior as a black box, data readiness ratings based on MHC dimensions position commanders to take responsibility for the AWS’s actions. The “low risk” data criteria column provides a strategic direction for the DoD to optimize HMT through the ethical balance of autonomy and human interaction.
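The structure of such a tool can be sketched as a small data model: seven criteria, each mapped to its MHC dimension as listed earlier in the article, each rated low, medium, or high. The sketch below is illustrative, not a rendering of Table 1. The names `Risk`, `CRITERIA`, and `summarize` and the sample ratings are hypothetical. The rollup rule is also an assumption: the article does not prescribe how criterion ratings combine, so the sketch takes the worst rating in each dimension.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Criterion -> MHC dimension, as listed in the article.
CRITERIA = {
    "security": "technological",
    "trust": "technological",
    "architecture": "technological",
    "understanding": "conditional",
    "accessibility": "human-machine interaction",
    "visibility": "human-machine interaction",
    "interoperability": "human-machine interaction",
}


def summarize(ratings: dict) -> dict:
    """Roll per-criterion ratings up to each MHC dimension and overall.

    Assumption: a dimension is only as ready as its weakest criterion,
    so the rollup takes the highest (worst) risk.
    """
    missing = set(CRITERIA) - set(ratings)
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    by_dimension = {}
    for criterion, dimension in CRITERIA.items():
        current = by_dimension.get(dimension, Risk.LOW)
        by_dimension[dimension] = max(current, ratings[criterion])
    by_dimension["overall"] = max(by_dimension.values())
    return by_dimension


# Hypothetical ratings for one CEW CCA data package.
ratings = {
    "security": Risk.MEDIUM,
    "trust": Risk.LOW,
    "architecture": Risk.LOW,
    "understanding": Risk.HIGH,
    "accessibility": Risk.LOW,
    "visibility": Risk.MEDIUM,
    "interoperability": Risk.LOW,
}

summary = summarize(ratings)
for name, risk in summary.items():
    print(f"{name}: {risk.name}")
```

A worst-case rollup is a conservative choice; a staff could instead weight criteria by mission type, but any such weighting would need to be defined by the tool's owners rather than inferred from this sketch.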

Although this article uses CEW CCAs as a vignette to explore the combat readiness of data, the seven proposed data criteria also apply to other robotic wingmen the DoD is fielding. The option for human control and verification is paramount in offensive autonomous weapons that can deliver lethal kinetic effects.

Conclusions

Legacy readiness reporting criteria are insufficient to assess the combat readiness of the DoD’s next generation of robotic wingmen. An appropriate framework to evaluate future robotic wingman readiness is through the three dimensions of MHC of autonomous systems, all of which characterize the data ultimately driving the AWS operations. Robotic wingmen assigned the lowest risk of each data criterion within the proposed CCA data readiness tool position CCAs to achieve optimal HMT with their human wingmen.

To realize the vision of this data readiness tool, future commanders and their staff will need to be data literate to accurately assess each data criterion. Data literacy and digital talent must no longer be siloed to specific Air Force Specialty Codes but rather be foundational to all future airmen. The Air Force must adopt a new readiness tool to reasonably hold commanders accountable for employing CCAs on the battlefield, and the DoD can use the Air Force’s tool as its benchmark for assessing AWS across all services. As the DoD embraces AWS to meet the pacing challenge of China, service leaders must examine new ways to organize, train, and equip their members to best team with their future robotic wingmen. As the 26th Secretary of the Air Force, Frank Kendall, stated in his 2022 congressional hearing addressing Air and Space Force modernization efforts, “Change is hard, but losing is unacceptable” [23].

References

- U.S. DoD. “National Defense Strategy.” Washington, DC: Office of the Secretary of Defense, p. 127, October 2022.

- U.S. DoD. “Military and Security Developments Involving the People’s Republic of China.” 2023 Annual Report to Congress, Washington, DC: Office of the Secretary of Defense, pp. 40 and 95, 26 October 2022.

- Bode, I., and H. Huelss. Autonomous Weapons Systems and International Norms. London, UK: McGill-Queen’s University Press, 2022.

- Rickli, J.-M. “Human-Machine Teaming in Artificial Intelligence-Driven Air Power: Future Challenges and Opportunities for the Air Force.” The Air Power Journal, Fall 2022, vol. 8, https://www.diacc.ae/resources/2022_Jean_Marc_Rickli_Federico_Mantellassi_Human-Machine_Teaming_Air_Power.pdf, accessed on 12 March 2024.

- United States Government Accountability Office (GAO). “Analysis of Maintenance Delays Needed to Improve Availability of Patriot Equipment for Training.” GAO Report 18-447, Washington, DC, p. 7, June 2018.

- Sanders, T. L., et al. The Neurobiology of Trust. Cambridge University Press, chapter 4, pp. 78 and 79, https://www.cambridge.org/core/books/abs/neurobiology-of-trust/trust-and-human-factors/D51AD892F20EDA9404108BD6649489E0#, 9 December 2021.

- Madni, A. M., and C. C. Madni. “Architectural Framework for Exploring Adaptive Human-Machine Teaming Options in Simulated Dynamic Environments.” Systems 6, no. 4, Spring 2018, pp. 3 and 15, https://www.mdpi.com/2079-8954/6/4/44, accessed on 5 January 2024.

- Air Force Technology. “Collaborative Combat Aircraft.” https://www.airforce-technology.com/projects/collaborative-combat-aircraft-cca-usa/?cf-view&cf-closed, accessed on 7 January 2024.

- Vedula, P., et al. “Outsmarting Agile Adversaries in the Electromagnetic Spectrum.” RAND Report A981-1, Santa Monica, CA: RAND Corporation, p. 5, 19 January 2023.

- Clay, M. “To Rule the Invisible Battlefield: The Electromagnetic Spectrum and Chinese Military Power.” War on the Rocks, https://warontherocks.com/2021/01/to-rule-the-invisible-battlefield-the-electromagnetic-spectrum-and-chinese-military-power/, 22 January 2021.

- U.S. DoD. “DoD Data Strategy.” Washington, DC: Office of the Secretary of Defense, pp. 1, 5, and 8, 30 September 2020.

- Joint Chiefs of Staff. Joint Publication (JP) 5-0. “Joint Planning,” p. xiv, 1 December 2020.

- National Cybersecurity Center of Excellence. “Migration to Post-Quantum Cryptography.” NIST, https://www.nccoe.nist.gov/sites/default/files/2023-08/mpqc-fact-sheet.pdf, August 2023.

- Stefanick, T. “The State of U.S.-China Quantum Data Security Competition.” Brookings Institution, https://www.brookings.edu/articles/the-state-of-u-s-china-quantum-data-security-competition/, 18 September 2020.

- Cybersecurity and Infrastructure Security Agency. “Quantum-Readiness: Migration to Post-Quantum Cryptography.” Washington, DC, 21 August 2023.

- U.S. DoD. “DoD Metadata Guidance, Version 1.0.” Washington, DC: Chief Digital and Artificial Intelligence Officer, pp. 4 and 5, January 2023.

- Hoehn, J. R. “Advanced Battle Management System.” Congressional Research Service Report IF11866, vol. 5, 15 February 2022.

- National Academies of Sciences, Engineering, and Medicine. Advanced Battle Management System: Needs, Progress, Challenges, and Opportunities Facing the Department of the Air Force. Washington, DC: The National Academies Press, p. 37, 2022.

- Tangredi, S. J., and G. Galdorisi. AI at War: How Big Data, Artificial Intelligence, and Machine Learning Are Changing Naval Warfare. Annapolis, MD: Naval Institute Press, pp. 300, 301, 310, and 311, 2021.

- Johnson, T. R. “Emerging Tanker Roles and Risks in the Advanced Battle Management System Era.” Wild Blue Yonder, https://www.airuniversity.af.edu/Wild-Blue-Yonder/Article-Display/Article/2652095/emerging-tanker-roles-and-risks-in-the-advanced-battle-management-system-era/, accessed on 15 January 2024.

- Wolfe, F. “Effective User Interfaces for ABMS a ‘Momentous Challenge,’ U.S. Space Force Says.” Defense Daily, https://www.defensedaily.com/effective-user-interfaces-abms-momentous-challenge-u-s-space-force-says/space/, accessed on 14 October 2023.

- Willis, A. “Next-Generation Nonsurgical Neurotechnology.” Defense Advanced Research Projects Agency, https://www.darpa.mil/program/next-generation-nonsurgical-neurotechnology, accessed on 16 February 2024.

- Secretary of the Air Force Public Affairs. “Kendall, Brown, Raymond Tell Congress $194 Billion Budget Request Balances Risks, Quickens Transformation.” U.S. Air Force News, https://www.af.mil/News/Article-Display/Article/3012814/kendall-brown-raymond-tell-congress-194-billion-budget-request-balances-risks/, accessed on 13 December 2023.

Biographies

Christina Hayhurst is an active-duty U.S. Air Force (USAF) intelligence officer and instructor at the Squadron Officer School, with a follow-on assignment at the School of Advanced Air and Space Studies. Her operational assignments have provided intelligence support to Air Force bomber missions, Warfighter customers of the National Air and Space Intelligence Center, acquisition professionals within the Air Force Life Cycle Management Center, and the Air Force Special Tactics enterprise. Maj. Hayhurst holds a bachelor’s degree in biochemistry from the USAF Academy, a master’s degree in international security and economic policy from the University of Maryland, and a master’s degree in military operational art and science from the Air Command and Staff College.

Christine Covas-Smith is the director of the Air Education and Training Command’s Enterprise Learning Engineering (ELE) Center of Excellence at Joint Base San Antonio, Randolph Air Force Base, TX. She is leading the implementation of ELE as a sense-making framework for USAF development, including increasing competency-based learning through systematic application of evidence-based principles, scientific methods, and practices from the learning sciences and education research and systems thinking to modernize learning. Dr. Covas-Smith holds a Ph.D. in applied psychology and cognitive action perception from Arizona State University.

Patricia Harris serves as the Writing Center Program lead at Air University and is a member of a core research group at the University of Bergen that focuses on the intersections of learning design, memory formation, and AI. She tested integrated perception ML models for Creative Synthetic and was a former professor, associate dean, technology director, and co-instructor for the Future Ideas and Weapons Research Task Forces at the Air War College. She wrote a book chapter focused on feedback strategies for Enlisted Professional Military Education that will be published by the U.S. Army Upgrade Program in 2025. Ms. Harris holds a master’s degree in English literature and a Ph.D. in rhetoric and media studies.