Summary

The composition of terrain types and the variety of objects and artifacts present in a real environment are constantly evolving. Not every aspect of the environment can be learned, modeled, and synthesized a priori in an artificial intelligence (AI)-enabled decision-making pipeline. If collaboration can be enabled among several robots deployed in different remote zones, it is possible to develop a generalized terrain and object detection model that captures greater uncertainty and variability in an environment. Conventionally, this requires sharing data between agents and servers; however, doing so may increase the risk of an adversarial attack.

Federated learning can be a useful approach to mitigate this issue. The object detection model can be trained using federated learning, in which training data is not explicitly shared between the robots performing terrain reconnaissance in various geolocations. The robots can learn generalized features while training the model onboard and share model updates from one location to another, supporting collaborative training for distributed learning and real-time situational awareness. In addition, simulating physical autonomous systems with virtual entities allows complex interactions between collaborating agents to be explored at scale, and virtual-physical co-simulation reduces the cost of building physical environments populated by large numbers of autonomous entities. This article presents a high-level overview of these approaches and a case study addressing the challenges of real-time adaptability and experiment scalability in multiagent teaming.

Introduction

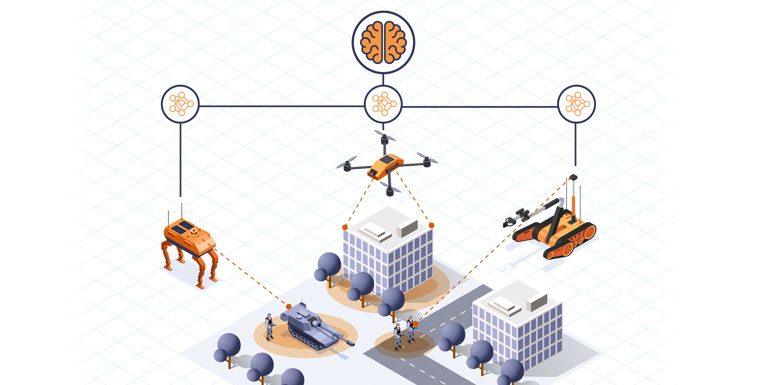

Current conflicts in Eastern Europe and the Middle East have demonstrated a new reliance on autonomous assets to augment various tasks in highly lethal battlefield environments. It is therefore imperative to understand how such robotic agents can communicate and collaborate efficiently and effectively among themselves, as well as with human decision-makers, to ensure battlefield dominance in real time. For example, the Ukrainian military has employed hunter-killer drone teams in which one unmanned aerial vehicle (UAV) finds enemy positions and another UAV either drops a munition or is itself used as an expendable kinetic munition.

Multiagent teaming (MAT) remains relatively unexplored because investigating it properly at a realistic scale requires expensive resources. Optimizing asset deployment over a vast area of interest (AOI) requires simulation at scales, in environments (e.g., open pastures and dense urban settings), and over terrain features (e.g., vegetation, forests, and deserts) comparable to those of the real-world scenario. To that end, virtual reality can bridge the scalability gap by augmenting real-world assets and physical environments when determining the resources required to achieve the desired effects.

To achieve the maximum effect of MAT, it is imperative to understand how these collaborating autonomous agents can communicate with each other and complete tasks efficiently. Emulating this scenario in the virtual world and combining it with information obtained from various physical sources of intelligence can help reveal the perception capabilities and collaborative behaviors of autonomous assets needed for mission success in the real world. Simulating physical autonomous systems with virtual entities allows complex interactions between collaborating agents to be explored at scale. Furthermore, virtual-physical co-simulation reduces the cost of building physical environments populated by large numbers of autonomous entities.

Collaborative Situational Awareness with Multiagent Federated Learning

Without sharing all the data, an object detection model learned in one environment (e.g., Site 1) can be transferred to another remote location (e.g., Site 2). If collaboration can be enabled among several robots deployed in different zones, it is possible to develop a generalized terrain and object detection model that captures greater uncertainty and variability in an environment. Conventionally, this requires sharing data between agents and servers; however, doing so may introduce a security risk.

Federated class-incremental learning can be a useful approach to mitigate this issue. It allows multiple clients in a distributed environment to learn models collaboratively from evolving data streams in which new classes arrive continually at each client. This technique helps accelerate machine-learning (ML) model training without sharing raw data or complete pretrained models across clients; only the weighted average of the model parameters is shared between them. This supports collaborative training by transferring knowledge from one geolocation to another while preserving privacy, minimizing opportunities for data breaches, increasing the robustness of ML models, and substantially reducing training overhead and time.
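The weighted-averaging step can be sketched as follows. This is a minimal FedAvg-style example, assuming that each client's parameters are weighted by its number of local training samples and that a model is a dictionary mapping parameter names to flat lists of floats. The `fedavg` function and this model format are illustrative assumptions, not the implementation used in this work.

```python
from typing import Dict, List

# Hypothetical model format: parameter name -> flat list of floats.
Model = Dict[str, List[float]]


def fedavg(client_models: List[Model], num_samples: List[int]) -> Model:
    """Return the sample-weighted average of client parameters (FedAvg-style).

    Only parameters leave each client; raw training data never does.
    """
    if not client_models or len(client_models) != len(num_samples):
        raise ValueError("need one sample count per client model")
    total = sum(num_samples)
    averaged: Model = {}
    for name in client_models[0]:
        length = len(client_models[0][name])
        averaged[name] = [
            sum(m[name][i] * n for m, n in zip(client_models, num_samples)) / total
            for i in range(length)
        ]
    return averaged


if __name__ == "__main__":
    site_1 = {"w": [1.0, 2.0], "b": [0.0]}
    site_2 = {"w": [3.0, 4.0], "b": [1.0]}
    # Site 2 trained on three times as much data, so it receives 3/4 of the weight.
    print(fedavg([site_1, site_2], [100, 300]))  # {'w': [2.5, 3.5], 'b': [0.75]}
```

Weighting by sample count lets a site that observed more examples pull the shared model further toward its local solution, while sites with little data still contribute.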

Investigating a remote zone reconnaissance scenario by relying on multiple distributed virtual remote sites using federated and continual learning is a novel research direction. The object detection model can be trained using federated learning in which training data will not be explicitly shared between robots performing terrain reconnaissance in various geolocations. The robots should learn generalized features while training the model onboard and share the model updates from one location to another to support the collaborative training for distributed situational awareness.

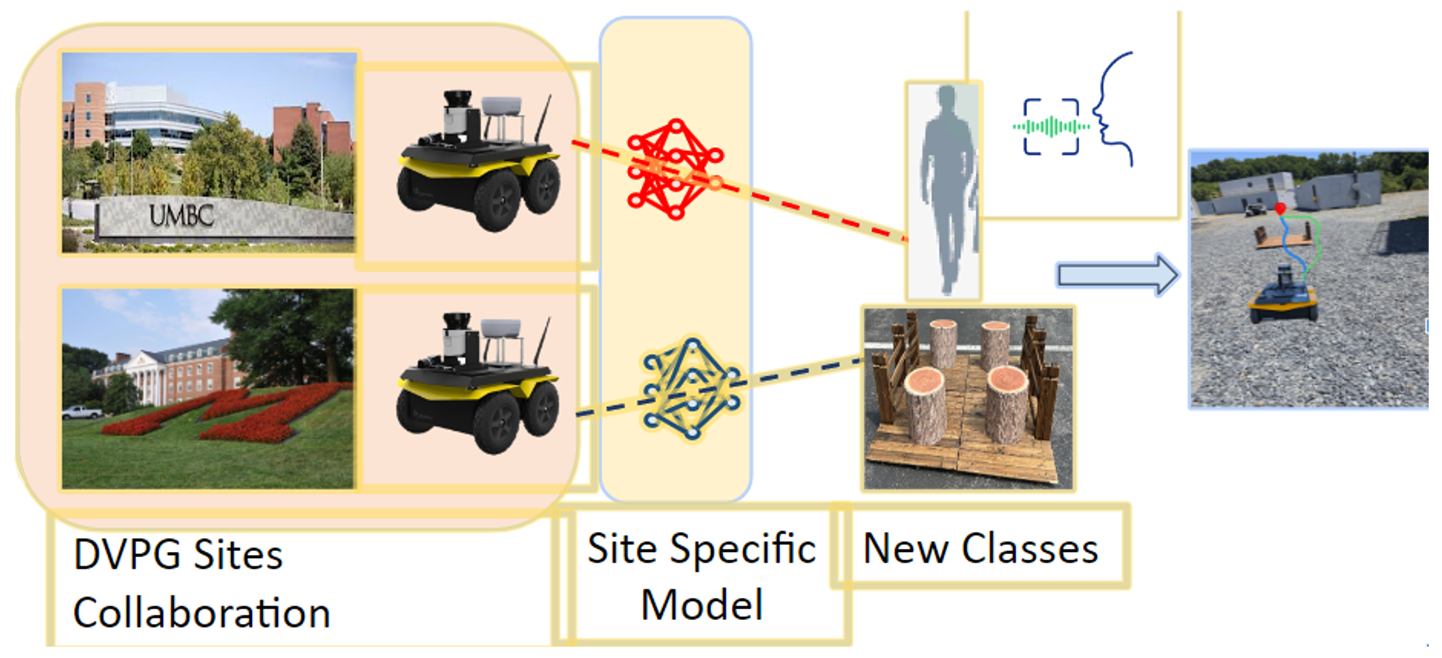

For example, an object such as a bridge seen at one location can be learned by the autonomous agents there and transferred, using federated learning with minimal model parameters, to another location whose autonomous agents have not been trained to detect such a bridge, as shown in Figure 1. Collaborative training thus enables the bridge to be detected at both locations by securely extending the learning to other autonomous agents in real time. This approach enables real-time collective situational awareness with minimal computing and training overhead, facilitating distributed and collaborative model training and remote learning.

Figure 1. Secure Collaborative Training for ML Model Building (Source: N. Roy).

Seamless Interoperability and Scalability Across Heterogeneous Assets

Heterogeneity across robotic assets presents challenges for collaborative tasks. Beyond the inherent differences between unmanned ground vehicles (UGVs) and UAVs, unmanned X vehicles are manufactured by different vendors and run different versions of autonomy stacks and software packages. For example, for simultaneous localization and mapping (SLAM), Clearpath Robotics' Jackal UGVs use Google's Cartographer package for the Robot Operating System (ROS); Husarion's ROSbots employ Hector SLAM packages; and ModalAI RB5/Seeker drones rely on visual-inertial odometry for localization and on volumetric pixels (voxels) for the mapping elements of their SLAM implementation. Achieving interoperability across these packages while reliably transmitting packets from one agent to another is a nontrivial research and development task.

A collaborative SLAM infrastructure involves data from multiple heterogeneous, interoperable agents with different views of the same environment [1, 2]. The local views of the different agents are combined to create a global view of the sensed environment [3]. Calibrating and tuning SLAM parameters and sensitivity factors while creating the global map of an area is critical for navigating terrain successfully with multiple robots. To that end, software interoperability across ROS in virtual and physical environments is established, enabling seamless connectivity and communication between multiple heterogeneous agents.
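As a minimal illustration of combining local views into a global map, the sketch below fuses per-agent 2-D occupancy grids by summing log-odds in a shared global frame. It assumes each agent's map origin is already known in global cell coordinates (translation only); real collaborative SLAM must also estimate relative rotation and correct drift. The function names and grid format are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
Grid = Dict[Cell, float]  # cell -> log-odds that the cell is occupied


def merge_local_maps(local_maps: List[Tuple[Grid, Cell]]) -> Grid:
    """Fuse per-agent occupancy grids into one global grid.

    Each entry is (local_grid, (dx, dy)), where (dx, dy) is the agent's map
    origin in global cell coordinates (assumed known; translation only).
    Summing log-odds treats the agents' observations as independent evidence.
    """
    global_map: Grid = {}
    for grid, (dx, dy) in local_maps:
        for (x, y), log_odds in grid.items():
            key = (x + dx, y + dy)
            global_map[key] = global_map.get(key, 0.0) + log_odds
    return global_map


def occupancy_probability(log_odds: float) -> float:
    """Convert log-odds back to an occupancy probability in [0, 1]."""
    return 1.0 / (1.0 + math.exp(-log_odds))


if __name__ == "__main__":
    agent_a = {(0, 0): -1.0, (1, 0): 1.0}
    agent_b = {(0, 0): 1.0, (1, 0): -2.0}  # agent B's origin is global cell (1, 0)
    merged = merge_local_maps([(agent_a, (0, 0)), (agent_b, (1, 0))])
    print(merged)  # {(0, 0): -1.0, (1, 0): 2.0, (2, 0): -2.0}
```

Global cell (1, 0) is seen as occupied by both agents, so its evidence accumulates, while cells seen by only one agent keep that agent's estimate.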

Virtual-Physical Co-simulation of Autonomous Navigation

Understanding the nature of terrain even before the deployment of autonomous robotic assets in the environment is important. Virtual-physical co-simulation allows optimal path planning to be determined in an AOI. Such a priori knowledge minimizes the risks and resources for allocating expensive autonomy assets in vast regions prior to deployment.

The first step in the simulation is to deploy all the autonomous agents in the virtual setting to simulate the path planning and sensing coverage needed in the AOIs. Models of the specific UGVs/UASs can be deployed in Gazebo and Unity, and synthetic data can be generated from all their sensors, such as light detection and ranging (LiDAR), red-green-blue (RGB) cameras, and inertial measurement units, for mapping and navigation.

Second, a mixed version of this setup is emulated, with some agents in the virtual environment covering one side of the terrain and others in the physical environment covering the other side. This setup is used to design optimal paths that minimize sensing overlap (and maximize coverage) while detecting objects, obstacles, adversaries, and other artifacts, so that a swarm of UGVs and UASs can make intelligent collaborative decisions. The interactions of robotic assets with the virtual-physical environments are modeled accurately to represent real-world behavior, and the multiagent perception-action-communication loop, which cuts across virtual and physical agents, is executed in the virtual-physical environment. With these factors in place, a robotic agent deployment planner can be designed that achieves optimal path planning, sensing, and coverage of an area in the presence of adversaries.
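One simple way to split coverage between virtual and physical agents without sensing overlap is sketched below: the AOI is divided into equal strips, and each agent follows a back-and-forth (boustrophedon) pattern whose passes are spaced one sensor swath apart. This is an illustrative stand-in for the deployment planner described above, assuming a rectangular, obstacle-free AOI and a fixed swath width; `partition_aoi` and `lawnmower_path` are hypothetical names.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float]


def partition_aoi(width: float, num_agents: int) -> List[Tuple[float, float]]:
    """Split the AOI's x-extent into equal, non-overlapping strips, one per agent."""
    strip = width / num_agents
    return [(i * strip, (i + 1) * strip) for i in range(num_agents)]


def lawnmower_path(x_min: float, x_max: float, height: float, swath: float) -> List[Waypoint]:
    """Back-and-forth passes along y, centered in swath-wide columns.

    Adjacent passes touch but do not overlap. If the strip width is not a
    multiple of the swath, the last column spills slightly past x_max.
    """
    path: List[Waypoint] = []
    x = x_min + swath / 2.0
    going_up = True
    while x < x_max:
        start_y, end_y = (0.0, height) if going_up else (height, 0.0)
        path.append((x, start_y))
        path.append((x, end_y))
        x += swath
        going_up = not going_up
    return path


if __name__ == "__main__":
    # Hypothetical AOI: 40 m wide, 10 m tall, one virtual and one physical agent.
    for agent, (lo, hi) in zip(["virtual_ugv", "physical_ugv"], partition_aoi(40.0, 2)):
        print(agent, lawnmower_path(lo, hi, height=10.0, swath=10.0))
```

With a 10 m swath, the virtual agent makes passes at x = 5 and x = 15, and the physical agent at x = 25 and x = 35, so the two halves are covered without overlap.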

An adaptive learning system within autonomous robotic agents is developed and integrated to enable real-time strategy adjustment in response to dynamic environmental changes and unforeseen challenges. With a focus on advancing the intelligence and adaptability of autonomous systems, a framework is created in which UGVs/UASs not only follow preplanned paths and strategies but also learn from the environment and adjust their actions in real time. This adaptive learning is crucial in unpredictable environments where conditions can change rapidly or new obstacles and threats can emerge unexpectedly. Therefore, a key step is to develop a hierarchical, objective-driven navigation system based on topological maps and novel learning algorithms to enable efficient path-finding and decision-making in sparse-reward environments, where feedback (rewards or penalties) to autonomous agents is minimal.
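The topological-map layer of such a hierarchical navigation system can be sketched as a graph search over named places: a high-level planner picks the sequence of places, and a low-level controller (not shown) drives between them. The sketch below uses Dijkstra's algorithm as a stand-in; it does not include the learning component for sparse-reward settings, and the place names and edge costs are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple

# Topological map: place -> list of (neighboring place, traversal cost).
TopoMap = Dict[str, List[Tuple[str, float]]]


def plan_route(graph: TopoMap, start: str, goal: str) -> Tuple[float, List[str]]:
    """Return (total_cost, [start, ..., goal]) via Dijkstra, or (inf, []) if unreachable."""
    frontier: List[Tuple[float, str]] = [(0.0, start)]
    best: Dict[str, float] = {start: 0.0}
    parent: Dict[str, str] = {}
    while frontier:
        cost, node = heapq.heappop(frontier)
        if node == goal:
            path = [goal]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return cost, path[::-1]
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry; a cheaper route was already found
        for neighbor, step in graph.get(node, []):
            new_cost = cost + step
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                parent[neighbor] = node
                heapq.heappush(frontier, (new_cost, neighbor))
    return float("inf"), []


if __name__ == "__main__":
    topo = {
        "staging": [("road", 2.0)],
        "road": [("bridge", 3.0), ("ford", 5.0)],
        "bridge": [("objective", 1.0)],
        "ford": [("objective", 1.0)],
    }
    print(plan_route(topo, "staging", "objective"))
    topo["road"] = [("ford", 5.0)]  # bridge reported blocked: drop that edge and replan
    print(plan_route(topo, "staging", "objective"))
```

Here the route first crosses the bridge at cost 6; once the road-to-bridge edge is removed, the planner falls back to the ford at cost 8. Replanning on a small topological graph like this is cheap, which is one reason such maps suit real-time adjustment.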

Reducing Topic Dissemination Overhead

In a virtual-physical co-simulation environment, communication and information exchange between physical and virtual space are established in multiagent scenarios to perform collaborative tasks such as object detection, scene perception, navigation, and route planning. Virtual and physical autonomous agents share a common world model as if they were collaborating in the real environment. When sharing data, it is imperative to reduce ROS message-passing and control overhead, maximizing information gain and computing efficiency while minimizing communication overhead, because real-world communication networks can be brittle and bandwidth-limited.

One of the main challenges in virtual-physical co-simulation is handling the underlying packet delays between simulators (Unity and Gazebo) and physical autonomous assets. Consider multiagent SLAM tasks in which several autonomous agents explore and map an environment. Each agent generates various ROS topics that are transmitted over wireless channels in virtual and physical space as the robots move around and scan an area to navigate. Even with a single agent, it is expensive to send all the ROS messages/topics from the robot's two-dimensional or three-dimensional (3-D) LiDAR, photographic imagery, or laser scans to the master node. How can an agent send only essential information, metadata, or semantic knowledge of the environment? Answering this question would reduce communication and computing overheads as well as network payload and delay in the simulation environment.
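A back-of-the-envelope calculation shows why streaming raw sensor data is expensive. The figures are assumptions for illustration only: a LiDAR producing 32,768 points per scan at 10 scans per second, with each point stored as four 32-bit floats (x, y, z, intensity), compared with a hypothetical semantic summary of 50 detected objects at 32 bytes each.

```python
def stream_bandwidth_mbps(items_per_message: int, bytes_per_item: int, messages_per_second: float) -> float:
    """Bandwidth in megabits per second for a fixed-rate message stream."""
    bits_per_second = items_per_message * bytes_per_item * 8 * messages_per_second
    return bits_per_second / 1e6


if __name__ == "__main__":
    # Assumed raw LiDAR stream: 32,768 points x 16 bytes at 10 Hz.
    raw = stream_bandwidth_mbps(32_768, 16, 10.0)
    # Assumed semantic summary: 50 objects x 32 bytes at the same 10 Hz.
    semantic = stream_bandwidth_mbps(50, 32, 10.0)
    print(f"raw point clouds: {raw:.2f} Mbit/s per agent")
    print(f"semantic summary: {semantic:.3f} Mbit/s per agent")
```

Under these assumptions, raw point clouds need about 41.9 Mbit/s per agent versus about 0.128 Mbit/s for the semantic summary, roughly a 328-fold reduction that multiplies across every agent in the team.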

Conventional SLAM algorithms rely on flat representations of point cloud data and do not capture semantic relationships between objects, agents, structures, and their spatial arrangements [4]. To that end, a lightweight form of SLAM can be implemented, such as a spatial perception engine using dynamic scene graphs that capture the hierarchical relationships between artifacts in the environment. Such a representation expresses high-level spatial concepts and relations rather than just lines, planes, points, and voxels.
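A hierarchical scene graph can be represented with a small recursive data structure, as in the sketch below. The layer names (region, place, object), the JSON encoding, and the `SceneNode`/`to_message` names are assumptions for illustration. The sketch shows how a few labeled nodes with positions can stand in for the many raw points they summarize; it does not show how a full spatial perception engine builds the graph.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Tuple


@dataclass
class SceneNode:
    """One node in a layered scene graph (hypothetical layers: region -> place -> object)."""
    node_id: str
    layer: str
    label: str
    position: Tuple[float, float, float]
    children: List["SceneNode"] = field(default_factory=list)

    def add(self, child: "SceneNode") -> "SceneNode":
        """Attach a child node and return it so calls can be chained."""
        self.children.append(child)
        return child

    def find(self, label: str) -> Optional["SceneNode"]:
        """Depth-first search for the first node with the given label."""
        if self.label == label:
            return self
        for child in self.children:
            hit = child.find(label)
            if hit is not None:
                return hit
        return None


def to_message(root: SceneNode) -> bytes:
    """Serialize the whole graph compactly for transmission instead of raw points."""
    return json.dumps(asdict(root), separators=(",", ":")).encode("utf-8")


if __name__ == "__main__":
    site = SceneNode("r1", "region", "river_crossing", (0.0, 0.0, 0.0))
    road = site.add(SceneNode("p1", "place", "approach_road", (5.0, 0.0, 0.0)))
    road.add(SceneNode("o1", "object", "broken_car", (6.0, 1.5, 0.0)))
    site.add(SceneNode("p2", "place", "bridge", (20.0, 0.0, 0.0)))
    print(len(to_message(site)), "bytes for the whole graph")
```

Because relations are explicit in the tree, a receiving agent can answer questions such as "which place contains the broken car?" without reconstructing geometry from points.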

Moreover, novel packet-filtering schemes and skip-window strategies can be employed to disseminate ROS topics intelligently from one agent to another, whether situated in virtual or physical space, reducing the number of messages published and subscribed. This, in effect, helps improve the network's quality of service (QoS). An additional solution is to leverage a ROS 2-based (masterless) framework in combination with topic aggregation and a selective unicast packet dissemination strategy, instead of indiscriminately broadcasting all ROS topics from one agent to another or to a master node [1, 5].
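The skip-window and selective-dissemination ideas can be sketched in plain Python. The policy below is hypothetical: a message on a topic is forwarded only if its value changed by more than a threshold since the last forwarded message, or if `window` consecutive messages have already been skipped (a heartbeat). Forwarded values are then bundled per subscriber so that each agent receives one message containing only the topics it requested. Topic names and scalar payloads are simplifications; in a ROS 2 system, this logic would sit in a relay node between publishers and subscribers.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class SkipWindowFilter:
    """Hypothetical per-topic filter: forward on significant change or after `window` skips."""
    window: int
    threshold: float
    _last_sent: Dict[str, float] = field(default_factory=dict, init=False)
    _skipped: Dict[str, int] = field(default_factory=dict, init=False)

    def should_forward(self, topic: str, value: float) -> bool:
        last = self._last_sent.get(topic)
        skipped = self._skipped.get(topic, 0)
        changed = last is None or abs(value - last) > self.threshold
        if changed or skipped >= self.window:
            self._last_sent[topic] = value
            self._skipped[topic] = 0
            return True
        self._skipped[topic] = skipped + 1
        return False


def aggregate_for_subscribers(
    pending: Dict[str, float], interests: Dict[str, Set[str]]
) -> Dict[str, Dict[str, float]]:
    """Bundle pending topic values into one unicast message per interested subscriber."""
    bundles: Dict[str, Dict[str, float]] = {}
    for agent, topics in interests.items():
        bundle = {topic: value for topic, value in pending.items() if topic in topics}
        if bundle:  # agents with nothing relevant receive nothing
            bundles[agent] = bundle
    return bundles


if __name__ == "__main__":
    f = SkipWindowFilter(window=3, threshold=0.5)
    readings = [1.0, 1.1, 1.2, 1.1, 1.0, 3.0]
    print([f.should_forward("/odom", r) for r in readings])
    # -> [True, False, False, False, True, True]
```

In this run, three near-duplicate readings are suppressed, the fifth is sent as a heartbeat, and the jump to 3.0 is sent immediately. Aggregation then replaces one broadcast per topic with one targeted message per subscriber.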

Case Study

In this section, a case study performed as part of the August 2024 Summer Field Experiments at the U.S. Army Research Laboratory (ARL) Robotics Research Collaboration Campus (R2C2) in Graces Quarters (GQ), MD, will be discussed. AI-enabled decision making in multiple domains within complex and dynamic environments will be addressed. Army capabilities like integrating data from all domains, reasoning across explicit and tacit knowledge, and supporting forces in both physical and information spaces will be covered.

The Army-relevant scenario of this field experiment involved a route reconnaissance operation representing the challenges of autonomous navigation with detection of seen and unseen objects. It also involved obstacle avoidance in complex and noisy environments, in which a commander made maneuver and intelligence decisions across multiple agents and modalities from different geolocations. The experiment showcased remote voice command, obstacle avoidance, and a bridge-crossing task, which tied together the specific elements of the research and contributed to aspects of the AI-enabled, intelligent decision-making cycle. The following key research and development thrusts were addressed:

- Digital twin with photogrammetry rendering via Unity and synthetic data collection and annotation (Figure 2).

- Collaborative training with virtual-physical ML model building and minimal real data collection and annotation (Figure 2).

- Privacy-aware learning of new classes from distant Distributed Virtual Proving Ground (DVPG) sites via federated class-incremental learning.

- Autonomous navigation with object detection and avoidance and LiDAR semantic-segmentation-based navigation.

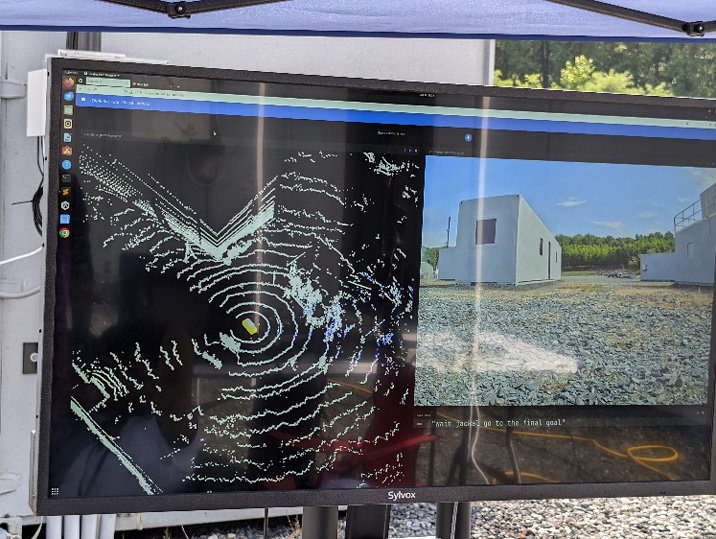

- Remote voice command with robot goal initialization using the DVPG network and voice enhancement with battleground noise.

Figure 2. Digital Twin for Virtual-Physical ML Model Building (Source: N. Roy).

As part of this collaborative research effort, components were demonstrated that leverage ARL's DVPG infrastructure, which connects geographically distributed facilities and capabilities, to perform joint missions and experiments with robots across simulation and physical environments, as shown in Figure 2. The ability to interpret spoken instructions from a remote commander across the DVPG amid noisy environmental conditions was also demonstrated.
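After ASR converts speech to text, the transcript must be mapped to a robot goal. The sketch below shows a deliberately simple keyword-based mapping; the named waypoints, their coordinates, and the verb list are hypothetical, and a fielded system would need far more robust language understanding, especially with noisy ASR output.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical named waypoints in the map frame (x, y), in meters.
NAMED_GOALS: Dict[str, Tuple[float, float]] = {
    "bridge": (42.0, 10.0),
    "staging area": (0.0, 0.0),
}

# Hypothetical command phrases that signal a navigation request.
VERBS = ("go to", "move to", "navigate to", "cross the")


def transcript_to_goal(transcript: str) -> Optional[Tuple[float, float]]:
    """Map an ASR transcript to a navigation goal, or None if it is not understood."""
    text = re.sub(r"[^a-z ]", " ", transcript.lower())  # drop punctuation and digits
    text = " ".join(text.split())  # collapse repeated whitespace
    if not any(verb in text for verb in VERBS):
        return None
    for name, goal in NAMED_GOALS.items():
        if name in text:
            return goal
    return None


if __name__ == "__main__":
    print(transcript_to_goal("Jackal, go to the bridge!"))  # (42.0, 10.0)
```

Returning `None` for anything not understood lets the system ask the commander to repeat the command rather than act on a misrecognized instruction. In a ROS-based setup, the returned coordinates would then be published as a navigation goal for the robot.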

In this collaborative remote robotics experiment, a Jackal UGV was stationed in the physical and virtual GQ environments at ARL's R2C2. Two other Jackals were stationed at two different geolocations: one at the Maryland Robotics Center (MRC) at the University of Maryland, College Park (UMCP) and the other at the Center for Real-Time Distributed Sensing and Autonomy (CARDS) at the University of Maryland, Baltimore County (UMBC). Student commanders were present at the UMCP and UMBC campuses to showcase how remote learning, collaborative training, and automatic speech recognition (ASR) relying on multiple virtual and physical sites could help enhance situational awareness in contested environments.

The experiment focused on three research contributions: ASR, virtual-physical collaborative training, and new class learning via federated class-incremental learning (FCIL). Virtual-physical collaborative training was shown first, with ASR in a virtual GQ environment. UMCP student commanders were then asked to give a voice command to invoke the ARL ROS Unity Simulator at GQ.

A large set of photogrammetry data of the broken car and bridge at GQ was collected to integrate these objects into ARL's existing digital twin of the GQ environment, and synthetic data of the broken car and bridge was also collected. LiDAR semantic segmentation was implemented in the virtual world to detect the broken car and bridge, along with autonomous navigation to avoid the car and successfully cross the bridge in the virtual GQ environment. The objective of the virtual experiment was to confirm that meaningful synthetic data could be collected and annotated properly to enable collaborative training between virtual and physical sites. As part of the GQ virtual experiment, shown in Figure 3, the student commander at UMCP used the DVPG network to render the physical GQ site inside Unity by remote command and to navigate the robot in physical (GQ) and virtual (Unity) spaces.

Figure 3. Remote Voice Command in a Virtual GQ Environment (Source: N. Roy).
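The way LiDAR semantic segmentation can feed navigation is sketched below: labeled points are projected onto a 2-D grid, and each cell's cost depends on its class, with obstacles overriding traversable ground in the same cell. The label sets, the 0/50/100 cost scale, and the function name are assumptions chosen to mirror the broken car, bridge, and tree logs in this case study; the segmentation network itself is not shown.

```python
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float, str]  # x, y, z (meters) and semantic label

# Hypothetical label policy: which classes block motion and which are drivable.
OBSTACLE_LABELS = {"broken_car", "human", "tree_logs"}
TRAVERSABLE_LABELS = {"road", "grass", "bridge"}


def semantic_costmap(points: Iterable[Point], resolution: float) -> Dict[Tuple[int, int], int]:
    """Project labeled LiDAR points onto a 2-D grid of traversal costs.

    0 = known traversable, 50 = unknown class, 100 = lethal obstacle.
    The highest cost seen in a cell wins, so an obstacle on a bridge blocks it.
    """
    costmap: Dict[Tuple[int, int], int] = {}
    for x, y, _z, label in points:
        cell = (int(x // resolution), int(y // resolution))  # floor handles negatives
        if label in OBSTACLE_LABELS:
            cost = 100
        elif label in TRAVERSABLE_LABELS:
            cost = 0
        else:
            cost = 50
        costmap[cell] = max(costmap.get(cell, 0), cost)
    return costmap


if __name__ == "__main__":
    scan = [
        (0.2, 0.3, 0.0, "road"),
        (0.4, 0.1, 0.6, "broken_car"),
        (1.5, 0.2, 0.0, "bridge"),
        (2.5, 0.5, 0.0, "bridge"),
        (2.6, 0.4, 0.3, "tree_logs"),
    ]
    print(semantic_costmap(scan, resolution=1.0))
```

With this policy, plain bridge cells stay traversable, but once points on the bridge are labeled `tree_logs`, those cells become lethal and a planner would route around them rather than attempt the crossing.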

Next, the experiments demonstrated autonomous navigation that avoided the broken car and successfully crossed the bridge at the physical GQ site through collaborative training and remote learning. An ROS board interface was implemented for publishing and subscribing to all ROS topics across multiple physical and virtual sites, along with a domain adaptation technique between virtual and physical sites using synthetic and real datasets. In this experiment, student commanders at the UMBC DVPG site were asked to give the voice command to accomplish the bridge-crossing mission at the physical GQ site while avoiding obstacles such as the broken car. This part of the case study represented the first contribution: ASR and collaborative training using real and synthetic data collected from multiple virtual and physical sites to improve situational awareness.

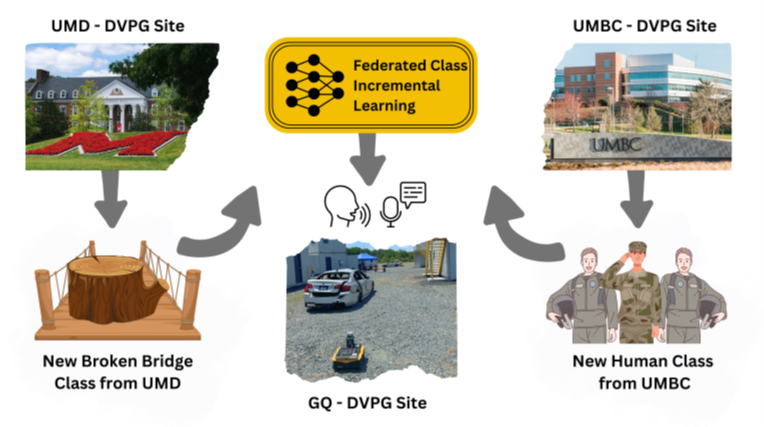

The second contribution was new class learning using the FCIL technique, as shown in Figure 4. In this part of the experiment, data was collected from two remote physical DVPG sites: MRC at UMCP and CARDS at UMBC. Data for a new class, a bridge with tree logs, was collected at UMCP, and data for another new class, human, was collected at UMBC, as shown in Figure 5. (Note that the physical and virtual GQ agents were not trained on these two new classes during the first collaborative training experiment.)

Figure 4. Building Privacy-Aware Machine Learning Model From Multiple Remote DVPG Sites (Source: N. Roy).

Figure 5. Sharing Model Parameters for Unseen Classes From Remote DVPG Sites (Source: N. Roy).

Using FCIL, the weighted average of the model parameters from UMCP and UMBC was transferred to the GQ physical site to test whether the new classes, human and bridge with tree logs, would be detected and avoided. This enabled the Jackal UGV at GQ to navigate autonomously; this time, it did not cross the bridge because of the fallen tree logs.

This part of the case study depicted the second contribution. The field experiment demonstrated the value of automatic speech recognition, collaborative training, and distributed remote learning from different geolocations in the presence of a digital twin, an approach with many potential civilian and military applications.
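One simple way to combine classifier heads from sites that learned different classes is class-wise weighted averaging, sketched below. This is an illustrative scheme, not the FCIL method used in the experiment. It assumes the sites share a common, already-aggregated feature extractor, represents each site's output layer as a mapping from class name to weight vector, and averages each class only over the sites that trained on it. Practical FCIL methods also add mechanisms to limit forgetting of old classes, which are not shown.

```python
from typing import Dict, List, Tuple

# Hypothetical per-site classifier head: class name -> output-layer weight vector.
Head = Dict[str, List[float]]


def merge_class_incremental_heads(site_heads: List[Tuple[Head, Dict[str, int]]]) -> Head:
    """Merge per-class weights from sites that may know different classes.

    Each entry is (head, samples_per_class). A class's merged vector is the
    sample-weighted average over only the sites that trained on that class,
    so a class learned at a single site is adopted unchanged by all sites.
    """
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for head, samples in site_heads:
        for cls, weights in head.items():
            n = samples.get(cls, 0)
            if n == 0:
                continue  # this site holds no training data for the class
            acc = sums.setdefault(cls, [0.0] * len(weights))
            for i, w in enumerate(weights):
                acc[i] += w * n
            counts[cls] = counts.get(cls, 0) + n
    return {cls: [v / counts[cls] for v in acc] for cls, acc in sums.items()}


if __name__ == "__main__":
    gq = ({"broken_car": [1.0, 0.0], "bridge": [0.0, 1.0]}, {"broken_car": 100, "bridge": 100})
    umcp = ({"broken_car": [0.5, 0.5], "bridge_with_tree_logs": [0.25, 0.75]},
            {"broken_car": 100, "bridge_with_tree_logs": 50})
    umbc = ({"human": [0.5, 0.5]}, {"human": 80})
    print(merge_class_incremental_heads([gq, umcp, umbc]))
```

In this example, the GQ head gains the `bridge_with_tree_logs` and `human` classes it never trained on, while the shared `broken_car` class is averaged across the two sites that observed it.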

Research Areas of Opportunity

Based on the preliminary investigations presented here, many real-world challenges remain to be explored in a virtual-physical co-simulation framework. The autonomous agents' interoperability, calibration, and parameter-tuning issues must be considered first from a software integration perspective. For path planning, navigation, and sensing with a minimal number of autonomous agents, robotic assets must be allocated optimally, because the number of autonomous assets available in the real world is limited. Furthermore, universal data models that are compact, accessible across many programming languages, and efficient on the network need to be developed, and these models must also support future scalability.

Conclusions

Increased incorporation of autonomous-system interactions is anticipated. Autonomous drone swarms, unmanned ground vehicles, and packs of quadrupedal robots are no longer novel technologies. To use these robots effectively, the real-world challenges in the interactions among collaborating robots need to be understood [6–9]. Ongoing research examines how an object detected by one agent can be learned by agents at another location, using federated learning to transfer that knowledge securely with minimal model parameters.

Further, software interoperability across heterogeneous robotic assets and real-time 3-D semantic segmentation, achieved by transferring and sharing knowledge through federated learning models from distributed remote sites, are being investigated. Finally, virtual-physical co-simulation is a novel experimentation approach that could be used to scale the number of agents in robotics simulations for emerging application domains.

Acknowledgments

This research is supported by the U.S. Army Grant #W911NF2120076, Office of Naval Research Grant #N00014-23-1-2119, National Science Foundation (NSF) Research Experiences for Undergraduates (REU) Site Grant #2050999, and NSF Computer and Network Systems (CNS) EArly-concept Grants for Exploratory Research (EAGER) Grant #2233879.

The authors would like to acknowledge the following contributions:

- ARL researchers—Dr. Niranjan Suri, Dr. Adrienne Raglin, Dr. Carl Busart, Dr. Stephanie Lukin, Dr. Claire Bonial, and Dr. Felix Gervits.

- UMBC and UMCP faculty—Dr. Anuradha Ravi, Dr. Abu Zaher Faridee, Dr. Zahid Hasan, Dr. Frank Ferraro, Dr. Cynthia Matuszek, Dr. Aryya Gangopadhya, Dr. Derek Paley, and Dr. Carol Espy Wilson.

- UMBC and UMCP students—Masud Ahmed, Emon Dey, Jumman Hossain, Saeid Anwar, Indrajeet Ghosh, Gaurav Shinde, Snehalraj Chugh, Sadman Sakib, Vinay Krishna Kumar, and Shuubham Ojha.

- Undergraduate student researchers as part of the NSF REU program—Hersch Nathan and Adam Goldstein.

References

- Dey, E., J. Hossain, N. Roy, and C. Busart. “SynchroSim: An Integrated Co-Simulation Middleware for Heterogeneous Multi-Robot System.” The 18th International Conference on Distributed Computing in Sensor Systems, 2022.

- Anwar, M. S., A. Ravi, E. Dey, G. Shinde, I. Ghosh, J. Freeman, C. Busart, A. Harrison, and N. Roy. "CoOpTex: Multimodal Cooperative Perception and Task Execution in Time-critical Distributed Autonomous Systems." The 21st International Conference on Distributed Computing in Smart Systems and the Internet of Things, 2025.

- Anwar, M. S., E. Dey, M. K. Devnath, I. Ghosh, N. Khan, J. Freeman, T. Gregory, N. Suri, K. Jayarajah, S. R. Ramamurthy, and N. Roy. “HeteroEdge: Addressing Asymmetry in Heterogeneous Collaborative Autonomous Systems.” The IEEE 20th International Conference on Mobile Ad Hoc and Smart Systems, pp. 575–583, 2023.

- Ahmed, M., Z. Hasan, A. Z. M. Faridee, M. S. Anwar, K. Jayarajah, S. Purushotham, S. You, and N. Roy. “ARSFineTune: On-the-Fly Tuning of Vision Models for Unmanned Ground Vehicles.” The 20th International Conference on Distributed Computing in Smart Systems and the Internet of Things, IEEE, 2024.

- Dey, E., M. Walczak, M. S. Anwar, N. Roy, J. Freeman, T. Gregory, N. Suri, and C. Busart. “A Novel ROS2 QOS Policy-Enabled Synchronizing Middleware for Co-Simulation of Heterogeneous Multi-Robot Systems.” The 32nd International Conference on Computer Communications and Networks, pp. 1–10, 2023.

- Hossain, J., A. Z. Faridee, D. Asher, J. Freeman, T. Trout, T. Gregory, and N. Roy. “QuasiNav: Asymmetric Cost-Aware Navigation Planning With Constrained Quasimetric Reinforcement Learning.” The IEEE International Conference on Robotics and Automation (ICRA), 2025.

- Shinde, G., A. Ravi, E. Dey, J. Lewis, and N. Roy. “TAVIC-DAS: Task and Channel-Aware Variable-Rate Image Compression for Distributed Autonomous System.” The 4th IEEE Workshop on Pervasive and Resource-constrained Artificial Intelligence, colocated with the 23rd IEEE International Conference on Pervasive Computing and Communications (PerCom), 2025.

- Dey, E., A. Ravi, J. Lewis, V. Kumar, J. Freeman, T. Gregory, N. Suri, C. Busart, and N. Roy. “DACC-Comm: DNN-Powered Adaptive Compression and Flow Control for Robust Communication in Network-Constrained Environment.” The 17th International Conference on COMmunication Systems & NETworkS (COMSNETS), 2025.

- Hossain, J., and N. Roy. “Learning Optimal Policies With Quasi-Potential Functions for Asymmetric Traversal.” The 42nd International Conference on Machine Learning (ICML’25), 2025.

Biographies

Nirmalya Roy is a professor in the Information Systems Department and director of the Mobile, Pervasive, and Sensor Computing Lab at UMBC; associate director of CARDS at UMBC; and a co-PI on an ArtIAMAS (AI and Autonomy for Multi-Agent Systems) cooperative research agreement from ARL in collaboration with the UMCP. His current research interests include use-inspired AI/ML and human-centric data science with applications to smart health, cyber-physical systems, Internet of Things, robotics, and autonomy. Prior to joining UMBC, he was a clinical assistant professor at Washington State University, a research staff member at the Institute for Infocomm Research in Singapore, and a postdoctoral fellow at the University of Texas at Austin. Dr. Roy holds a B.E. in computer science and engineering from Jadavpur University, India, and an M.S. and Ph.D. in computer science and engineering from the University of Texas at Arlington.

Jade Freeman is the chief of the Battlefield Information Systems Branch at the U.S. Army Combat Capabilities Development Command (DEVCOM), ARL, where she manages research projects on cross-reality technology, large language models, computer vision, and AI resilience and assurance cases. Dr. Freeman holds a Ph.D. in statistics from George Washington University.

Mark Dennison is the information dynamics team lead for the Battlefield Information Systems Branch at DEVCOM, ARL, where he explores how augmented and mixed reality technologies can enhance information visualization and interaction, sharing, and exploitation to enable shared situational understanding across highly decentralized maneuver forces and allow warfighters to collaborate more effectively with robotic systems. Previously, he studied how heterogeneous physiological sensor information can be used to predict motion sickness onset and severity in head-mounted display users. Dr. Dennison holds a bachelor’s degree in psychology, a master’s degree in cognitive neuroscience, and a Ph.D. in psychology from the University of California, Irvine.

Theron Trout is vice president and chief operating officer of Stormfish Scientific Corporation, where he focuses on enabling large-scale, distributed environments interconnecting users in virtual-, augmented-, and mixed-reality technologies to perform military-relevant analysis and decision-making activities. Mr. Trout holds a B.S. in computer science, with minors in physics and mathematics, from Marshall University.

Timothy Gregory is an electronics engineer in the Battlefield Information Systems Branch at DEVCOM, ARL, where he works on various software and hardware projects in robotics, unattended ground sensors, database systems, geographic information systems, communications protocols, and sensor simulation and network systems. He also oversees and manages research experiments and field tests at Army and multinational coalition military exercises. Mr. Gregory holds a B.S. in computer science from UMCP.