Introduction

Mobile robots, equipped with wheels, tracks, or legs, are designed to move from a start location to a goal in various indoor and outdoor environments. A key area of interest is making them capable of autonomously navigating diverse environments.

Within mobile robots, legged robots have been used for many applications in outdoor settings like surveillance [1], inspection in power plants and factories [2], reconnaissance [3], disaster response [4], and planetary exploration [5] due to their superior dynamics, which enables them to traverse challenging outdoor terrains [6, 7]. For these applications, a robot may have to first perceive various terrains and their challenges, different kinds of obstacles (e.g., rocks, trees, tall grass, ditches, etc.), and dimensions and properties (hardness, pliability/bendability, over-hanging objects, etc.). Next, it must make navigation decisions to either avoid nontraversable terrains and obstacles or use locomotion strategies to traverse them stably. Three components—perception (what terrains and obstacles are nearby), navigation (where the robot must walk), and locomotion (how the robot must walk)—form the core of legged robot autonomy.

Legged robots perceive their environments through two possible ways—exteroception and proprioception. Exteroception refers to sensing the environment using visual sensors such as red, green, and blue (RGB)/RGB-depth (RGB-D) cameras, two-dimensional (2-D) or three-dimensional (3-D) light detection and ranging (LiDAR) methods, radars, etc. Conversely, proprioception refers to sensing the environment using the robot’s internal states such as leg joint positions, velocities, joint forces, torques, etc. The robot’s navigation or planner, on the other hand, computes trajectories or velocities for the robot to execute based on the perceived environment complexities.

The work presented in this article deals with local planning, i.e., computing trajectories over a short time horizon based on local sensing (∼ tens of meters). The robot’s locomotion computes its leg poses and contact points on the ground for stable traversal on any terrain or object that the robot can climb over. Given these components, the challenges to legged robot autonomy in unstructured outdoor terrains are discussed in the next section.

Challenges in Unstructured Outdoor Navigation

Unstructured, off-road terrains are characterized by a lack of predefined pathways; uneven terrains; the presence of random natural obstacles like rocks, fallen branches, various vegetation (trees of various sizes, bushes, tall grass, reeds, etc.); and negative obstacles like pits and ditches. The challenges created by these characteristics are briefly discussed next.

Terrain

In unstructured terrains, the robot’s legs could slip, trip, sink, or get entangled in vegetation. Therefore, a robot could crash due to one of the following reasons:

- Poor foothold: This causes the robot’s feet to slip in rocky or slippery terrains like ice because the surface does not provide enough grip or traction for the robot to stand or walk.

- Granularity: This causes the robot’s feet to sink into the terrain (e.g., sand, mud, and snow), leading to incorrect measurements of joint states. This could cause the robot’s locomotion controller to overcompensate to stabilize itself, resulting in crashes.

- Resistance to motion: This is typically caused by dense, pliable vegetation (PV) that can be walked through (e.g., tall grass and bushes) but requires significantly higher effort (motor torques) from the robot to traverse. Additionally, the robot’s legs could get entangled in vegetation, resulting in a crash.

Dense Vegetation

In unstructured outdoor settings, a major challenge arises from different kinds of vegetation. Vegetation can be fundamentally classified into one of the following categories: (1) tall grass of variable density, which is pliable (therefore, the robot can walk through) and could be taller than the robot’s sensor-mounting height, causing occlusions; (2) bushes/shrubs that are typically dense and shorter than the robot and detectable without occlusions; and (3) trees (>2 mm high), which are nonpliable/untraversable and must be avoided.

Navigating through such vegetation, the robot could encounter the following adverse phenomena:

- Freezing: The robot’s planner proclaims that no feasible trajectories or velocities exist to move toward its goal and halts it for extended time periods.

- Entrapment: The commanded velocity by the planner is non-zero, but the robot’s actual velocity is near-zero due to its legs getting stuck in vegetation or other entities.

- Collisions: The robot does not detect a nonpliable obstacle like a bush or tree and collides with it.

Vegetation also poses a major challenge to exteroception due to occlusions and the lack of clear boundaries between different kinds of vegetation. For instance, tall grass could be occluding trees, leading to erroneous detections and collisions.

More complex challenges and potential methods to address them are discussed in the “Future Work” section.

Proposed Solutions

In this section, some proposed perception and navigation algorithms addressing these challenges are discussed.

Perceiving Uneven, Granular Terrains

To address terrain challenges, the robot must first accurately estimate their traversability. Traditional navigation methods relying solely on exteroceptive sensing (e.g., cameras and LiDARs) or proprioceptive feedback (e.g., joint encoders) often fall short in complex terrains or when environmental conditions (e.g., adverse lighting) affect sensor reliability. Therefore, closely coupling exteroceptive and proprioceptive sensing and adaptively utilizing the more reliable mode of sensing for estimating terrain traversability at any instant are proposed.

To this end, Adaptive Multimodal Coupling (AMCO) [8], a novel method that utilizes three distinct cost maps derived from the robot’s sensory data, is suggested. The three maps are (1) the general knowledge map, (2) the traversability history map, and (3) the current proprioception map. These maps combine to form a coupled traversability cost map (shown in Figure 1), which guides the robot in selecting stabilizing gaits and velocities in terrains with poor footholds, granularity, and resistance to motion. In the figure, the input image is passed through a segmentation model to segment the image into different terrain types (left). Then, the AMCO utilizes proprioception and segmented images (center) to generate a couple traversability cost map Camco (right). AMCO’s main components are described next.

![Figure 1. Traversability Cost Map Generation Using AMCO [8], Where Light Colors Indicate High Costs and Vice Versa (Source: M. Elnoor).](https://dsiac.dtic.mil/wp-content/uploads/2025/02/sathyamoorthy-figure-1.png)

Figure 1. Traversability Cost Map Generation Using AMCO [8], Where Light Colors Indicate High Costs and Vice Versa (Source: M. Elnoor).

General Knowledge Map

The general knowledge map represents a terrain’s general level of traversability (e.g., walking on soil is generally stable). It is generated using semantic segmentation of RGB images [9] to classify terrain types and their expected traversabilities. The segmentation process categorizes each pixel into predefined classes (e.g., stable, granular, poor foothold, and high-resistance terrains). This map assigns traversability costs based on these classifications utilizing a model that incorporates the smallest area ellipse derived from principal component analysis of terrain data [10]. This process involves discretizing the segmented image into grids and assigning costs based on the predominant terrain type within each grid (see Figure 1 [right]).

Traversability History Map

The traversability history map reflects changes in terrain conditions that might not be immediately apparent from visual data (e.g., soil felt wet and deformable a few times in another location). It records the robot’s recent experiences on a terrain as proprioceptive signals for a certain duration and adjusts the costs in the general knowledge map dynamically based on the new information. This approach ensures that recent, context-specific data inform the robot’s navigation strategy, improving its adaptability to changing terrain conditions.

Current Proprioception Map

The current proprioception map provides a real-time assessment of the terrain’s traversability based on the robot’s instantaneous proprioceptive feedback (e.g., soil feels increasingly deformable and is nontraversable). The current proprioception map is constructed by extrapolating the robot’s present proprioceptive measurements to predict upcoming traversability along the robot’s trajectory. This map relies solely on the proprioceptive feedback, which is inherently reliable regardless of environmental conditions that affect visual sensors. The traversability cost is calculated based on the distance from the robot’s current location, with a predefined cost for moderately traversable terrain adjusted by the observed proprioceptive signals [8].

Adaptive Coupling

AMCO combines the general knowledge map, traversability history map, and current proprioception map into a final cost map Camco based on the reliability (ξ) of the visual sensor data. This reliability is assessed using metrics such as brightness and motion blur, which impact the accuracy of the semantic segmentation. The coupling mechanism assigns weights to the vision-based general knowledge and recent history maps according to the reliability score and integrates them with the proprioception map to form the coupled traversability cost map.

![]()

Camco (Figure 1 [right]) is used as a robot-centric local cost map that can be used to compute least-cost robot trajectories and gaits using a planning algorithm [11]. It ensures that navigation decisions prioritize the most reliable sensory input, adapting to varying environmental conditions.

Perceiving and Navigating Well-Separated Vegetation

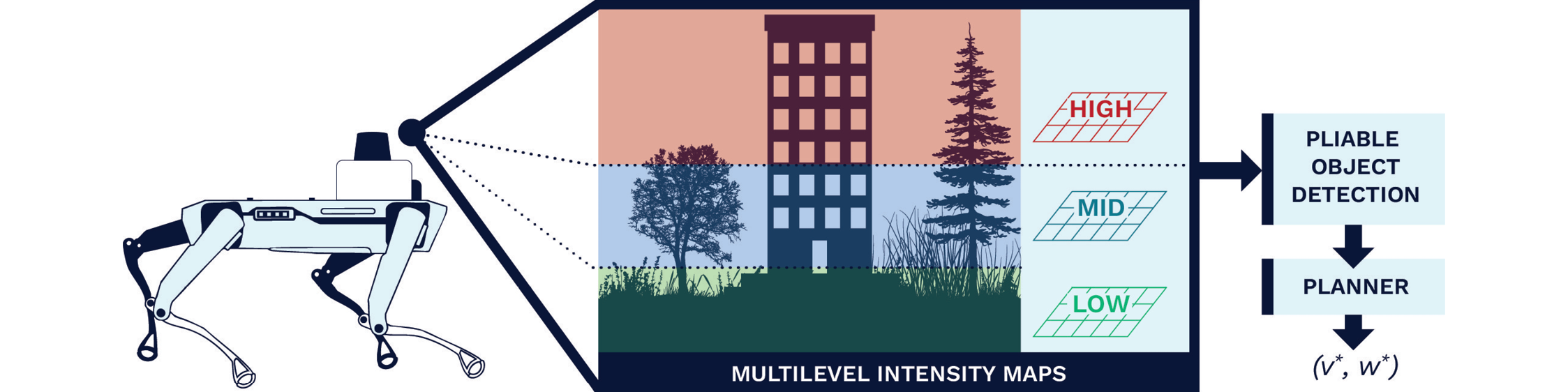

To estimate the traversability of different kinds of vegetation, navigate cautiously under uncertainty, and recover from physical entrapments in vegetation, VEgetation-aware Robot Navigation [VERN] [7] is presented. VERN uses RGB images and 2-D laser scans to classify vegetation based on pliability (a measure of how easily a robot can walk through them) and presents cautious navigation strategies for vegetation. VERN’s key components and how they connect are discussed in the following subsections and shown in Figure 2.

![Figure 2. Overall System Architecture of VERN [7] (Source: K. Weerakoon).](https://dsiac.dtic.mil/wp-content/uploads/2025/02/sathyamoorthy-figure-2.png)

Figure 2. Overall System Architecture of VERN [7] (Source: K. Weerakoon).

In the figure, the few-shot classifier uses quadrants of RGB images and compares them with reference images of various vegetation classes to output a vegetation class and confidence. Multiview cost maps corresponding to various heights are used to assess vegetation height. Using the classification, confidence, and height, the costs are cleared/modified to enable the robot to walk through PV if necessary. Further, if the robot freezes or gets entangled in vegetation, it resorts to holonomic behaviors to reach a safe location that is saved prior to freezing.

Few-Shot Learning Classifier

VERN employs a few-shot learning classifier based on a Siamese network architecture [12] to detect various kinds of vegetation with high accuracy using limited RGB training data. This classifier is trained on a few hundred RGB images to classify vegetation as tall grass, bushes, or trees, each with distinct pliability characteristics. During training, the classifier is fed with pairs of images either belonging to the same or different vegetation class. Thus, the classifier learns to compare images and identify similar types of vegetation. During runtime, the classification process begins by dividing the RGB image into quadrants. Each quadrant is compared with reference images to detect the type of vegetation present. The classifier finally groups vegetation binarily into the following two main types:

- PV: Vegetation that the robot can navigate through (grass of different heights and densities).

- Nonpliable vegetation (NPV): Vegetation that the robot must avoid (trees and bushes).

Multiview Cost Maps

To detect vegetation height and density accurately, VERN uses three tiers of cost maps derived from 2-D LiDAR scans at different heights. These cost maps, represented as Clow, Cmid, and Chigh, have the following properties. Clow captures all obstacles, providing a base map for general navigation. Cmid focuses on medium-height obstacles like bushes and low-hanging branches. Chigh captures tall obstacles such as trees and buildings. Using multiple layers helps distinguish between critical obstacles (e.g., trees and buildings) and noncritical elements like overhanging foliage, allowing for safer navigation decisions.

Integrating Classification and Cost Maps

Using homography, the RGB quadrants passed to the classifier are projected onto the multiview cost maps to align the vegetation data recorded in the image and cost map and create a vegetation-aware traversability map (CVA). This map guides the robot’s navigation decisions by dynamically adjusting the navigation costs based on the vegetation’s pliability, height, and classification confidence. For instance, short and high-confidence PV has a lower cost, promoting navigation through these areas. Taller and less PV has a higher cost, encouraging the robot to avoid them. VERN uses the dynamic window approach to navigate different vegetation types by dynamically computing the least-cost robot’s linear and angular velocities based on CVA.

Cautious and Recovery Behaviors

When encountering high-cost or uncertain regions in the traversability map, VERN executes cautious navigation behaviors by limiting the robot’s maximum velocity to prevent collisions. Further, VERN includes recovery behaviors for situations where the robot gets physically entrapped in dense vegetation by storing safe locations as the robot navigates. The robot can extricate itself from entanglements using holonomic movements to the closest safe location.

Perceiving Intertwined Vegetation

When pliable and NPV are highly intertwined with each other, RGB image-based methods such as VERN could produce erroneous classifications that could lead to collisions. To overcome this limitation, multilayer intensity maps (MIMs) [13] are proposed. Although LiDAR point clouds (represented as [x, y, z, int]) scatter in the presence of thin, unstructured vegetation like tall grass, the intensity (int) of the light reflected back to the LiDAR indicates the object’s solidity properties (higher intensities implies higher solidity). MIMs utilize this property to differentiate PV intertwined with nonpliable obstacles, as the reflected intensities would be high.

MIMs

MIMs [13] are composed of several layers of 2-D grid maps (see Figure 3). Each of these layers is constructed by (1) discretizing the x, y 3-D point cloud points into grid locations, (2) summing the intensity of all the points within a grid and height interval, and (3) normalizing the summed intensity and assigning its value to a grid. Therefore, each layer would contain grids with various intensity values at various locations around the LiDAR. This denotes the solidity of the objects, and implicitly, also their height.

Figure 3. MIM Layers Using the Point Clouds Corresponding to Different Height Intervals (Source: K. Weerakoon).

In the figure, the intensities contained in the grids of each layer are compared against a threshold intensity. If the intensities are lower than the threshold, this indicates the presence of a nonsolid or pliable object. This information is finally fed into a planner for navigation.

Detecting Intertwined Vegetation

To differentiate truly solid, dense vegetation (e.g., trees and bushes) from PV like tall grass, three layers of MIMs are used. The three grids correspond to three nonoverlapping height intervals from the ground to the maximum height that the LiDAR can view. The grids of each of these layers are summed, and each grid’s value is checked to see if it is greater than an intensity threshold. If it is, then the grid corresponds to a truly solid vegetation like a tree. If not, then it belongs to either free space or PV like tall grass.

Since MIMs also view different height levels, the reflected higher intensities will allow them to be detected as truly solid obstacles, even if PV is intertwined with taller solid obstacles. Additionally, since MIM layers use a grid map structure, they can be directly used as cost maps for planning low-cost robot trajectories.

Real-World Evaluation

All these methods have been implemented on a real Boston Dynamics Spot robot and evaluated on real-world unstructured terrains and vegetation compared to prior works such as GA-Nav [9] and Spot’s Inbuilt planner (see Figure 4).

![Figure 4. Navigation Trajectories Generated by AMCO [8], VERN [7], and MIM [13] in Diverse Vegetation and Terrain Scenarios <em>(Source: M. Elnoor).](https://dsiac.dtic.mil/wp-content/uploads/2025/02/sathyamoorthy-figure-4.jpg)

Figure 4. Navigation Trajectories Generated by AMCO [8], VERN [7], and MIM [13] in Diverse Vegetation and Terrain Scenarios (Source: M. Elnoor).

Future Work

There are several challenges unaddressed by existing work in navigating unstructured vegetation beyond those discussed in the “Challenges in Unstructured Outdoor Navigation” section. These challenges are discussed next, and a few potential solutions for addressing them are proposed.

Robust Detection of Diverse Vegetation

Although VERN and MIM introduce preliminary methods to detect vegetation pliability, the large diversity of vegetation appearances and other obstacles (e.g., barbed wire fences that MIMs may not be able to detect) necessitates developing novel, robust perception methods. To this end, compact vision language models (VLMs) such as Contrastive Language-Image Pretraining [14] are promising and can be fine-tuned using real-world data of different kinds of vegetation and obstacles to robustly detect them.

Handling Positive and Negative Obstacles

Positive obstacles are referred to here as those that the robot can climb, jump, or leap over instead of circumventing them (e.g., mounds, fallen branches, logs, etc.). Negative obstacles are ditches, pits, and potholes that could destabilize the robot. Traversing environments with such obstacles requires superior perception and locomotion capabilities. Fusing RGB image-based segmentation or classification [9, 15, 16] and LiDAR point clouds could help estimate the types and dimensions of such obstacles when they are unoccluded by entities like vegetation. However, when they are occluded by vegetation, using proprioceptive feedback and novel locomotion policies trained using deep reinforcement learning (DRL) could help detect such obstacles and maintain stability while traversing them.

Handling Adverse Lighting and Weather

Adverse lighting and weather conditions can significantly impact the robot’s exteroceptive perception capabilities. Low light or harsh sunlight can obscure visual data, making it difficult for the robot to detect and navigate obstacles. A few possibilities to address adverse lighting are as follows:

- Altering the lighting in the images used to train compact VLMs for detecting various obstacles.

- Relying more on point cloud and proprioception in poorly lit scenarios.

- Developing probabilistic methods to predict the traversability of terrains ahead based on past elevation, proprioception data.

- Training DRL locomotion policies to exhibit cautious behaviors such as gently bumping into objects using the front legs to assess the upcoming terrain’s traversability.

To address adverse weather, methods to handle occlusions caused by rain droplets or snow on cameras and LiDAR sensors can be developed. This involves assessing affected parts of images or point clouds and relying on unaffected data for perception. Additionally, complementing exteroception with proprioception can ensure reliable traversability estimation under partial observability.

Handling Water Bodies

Compared to wheeled robots, legged robots can traverse still and running water of certain depth (e.g., knee deep) due to their superior dynamics. Still water, apart from exerting resistance to the robot’s motion, could also present occluded, slippery terrains with rocks, pebbles, soil, etc., on the waterbed. Therefore, the primary challenge is to detect these poor foothold and granular challenges using proprioceptive feedback and developing new locomotion strategies to stabilize and walk on such terrains. A possible solution is to use online and offline DRL approaches for training a blind (without exteroception) locomotion policy with trials on simulated pebbles, rocks, and still water (higher resistance to leg motion) first and then fine-tuning the model with real-world data. Suggesting a blind locomotion policy first ensures that the robot can traverse terrains even when all exteroceptive inputs become unreliable.

Running water poses additional challenges due to various resistive forces and slippery, rocky riverbeds. To address this, methods to estimate the overall water flow (direction and magnitude), along with the properties of the underlying terrain using the forces experienced by the robot’s knee and hip joints, can be developed. Next, the feasibility of traversing through the water stream is estimated by transforming the water flow and underlying waterbed’s traversability into the motor torque requirements. If the requirements are lower than the maximum torques that the robot can generate, the stream is considered traversable.

Conclusions

In this article, some of the challenges of navigating a legged robot on unstructured outdoor terrains with a variety of terrain properties and vegetation were discussed. Solutions were proposed using exteroceptive and proprioceptive sensing to perceive a terrain’s properties and adapt the robot’s velocities and gaits for stable navigation. Furthermore, RGB image-based and LiDAR point cloud-based methods accurately detecting vegetation properties like pliability were analyzed.

Outdoor environments pose many more challenges to navigation. Several key problems like the diversity of vegetation, small positive and negative obstacles on the ground, and still/running water were addressed, as well as potential directions for future work in perception and locomotion.

References

- Bruzzone, L., and P. Fanghella. “Functional Redesign of Mantis 2.0, a Hybrid Leg-Wheel Robot for Surveillance and Inspection.” Journal of Intelligent and Robotic Systems, vol. 81, pp. 215–230, 2016.

- Khan, A., C. Mineo, G. Dobie, C. Macleod, and G. Pierce. “Vision Guided Robotic Inspection for Parts in Manufacturing and Remanufacturing Industry.” Journal of Re-Manufacturing, vol. 11, no. 1, pp. 49–70, 2021.

- Matthies, L., Y. Xiong, R. Hogg, D. Zhu, A. Rankin, B. Kennedy, M. Hebert, R. Maclachlan, C. Won, T. Frost, et al. “A Portable, Autonomous, Urban Reconnaissance Robot.” Robotics and Autonomous Systems, vol. 40, no. 2-3, pp. 163–172, 2002.

- Yoshiike, T., M. Kuroda, R. Ujino, H. Kaneko, H. Higuchi, S. Iwasaki, Y. Kanemoto, M. Asatani, and T. Koshiishi. “Development of Experimental Legged Robot for Inspection and Disaster Response in Plants.” The 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, pp. 4869–4876, 2017.

- Valsecchi, G., C. Weibel, H. Kolvenbach, and M. Hutter. “Towards Legged Locomotion on Steep Planetary Terrain.” The 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, pp. 786–792, 2023.

- Frey, J., M. Mattamala, N. Chebrolu, C. Cadena, M. Fallon, and M. Hutter. “Fast Traversability Estimation for Wild Visual Navigation.” arXiv preprint arXiv:2305.08510, 2023.

- Sathyamoorthy, A. J., K. Weerakoon, T. Guan, M. Russell, D. Conover, J. Pusey, and D. Manocha. “VERN: Vegetation-Aware Robot Navigation in Dense Unstructured Outdoor Environments.” The 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, pp. 11 233–11 240, 2023.

- Elnoor, M., K. Weerakoon, A. J. Sathyamoorthy, T. Guan, V. Rajagopal, and D. Manocha. “AMCO: Adaptive Multimodal Coupling of Vision and Proprioception for Quadruped Robot Navigation in Outdoor Environments.” arXiv preprint arXiv:2403.13235, 2024.

- Guan, T., D. Kothandaraman, R. Chandra, A. J. Sathyamoorthy, K. Weerakoon, and D. Manocha. “GA-Nav: Efficient Terrain Segmentation for Robot Navigation in Unstructured Outdoor Environments.” IEEE Robotics and Automation Letters, vol. 7, no. 3, pp. 8138–8145, 2022.

- Elnoor, M., A. J. Sathyamoorthy, K. Weerakoon, and D. Manocha. “ProNav: Proprioceptive Traversability Estimation for Autonomous Legged Robot Navigation in Outdoor Environments.” arXiv preprint arXiv:2307.09754, 2023.

- Fox, D., W. Burgard, and S. Thrun. “The Dynamic Window Approach to Collision Avoidance.” IEEE Robotics Automation Magazine, vol. 4, no. 1, pp. 23–33, March 1997.

- Koch, G. R., R. Zemel, and R. Salakhutdinov. “Siamese Neural Networks for One-Shot Image Recognition.” Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 2015.

- Sathyamoorthy, A. J., K. Weerakoon, M. Elnoor, and D. Manocha. “Using LiDAR Intensity for Robot Navigation.” arXiv e-prints, p. arXiv:2309.07014, September 2023.

- Radford, A., J. W. Kim, C. Hallacy, A. Ramesh, G. Goh, S. Agarwal, G. Sastry, A. Askell, P. Mishkin, J. Clark, G. Krueger, and I. Sutskever. “Learning Transferable Visual Models from Natural Language Supervision.” arXiv e-prints, p. arXiv:2103.00020, February 2021.

- Song, Y., F. Xu, Q. Yao, J. Liu, and S. Yang. “Navigation Algorithm Based on Semantic Segmentation in Wheat Fields Using an RGB-D Camera.” Information Processing in Agriculture, vol. 10, no. 4, pp. 475–490, 2023.

- Wang, W., B. Zhang, K. Wu, S. A. Chepinskiy, A. A. Zhilenkov, S. Chernyi, and A. Y. Krasnov. “A Visual Terrain Classification Method for Mobile Robots’ Navigation Based on Convolutional Neural Network and Support Vector Machine.” Transactions of the Institute of Measurement and Control, vol. 44, no. 4, pp. 744–753, 2022.

Biographies

Adarsh Jagan Sathyamoorthy is a researcher focusing on developing navigation algorithms for mobile robots operating in densely crowded indoor and unstructured outdoor environments. Dr. Sathyamoorthy holds a Ph.D. in electrical and computer engineering from the University of Maryland, College Park.

Kasun Weerakoon is a Ph.D. student in the Electrical and Computer Engineering Department at the University of Maryland, College Park, where he researches developing autonomous navigation algorithms using machine-learning techniques for mobile robots operating in complex outdoor environments.

Mohamed Elnoor is a Ph.D. student in the Electrical and Computer Engineering Department at the University of Maryland, College Park, where he researches autonomous robot navigation in challenging indoor and outdoor environments.

Jason Pusey is a senior mechanical engineer at the U.S. Army Combat Capabilities Development Command Research Laboratory, with 20 years of robotics experience. He is recognized as the U.S. Department of Defense’s subject matter expert on legged robotics, having led multiple programs advancing the state of the art of legged robotics. Mr. Pusey holds undergraduate and graduate degrees in mechanical engineering from the University of Delaware.

Dinesh Manocha is a Paul Chrisman-Iribe chair in the Computer Science, Electrical, and Computer Engineering Department and distinguished university professor at University of Maryland, College Park. His research interests include virtual environments, physically-based modeling, and robotics. He has published more than 750 papers and supervised 50 Ph.D. dissertations. He is a fellow of Association for the Advancement of Artificial Intelligence, American Association for the Advancement of Science, Association for Computing Machinery (ACM), and Institute of Electrical and Electronics Engineers and an National Academy of Inventors member of the ACM SIGGRAPH Academy. He received the B ́ezier Award from the Solid Modeling Association, the Distinguished Alumni Award from IIT Delhi, and the Distinguished Career Award from Washington Academy of Sciences. Dr. Manocha holds a B.Tech. from the Indian Institute of Technology, Delhi, and a Ph.D. in computer science from the University of California at Berkeley.