SUMMARY

Do we design autonomous systems or systems with autonomy? This article explores that question by first establishing a perspective on autonomy, separating the buzzwords from the reality, and then applying a robust and simple framework. The framework encapsulates and begins to decompose autonomy, autonomous behaviors, artificial intelligence (AI), and collaborative Manned-Unmanned Teaming (MUM-T) systems for the U.S. Department of Defense (DoD) customer.

This article progresses from conceptualizing autonomy, to introducing frameworks for analyzing autonomy, and concludes with a synthesized approach to designing autonomy into a system-of-systems (SoS) solution.

INTRODUCTION

Warfighters will find themselves operating in complex battlespaces against highly adaptive, multi-dimensional, and fully automated SoS. Joint all-domain warfare environments and the nascent mosaic warfare concept from the Defense Advanced Research Projects Agency (DARPA) demand system behaviors never experienced before. In these environments, interactions will be complex, adaptive, emergent, and highly unpredictable; future conflicts will be data driven, with extremely large numbers of manned and unmanned platforms in the mix. This will make human decision makers highly susceptible to information overload, even as vastly shortened kill chains and decision timelines put them under greater stress.

Under these conditions, autonomy-enabled teams of manned and unmanned systems will be a disruptive game changer, both as a threat and as a force multiplier for U.S. forces and their allies. As with other complex adaptive systems, a MUM-T team will not just be greater than the sum of its parts; it will be different from the sum of its parts and should be treated as such. MUM-T combinations and permutations will greatly exceed the performance capabilities of manned platforms alone. Warfare will be transformed and disrupted if the characteristic asymmetries of MUM-T are planned for and exploited wisely.

Lockheed Martin established a MUM-T integrated product team (IPT) of subject matter experts to tease apart the collaborative autonomy/MUM-T problem. The team was tasked with identifying techniques, tools, and methods of operations analysis to show the benefits of MUM-T. It was also challenged with two difficult problems: establishing a common language with which Warfighters, technologists, and engineers can communicate about these new and extraordinary capabilities, and discriminating among technologies by the unique operational behaviors that distinguish one autonomous approach from another.

To achieve these revolutionary capabilities, the systems designer must first step back to baseline, deconflict, and understand the complex environment of autonomous systems.

CONCEPTUALIZING AUTONOMY

The first obstacle to overcome was to establish a common foundation of autonomy. This required the team to establish first principles for autonomy to align definitions, create an ontology, and agree on appropriate semantics to fully conceptualize the problem space.

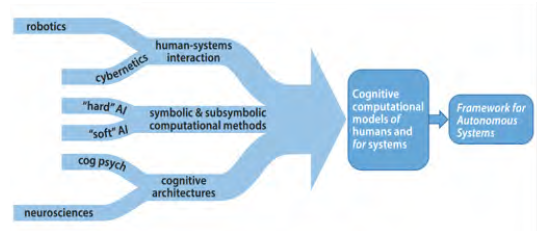

In “Autonomous Horizons – The Way Forward,” the chief scientist of the U.S. Air Force and team captured the complex relationships of the underlying functions and behaviors that autonomous systems achieve. Their graphic, as captured in Figure 1 [1], identifies that the solution space for autonomous systems is, by nature, polymathic, i.e., it requires knowledge spanning a significant number of subjects and draws on complex bodies of knowledge to solve its problems.

Figure 1: Research and Development (R&D) Streams Supporting Autonomous Systems (Source: Autonomous Horizons).

The multi-disciplinary problem was addressed in three ways. First, the team looked at consolidating an executable definition for autonomy. Next, the problem space was analyzed from a systems engineering perspective. Last, the conceptualization of autonomy had to be modular and composable into modeling and simulation and MUM-T configurations.

Defining Autonomy

Even a cursory look at defining autonomy was complicated by the perspective of the U.S. government customer: 10 major organizations (the U.S. Army, Navy, and Air Force; Secretary of Defense; DARPA; National Aeronautics and Space Administration; DoD Joint Procurement Office; National Institute of Standards and Technology [NIST]; Center for Naval Analysis; and the Defense Science Board) not only have different definitions of autonomy, but these definitions have also shifted from year to year.

To begin reducing this problem space, the team analyzed the definitions, visions, and mission statements of those organizations to distill a single definition. This analysis looked for key, encapsulating elements whose removal would change the nature of those statements. These elements established a taxonomy of characteristics and a common lexicon for discussion. The team then mapped the semantic ontology of those characteristics across the different definitions to identify the critical elements that maximized alignment with all the stakeholders. This distillation resulted in the following definition of autonomy:

Autonomy is a capability whereby an entity can sense and operate in its environment with some level of independence. An autonomous system may be comprised of automated functions with varying levels of decision making, and it may have adaptive capabilities, but these features are not required for a system to be autonomous.
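The mapping step that produced this definition can be pictured as counting how many source definitions contain each key element and keeping the elements with broad coverage. The following is a minimal Python sketch under stated assumptions: the organization names, element sets, and 50% coverage threshold are hypothetical placeholders, not the team's data or its actual method.

```python
from collections import Counter

# Hypothetical inputs: each definition reduced to the key elements whose
# removal would change the meaning of the statement.
definitions = {
    "org_a": {"sense", "operate", "independence", "decision making"},
    "org_b": {"sense", "independence", "adaptation"},
    "org_c": {"operate", "independence", "decision making"},
}


def rank_elements(defs: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Rank elements by how many definitions contain them (ties alphabetical)."""
    counts = Counter(element for elements in defs.values() for element in elements)
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


def core_elements(defs: dict[str, set[str]], min_share: float = 0.5) -> list[str]:
    """Keep elements that appear in at least `min_share` of the definitions."""
    total = len(defs)
    return [element for element, count in rank_elements(defs) if count / total >= min_share]


print(core_elements(definitions))
```

In this toy input, "adaptation" falls below the threshold, loosely echoing how the definition treats adaptive capabilities as optional rather than required.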

The team found this definition aligned with a simple heuristic used to conceptualize autonomy:

Autonomy is a gradient capability enabling the separation of human involvement from systems performance.

This heuristic, which does not exclude supporting technologies such as AI, avoids many of the academic pitfalls that the team refers to as the “No True Autonomy Fallacy,” a variant of the No True Scotsman Fallacy in which any real system can be dismissed as not “truly” autonomous by repeatedly raising the bar of the definition.

Conceptualizing autonomy as a capability enabling the separation of human involvement from systems performance shifts autonomy from being the end state to being an enabler of an end state. That end state is improved warfighting capability in situations where the human in the loop is a limiting factor. This enabling baseline led the team to the next consideration: the systems layers of autonomy.

SoS Approach to Autonomy

The Defense Science Board 2012 Autonomy Task Force Report identified a concern: “Autonomy is often misunderstood as occurring at the vehicle scale of granularity rather than at different scales and degrees of sophistication depending on the requirements” [2]. This insight is critical to the broader concept of autonomy. A self-driving car is a challenging problem to solve, requiring complex sensing, decision analysis, and heavy data processing in a dynamic environment. Imagine, however, moving some of those requirements into the super-system: the roads. Instead of relying on machine vision to identify and contextualize a stop sign, what if the stop sign announced itself and its context, informed by broader, current conditions? Intersections could control traffic flow, and the system requirements for the vehicle could be substantially reduced.

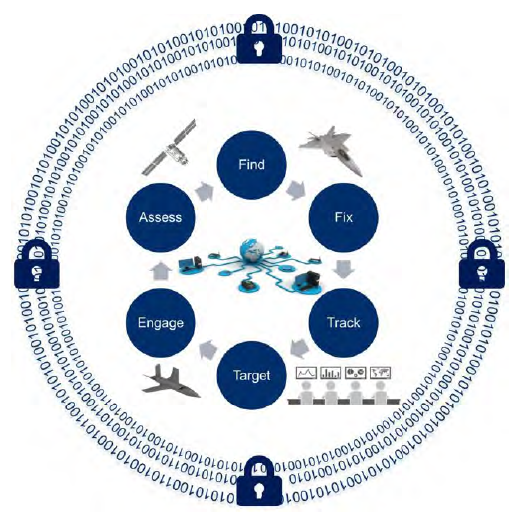

The team considered the SoS approach and identified three layers for consideration—the entity, integrated system, and system security layers (see Figure 2).

Figure 2: Autonomy Layers (Source: Lockheed Martin).

The entity layer is the vehicular scale. It is the “thing” being produced, and this layer focuses on making that system smarter, more networked, and more collaborative, with improved AI, etc. This level is discrete.

For example, the integrated system layer may focus on collapsing the kill chain, enabling highly complex AI mission planning and integration of the kill chain from detection through mission planning to weapon engagement.

System security becomes a consideration as technologies become more interconnected. Additional cyberattack surfaces emerge with collaborative weapons, networked sensors, and remote kill chains, with new connections added to legacy equipment. The system security layer is critical to conceptualization. Autonomous technologies create both unique cybersecurity implications and opportunities to apply autonomous technologies to system security concerns, such as real-time monitoring and resilient security through AI-based cyberdefense. This layer focuses on improving trust in autonomous system integrity.

These three SoS layers push beyond the vehicular scale and include the larger implications of the integrated systems and systems security. They become crucial in considering how to measure autonomy in systems design.

The Dimensions of Autonomy

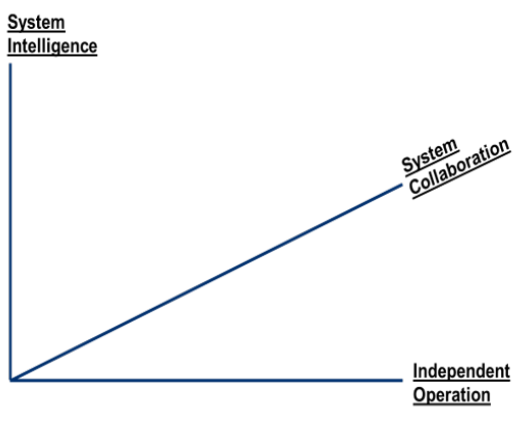

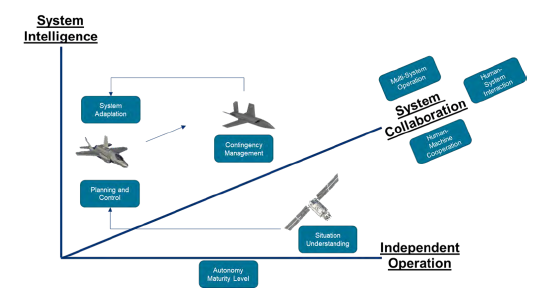

The final consideration for conceptualizing autonomy is tying the definition and the SoS views into a method to measure the autonomy implications of MUM-T. The team initially struggled to identify the critical concepts to measure when crafting systems’ interactions, before three axes emerged (see Figure 3).

Figure 3: Three Dimensions of Autonomy (Source: Lockheed Martin).

The first axis is Independent Operation, which captures the degree to which a system relies on human interaction and measures the separation of human involvement from systems performance. This scale ranges from completely manual to completely autonomous systems. It is on this scale that the independence of action of the entity or platform is measured.

The second axis is System Intelligence, which identifies the degree to which a system can process the environment to compose, select, and execute decisions. This also includes concepts referred to as AI and methods like machine learning (ML) that allow a system to perform complex computations and behaviors aligned with cognitive science.

Measures of System Intelligence against Independent Operation can capture ideas like autonomy at rest (high intelligence and low independence) and autonomy in motion (variable intelligence with higher independence). This X/Y scale also captures automated functions (low intelligence and high independence) and allows comparing automation vs. autonomy.

The third and final axis is System Collaboration, which is the degree to which a system partners with humans and other systems. This axis identifies MUM-T behavior considerations and interrelationships between systems and within an SoS view. Key in the development of these dimensions is the ability to map both humans and machines on the axes.
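To make the X/Y comparison concrete, a system can be recorded as a point on the three dimensions and then read coarsely on the Independent Operation versus System Intelligence plane. This is a minimal sketch, not a Lockheed Martin tool: the [0, 1] normalization, the 0.5 threshold, and the "largely manual" label for the low/low quadrant are assumptions, and "autonomy in motion" is simplified to high independence with high intelligence even though the text allows variable intelligence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyPoint:
    """Position on the three dimensions, each normalized to [0, 1] (an assumption)."""
    independence: float   # Independent Operation
    intelligence: float   # System Intelligence
    collaboration: float  # System Collaboration

    def __post_init__(self) -> None:
        for name in ("independence", "intelligence", "collaboration"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")


def classify(point: AutonomyPoint, threshold: float = 0.5) -> str:
    """Coarse reading of the Independent Operation / System Intelligence plane."""
    high_independence = point.independence >= threshold
    high_intelligence = point.intelligence >= threshold
    if high_intelligence and not high_independence:
        return "autonomy at rest"
    if high_independence and not high_intelligence:
        return "automated function"
    if high_independence and high_intelligence:
        return "autonomy in motion"
    return "largely manual"  # assumption: the text does not name this quadrant


print(classify(AutonomyPoint(independence=0.2, intelligence=0.9, collaboration=0.1)))
```

Collaboration is carried in the point but deliberately ignored by `classify`, mirroring the two-axis comparison of automation versus autonomy described above.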

Conceptualizing Autonomy Summary

To summarize conceptualizing autonomy, the team applied a definition of autonomy as a gradient capability enabling the separation of human involvement from systems performance. This capability can be applied to an entity, integrated systems, and systems security layering to ensure a holistic, SoS autonomy perspective. This SoS view can be mapped along three dimensions of Independent Operation, System Intelligence, and System Collaboration for further analyses and to design autonomous systems.

ANALYZING AUTONOMY

Based on the foundations established in conceptualizing autonomy, the team developed frameworks to analyze autonomy. This activity was born out of a need for a framework, taxonomy, or structure to study MUM-T operationally. What was needed could not be found in the literature and, apparently, had not been done previously, or at least had not been published. With little theoretical basis on which to formulate and analyze the unique considerations of MUM-T, the team set out to build the structure itself. The goal was to establish the first principles of autonomy and a taxonomy of elements related to all autonomous systems. The structure is scalable and flexible to meet the needs of operational researchers, engineers, and Warfighters seeking to qualify and quantify solutions to problems concerning autonomous development and MUM-T.

The following four frameworks were developed:

- Autonomous behavior characteristics (ABCs): capabilities organic to the autonomous system.

- Operational: visually represents how the system is organized.

- Environmental: captures the context/environment the system operates in.

- Trust: assures autonomy.

The next sections will further detail these frameworks for analysis, their origins, and alignment with the conceptualization of autonomy.

Framework 1: ABCs

ABCs capture mission architecture considerations for how a system processes, interacts, and teams in an environment. These characteristics and associated scales are intended to be used as a heuristic rather than as an explicitly quantified relationship. They describe the relationships between entities, the outcomes of teaming, and the intelligence required to achieve customer requirements. These characteristics and associated maturities simplify the complex design space inherent in MUM-T.

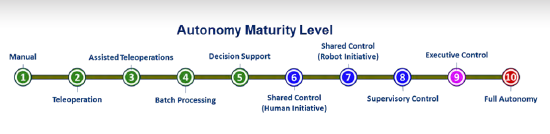

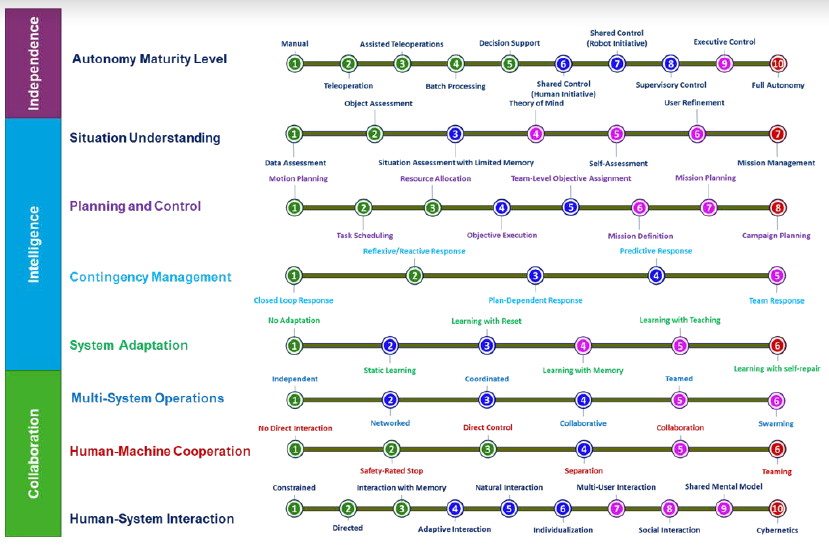

The overall autonomy maturity level, shown in Figure 4, establishes the format for each of the dimensions of autonomy. The autonomy maturity level is on an ordinal scale where each higher level subsumes and expands upon the capabilities of the level below it. That is, level 1 (manual), where 100% of operations are controlled by a human, has the least autonomous capability, while level 10 (full autonomy) reflects the most. A system with a higher level number than another system has more autonomous capability.

Figure 4: Autonomous Behavior Characteristics for Independent Operation (Source: Lockheed Martin).

The colors of the bullets at each level estimate the maturity of implementations of systems at the time of publication—as items mature, the method still applies. Green bullets indicate levels of capability that most fielded systems have demonstrated in general. Blue bullets indicate levels of capability that have not been fielded in most systems in a general way. However, some systems exhibiting that capability have been fielded, and/or fielded systems have exhibited that capability but in a limited or constrained way. Purple bullets indicate levels of capability that have not been widely fielded, but the technology for that level of capability is under development, and the basic principles have been explored and understood. Red bullets reflect levels of capability that have currently not been achieved in a generalized way and whose constituent properties, including behaviors, are not yet fully understood. These levels can generally be compared to technology readiness levels (TRLs).
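The two properties described above, an ordinal scale whose levels subsume the ones below and a color code for field maturity, can be captured in a few lines. This is an illustrative sketch only: apart from level 1 (manual) and level 10 (full autonomy), the capability labels are placeholders, and the per-level color annotations in the example are hypothetical.

```python
from enum import IntEnum
from typing import Optional

LOWEST, HIGHEST = 1, 10  # level 1 = manual, level 10 = full autonomy


def capabilities(level: int) -> set[str]:
    """Capabilities accumulate: each level subsumes every level below it.

    Only levels 1 and 10 are named in the text; the other labels are placeholders.
    """
    if not LOWEST <= level <= HIGHEST:
        raise ValueError(f"maturity level must be in {LOWEST}..{HIGHEST}")
    labels = {LOWEST: "manual operation by a human", HIGHEST: "full autonomy"}
    return {labels.get(n, f"placeholder capability of level {n}") for n in range(LOWEST, level + 1)}


class FieldMaturity(IntEnum):
    """Bullet colors, ordered from least to most mature."""
    RED = 0     # not achieved in a generalized way; properties not fully understood
    PURPLE = 1  # under development; basic principles explored and understood
    BLUE = 2    # fielded only in some systems, or in a limited or constrained way
    GREEN = 3   # demonstrated by most fielded systems


def highest_level_at(annotations: dict[int, FieldMaturity], at_least: FieldMaturity) -> Optional[int]:
    """Highest level whose bullet is at least as mature as `at_least`, if any."""
    levels = [level for level, maturity in annotations.items() if maturity >= at_least]
    return max(levels, default=None)


# Hypothetical color annotations for one characteristic.
example = {1: FieldMaturity.GREEN, 2: FieldMaturity.GREEN, 3: FieldMaturity.BLUE,
           4: FieldMaturity.PURPLE, 5: FieldMaturity.RED}
print(highest_level_at(example, FieldMaturity.GREEN))
```

Because the scale is ordinal, the sketch only compares levels; it never averages or subtracts them, since the distance between adjacent levels is not defined.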

The ABCs align with the three dimensions of autonomy of Independent Operations, System Intelligence, and System Collaboration (see Figure 5).

Figure 5: Autonomous Behavior Characteristics (Source: Lockheed Martin).

Independent Operations

At the top level, the framework establishes an autonomy maturity level. This top-level view is derived from the Levels of Robotic Autonomy scale developed by Dr. Jenay Beer at University of Georgia and is based on a synthesis of ideas that combines aspects of many previous scales, including the original autonomy levels for unmanned systems (ALFUS) work [3]. This autonomy maturity-level model captures the system’s overall ability to perform tasks in the world without explicit external control.

System Intelligence

System Intelligence contains four behavior characteristics—Situation Understanding, Planning and Control, Contingency Management, and System Adaptation.

Situation Understanding encompasses the sensing, perception, and processing to create a semantic and cognitive representation of the environment, mission, the system itself, and its teammates and adversaries. Situation Understanding goes beyond situational awareness in that it enables action to be taken. This measure reflects the observe and orient components of the observe, orient, decide, act (OODA) loop.

Situation Understanding is the synthesis of two existing models—the data fusion information group’s levels of data fusion [4] and Mica Endsley’s [5] levels of situation awareness.

Planning and Control provides the ability to plan and manage the execution of autonomous actions within a mission implementing the decide and act portions of the OODA loop.

Contingency Management adds the detection and reaction capability to unplanned events that affect mission success. Contingency Management works in parallel with the Mission Planning component to generate an effective response to contingencies either known and anticipated to some degree or unknown altogether. This scale was derived from Franke et al. in 2005 [6], which described the first-developed, holistic approach to contingency management for autonomous systems, referred to as the Lockheed Martin Mission Effectiveness and Safety Assessment [7].

System Adaptation is tightly integrated with the Contingency Management and Planning and Control functions. An autonomous system with adaptive capability responds to environmental changes or changes in its internal functioning and modifies its structure or functionality appropriately to its circumstances. A major assumption in system adaptation is that the system can make the necessary adaptations.

In analyzing the ABCs for orthogonality, the team was unable to cleanly separate these behaviors, or to consolidate or split them, while still retaining a common ontology with the research and academic communities. Instead, there is a natural relationship between the System Intelligence ABCs:

- Situation Understanding can exist on its own.

- Planning and Control must inherit Situation Understanding to develop a plan.

- Contingency Management requires a plan to identify a contingency.

- System Adaptation occurs when contingencies exceed what the original plan can manage, requiring an adaptation down the relationship chain.
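The dependency chain above can be checked mechanically against a proposed ABC profile. A minimal sketch, assuming for illustration that a level of 0 (or a missing entry) means a characteristic is absent; the text states only that each characteristic depends on the previous one, not how levels must relate.

```python
# Prerequisite chain among the System Intelligence ABCs, as listed above.
PREREQUISITE = {
    "Planning and Control": "Situation Understanding",
    "Contingency Management": "Planning and Control",
    "System Adaptation": "Contingency Management",
}


def chain_violations(profile: dict[str, int]) -> list[str]:
    """List characteristics that are present while their prerequisite is absent.

    Assumption for this sketch: a level of 0 (or a missing entry) means absent.
    """
    problems = []
    for characteristic, prerequisite in PREREQUISITE.items():
        if profile.get(characteristic, 0) > 0 and profile.get(prerequisite, 0) == 0:
            problems.append(f"{characteristic} requires {prerequisite}")
    return problems


print(chain_violations({"Situation Understanding": 2, "Contingency Management": 1}))
# ['Contingency Management requires Planning and Control']
```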

System Collaboration

Multi-System Operation measures multi-agent and distributed interactions between unmanned machines, electronic agents, and platforms, such as multiple unmanned and AI systems, including autonomy-at-rest systems. This characteristic measures machine-to-machine collaboration, in which autonomous systems can operate together in many ways, and seeks to provide a scale for those interactive capabilities. Although it is an unusual source for a scale related to autonomous systems working together, this scale is adapted from literature produced by the Oregon Center for Community Leadership and later adopted by the Amazon Web Services group, which defined levels 1–5 [8].

Human-System Interaction captures the complexity of direct communication and other interactions between an autonomous system and the human(s) controlling, supervising, or teaming with it. At least three domains contribute to human-system interaction (cognitive science, systems engineering, and human factors engineering), and the scale reflects contributions of each in terms of capability. The IPT derived this scale after determining no scale in the literature captured the depth and breadth of interaction that autonomous systems might support.

Human-Machine Cooperation measures the degree to which the human and unmanned system work together in the same environment. This reflects not only the relationship between the system and the person controlling it, but also between the system and other people in its environment. This scale is adapted from one published by the Nachi Robotic Systems Corporation [9]. At its lowest level, humans and systems do not directly interact, except possibly through a remote interface. At each successive level, the richness of how the system and humans coexist increases.

Tying these ABCs together along the three dimensions of autonomy gives the analyst a measure of SoS implications, interactions, and relationships and allows autonomy to be analyzed beyond the vehicular scale alone, as shown in Figure 6.

Figure 6: ABCs Along the Three Dimensions of Autonomy (Source: Lockheed Martin).

In this example, a highly independent, yet low-intelligence sensor system achieves Situation Understanding and passes it to a less-independent, more-intelligent battle management system to achieve Planning and Control. High-fidelity mission plans are collaboratively communicated to a weapon system, which is balanced on Intelligence and Independence and empowered for Contingency Management. System Adaptation is achieved by closing the loop from the weapon system back to the integrated system, allowing updates to the data set for later analysis and modification of the system configuration to suit current operational and/or environmental conditions. The System Collaboration dimension measures and analyzes the relationship arrows between these three assets from the Multi-System Operation, Human-System Interaction, and Human-Machine Cooperation perspectives.
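The example can be written down as a small directed graph: assets annotated with their positions and contributing ABC, and links that the System Collaboration dimension would then analyze. This is a hedged sketch, not the article's model; the qualitative labels paraphrase the text, and routing the feedback link to the battle management asset is an assumption, since the text says only that the loop closes back to the integrated system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    independence: str  # qualitative position on Independent Operation
    intelligence: str  # qualitative position on System Intelligence
    abc: str           # System Intelligence ABC the asset contributes


ASSETS = [
    Asset("sensor", "high", "low", "Situation Understanding"),
    Asset("battle management", "lower", "higher", "Planning and Control"),
    Asset("weapon", "balanced", "balanced", "Contingency Management"),
]

# (source, destination, what flows); each arrow is a System Collaboration relationship.
LINKS = [
    ("sensor", "battle management", "situation understanding"),
    ("battle management", "weapon", "mission plan"),
    ("weapon", "battle management", "feedback enabling System Adaptation"),  # assumed endpoint
]


def has_feedback_loop(links: list[tuple[str, str, str]]) -> bool:
    """Depth-first search for a cycle, i.e., a closed loop among the assets."""
    graph: dict[str, list[str]] = {}
    for source, destination, _ in links:
        graph.setdefault(source, []).append(destination)
    on_path: set[str] = set()
    finished: set[str] = set()

    def visit(node: str) -> bool:
        if node in on_path:
            return True  # returned to a node on the current path: a loop
        if node in finished:
            return False
        on_path.add(node)
        if any(visit(nxt) for nxt in graph.get(node, [])):
            return True
        on_path.remove(node)
        finished.add(node)
        return False

    return any(visit(node) for node in list(graph))


print(has_feedback_loop(LINKS))
```

Dropping the feedback link leaves an open chain with no loop, which corresponds to a configuration without System Adaptation in this example.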

Our ABCs, layered on the three dimensions of autonomy, create the first, and most important, framework for analyzing customer requirements across an SoS view, with autonomy as an enabler.

Framework 2: Operational

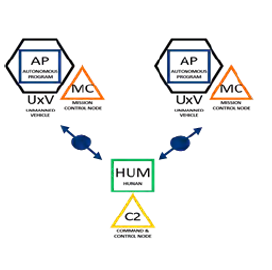

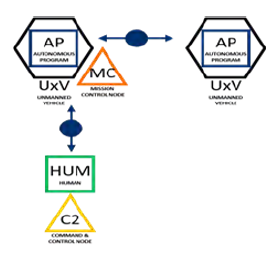

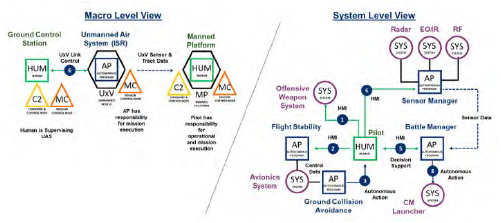

The Operational framework for autonomy provides a tool with which to depict MUM-T configurations assigned to complex warfighting tasks. The Operational framework tool provides the means to build a visual representation of any MUM-T configuration and clearly identify key differences between autonomous systems. The objectives of the Operational framework are to provide a method to identify common elements of autonomous system mission configurations and how the parts can be combined to create different operational effects; describe common operational approaches, techniques, and technology requirements of MUM-T; and develop an autonomous systems view that clearly identifies the degree of autonomous operations and where the human decision maker resides in the overall scheme of the mission.

Control elements are represented as color-coordinated squares, either human (HUM) or autonomous program (AP). System elements are represented as color-coordinated circles, and command and control (C2) elements are color-coordinated triangles. Vehicles are depicted as control elements enclosed in a black hexagon. Information transfer elements are directional arrows, either solid or dashed, labeled with a number indicating the autonomy level. A solid line indicates that a direct link exists between team members, representing a common command and control relationship. A dashed line indicates that an indirect link exists between team members; while they may be able to share information, they are unable to influence their team members’ operational or tactical orders. Four examples of Operational views are displayed in Figures 7–10.
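The notation above maps naturally onto a small data model, which is one way such configurations could be stored before being drawn or exported. A sketch under assumptions: the class names, the element and vehicle names, and the autonomy-level numbers in the example are hypothetical; only the element kinds, shapes, and solid/dashed link semantics come from the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Kind(Enum):
    HUM = "human control element"
    AP = "autonomous program control element"
    SYSTEM = "system element"
    C2 = "command and control element"


# Drawing shape for each element kind, following the notation described above.
SHAPE = {Kind.HUM: "square", Kind.AP: "square", Kind.SYSTEM: "circle", Kind.C2: "triangle"}


@dataclass(frozen=True)
class Element:
    name: str
    kind: Kind
    vehicle: Optional[str] = None  # control elements inside a vehicle's black hexagon


@dataclass(frozen=True)
class Link:
    source: str
    destination: str
    autonomy_level: int
    direct: bool  # solid: common C2 relationship; dashed: information sharing only

    @property
    def line_style(self) -> str:
        return "solid" if self.direct else "dashed"


def can_influence_orders(links: list[Link], source: str, destination: str) -> bool:
    """Only a direct (solid) link lets one member influence another's orders."""
    return any(link.source == source and link.destination == destination and link.direct
               for link in links)


# Hypothetical configuration: one human and two autonomous programs.
elements = [Element("pilot", Kind.HUM, vehicle="manned aircraft"),
            Element("ap-1", Kind.AP, vehicle="uncrewed aircraft 1"),
            Element("ap-2", Kind.AP, vehicle="uncrewed aircraft 2")]
links = [Link("pilot", "ap-1", 4, direct=True), Link("ap-1", "ap-2", 6, direct=False)]
print(can_influence_orders(links, "ap-1", "ap-2"))
```

Keeping HUM and AP as distinct kinds that share a shape preserves the distinction the notation draws through color, which a shape-only model would lose.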

In Figure 7, members plan and execute separately against separate goals and objectives but coordinate to resolve conflicts. In Figure 8, members plan against common sets of goals but execute against separate objectives. Goals are separated by time or space. Objectives can be transferred from one team member to another but never simultaneously shared. In Figure 9, members belong to one system interdependently. Except for platform control, autonomy exists at the swarm level rather than the individual level. Individual plans do not exist. Decision making is shared and typically by consensus. In Figure 10, members plan and act in a coordinated fashion throughout the mission and can perform tightly coordinated actions together.

Figure 7: Coordinated (Source: Lockheed Martin).

Figure 8: Collaborative (Source: Lockheed Martin).

Figure 9: Swarming (Source: Lockheed Martin).

Figure 10: Teamed (Source: Lockheed Martin).

In addition to modeling specific use cases, the Operational framework also allows users to combine elements to represent complex autonomous designs at two or more levels. The macro level view shows a depiction of the high-level interactions between team members. This view simplifies many of the underlying autonomous elements to focus on the interactions between manned and unmanned platforms and their control stations (see Figure 11).

Figure 11: Macro vs. Systems Views (Source: Lockheed Martin).

Figure 12: Environmental Framework Examples (Source: Lockheed Martin).

The system-level view, on the other hand, provides a more comprehensive and detailed look of the autonomous teams to represent how autonomy is used at the system level within a specific platform and how the subsystems are integrated in the overall configuration scheme.

The Operational framework simplifies discussion with customers. It builds off the autonomous behavior characteristics and visualizes and clarifies the SoS considerations of MUM-T configurations. This framework is scalable from the integrated system down into subsystem considerations and quickly communicates basic functional architecture for determining requirements.

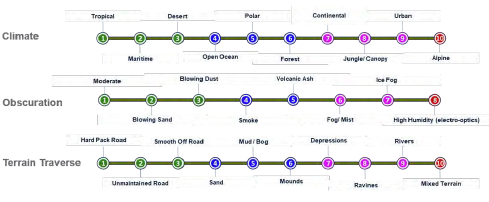

Framework 3: Environmental

The Environmental framework addresses the use of autonomy under multiple environmental conditions, such as weather, location, threats, domains, C2, communications, etc. This framework helps identify the capabilities, constraints, and limitations of specific capabilities across multiple implementations and sets the analysis boundary to successfully determine subsystem characteristics (see Figure 12). It aligns along the DoD’s mission, enemy, terrain, troops, time, and civilian considerations concept and intends to capture the spectrum of activities that have influence to the MUM-T environment.

In concert with the ABC framework, environmental considerations can add complexity to the behaviors needed to meet system needs. For example, a system traveling less than 2 km on maintained rural roads in a logistics support region faces a much less complicated problem than one traveling 50 km over off-road, mixed terrain at the forward edge of battle. These environmental considerations are consistent with traditional systems analysis, but when paired with the ABC and Operational frameworks, they help clarify the substantial complexity of autonomous systems.
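One way to compare environments like the two in this example without inventing weights is Pareto dominance: environment A is at least as demanding as B only if it is no easier on every factor. A minimal sketch, assuming hypothetical ordinal difficulty scores for terrain and threat; the factor set and score values are illustrative, not part of the Environmental framework.

```python
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class Environment:
    """Hypothetical difficulty scores per factor; higher means harder."""
    distance_km: float
    terrain: int  # e.g., 0 = maintained road ... 2 = off-road, mixed terrain
    threat: int   # e.g., 0 = logistics support region ... 2 = forward edge of battle


def at_least_as_demanding(a: Environment, b: Environment) -> bool:
    """True if `a` is no easier than `b` on every factor (Pareto dominance)."""
    return all(x >= y for x, y in zip(astuple(a), astuple(b)))


rear_area = Environment(distance_km=2, terrain=0, threat=0)
forward_edge = Environment(distance_km=50, terrain=2, threat=2)
long_rear_route = Environment(distance_km=60, terrain=0, threat=0)
print(at_least_as_demanding(forward_edge, rear_area))
```

Some pairs, such as a long rear-area route versus a shorter forward-edge mission, are incomparable under this rule; those are exactly the cases where an explicit trade analysis with the ABC and Operational frameworks is needed.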

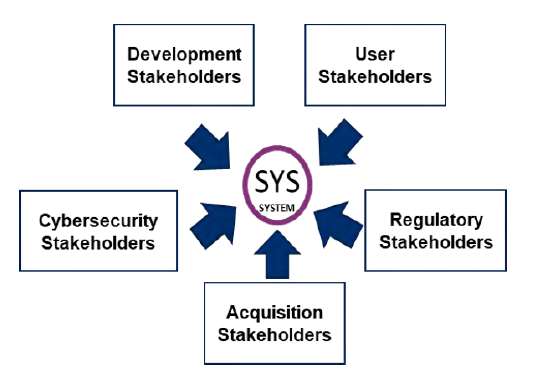

Framework 4: Trust

The Trust framework allows tailoring for each autonomy design application, drives analysis of the unique stakeholder considerations for design, and supports data capture to identify trust trends and consensus between stakeholders. Five major stakeholder groups emerged for consideration with this framework: Development, User, Cybersecurity, Acquisition, and Regulatory (see Figure 13). Their key questions are as follows:

- Development: Does the system do what it was designed to do (verification measures)?

- User: Will the system do what is expected (transparency/explanation/usability measures)?

- Cybersecurity: What are the unique cybersecurity risks with autonomy (system assurance and security)?

- Acquisition: Does the system do what was requested (validation measures)?

- Regulatory: Will the system become a menace (ethics/reliability/resilience measures)?

Figure 13: Trust Framework (Source: Lockheed Martin).

The Trust framework allows the autonomy space to be constrained by what is allowable, desirable, and usable by stakeholders. This framework analyzes trust from multiple perspectives to understand design, test, and implementation trade spaces and captures stakeholder expectations and assumptions. The Trust framework focuses on balancing regulatory, human factors, and performance considerations and ties existing trust considerations together, such as the recently proposed DoD principles for AI of being responsible, equitable, traceable, reliable, and governable [10].
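Capturing trust data per stakeholder group makes gaps visible at a glance. The sketch below records the five groups with their questions and flags where an assessed trust level falls short of the required one. The numeric levels are hypothetical ordinal scores introduced for illustration; the Trust framework itself does not prescribe a scale.

```python
# The five stakeholder groups and the question each asks of the system.
STAKEHOLDER_QUESTIONS = {
    "Development": "Does the system do what it was designed to do?",
    "User": "Will the system do what is expected?",
    "Cybersecurity": "What are the unique cybersecurity risks with autonomy?",
    "Acquisition": "Does the system do what was requested?",
    "Regulatory": "Will the system become a menace?",
}


def trust_gaps(required: dict[str, int], assessed: dict[str, int]) -> list[str]:
    """Stakeholder groups whose assessed trust level is below the required level.

    Levels are hypothetical ordinal scores, so only comparisons are used;
    a missing assessment is treated as 0 (no evidence yet).
    """
    return [
        group
        for group in STAKEHOLDER_QUESTIONS
        if assessed.get(group, 0) < required.get(group, 0)
    ]


required = {group: 3 for group in STAKEHOLDER_QUESTIONS}
assessed = {"Development": 4, "User": 2, "Cybersecurity": 3, "Acquisition": 3}
print(trust_gaps(required, assessed))  # ['User', 'Regulatory']
```

Treating a missing assessment as a gap, rather than skipping it, keeps stakeholders who have not yet been consulted from silently dropping out of the analysis.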

Analyzing Autonomy Summary

The four frameworks reviewed allow the analysis of autonomy to govern systems design, beginning with the conceptualization of autonomy as an enabler for improved systems performance. The Trust and Environmental frameworks provide additional context for analyzing the design trade space created by different applications of the ABCs and the Operational framework. Considering all four frameworks together supports a holistic architecture for structuring future systems engineering of autonomous capabilities.

The team developed these frameworks within the same time frame as the Office of the Chief Scientist of the U.S. Air Force [1]. A comparison of the two frameworks finds comfortable synergy and shows that their major tenets do not conflict. The consistency between the frameworks of two independent efforts supports the validity of the approach and indicates that tailoring for an organization’s structure and culture is achievable without losing context in the complex, cross-disciplinary field of autonomous systems.

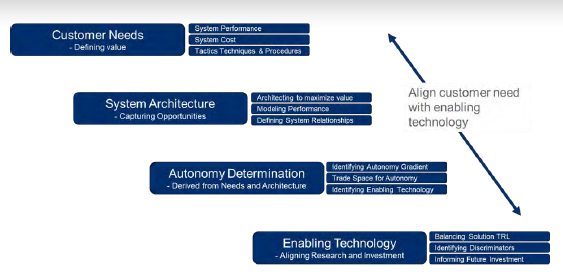

SYSTEMS ENGINEERING FOR AUTONOMY

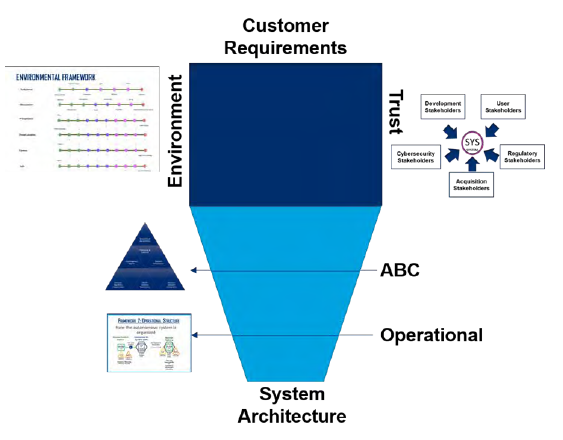

Systems engineering for autonomy should begin only once a solid conceptualization and analysis capability is established. Too often, engineering begins before the situation is fully understood. Figure 14 identifies the systems engineering flow, beginning with understanding the customer needs in terms of performance, cost, etc. The flow then moves through the systems architecture, after which the specific autonomous capabilities, and the enabling technologies they align to, are determined.

Figure 14: Aligning From Customer Need to Enabling Technology (Source: Lockheed Martin).

Ensuring this flow connection allows balancing TRLs and identifying discriminators and future investments. The flow back to customer requirements occurs when enabling technologies are out of sync with customer requirements—like schedule and cost—and ensures a balanced, aligned, and deliverable solution.

Executing this flow aligns the four autonomy frameworks, as captured in Figure 15. Starting with the customer requirements, we can bracket the design space by understanding the environment the system is expected to operate in against what the stakeholders will trust the system to do in that environment. Once that space is defined, the SoS analysis of the ABCs against the three dimensions of autonomy allows identifying the initial concepts of operations, systems architecture, and subsystem considerations. Capturing these relationships in the Operational framework allows easy systems and subsystems trades to align technology, reduce complexity, and support initiatives, such as Modular Open Systems Architecture.

Figure 15: Systems Engineering of Autonomy Interrelationships (Source: Lockheed Martin).
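The bracketing step can be read as intersecting two bounds per ABC: a floor set by what the environment demands and a ceiling set by what stakeholders will trust. The following is a minimal sketch under that reading; the floor and ceiling values are hypothetical, and an empty bracket is treated as a conflict to send back to customer requirements, in the spirit of the flow-back described earlier.

```python
def bracket(floor: dict[str, int], ceiling: dict[str, int]) -> dict[str, range]:
    """Feasible levels per ABC: at least what the environment demands (floor)
    and at most what stakeholders will trust (ceiling). Both dicts must share keys."""
    if floor.keys() != ceiling.keys():
        raise ValueError("floor and ceiling must cover the same ABCs")
    return {abc: range(floor[abc], ceiling[abc] + 1) for abc in floor}


def conflicts(brackets: dict[str, range]) -> list[str]:
    """ABCs with an empty bracket: the environment demands more than is trusted."""
    return [abc for abc, levels in brackets.items() if len(levels) == 0]


# Hypothetical requirements for two ABCs.
environment_floor = {"Contingency Management": 3, "Human-System Interaction": 5}
trust_ceiling = {"Contingency Management": 6, "Human-System Interaction": 4}
design_space = bracket(environment_floor, trust_ceiling)
print(conflicts(design_space))  # ['Human-System Interaction']
```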

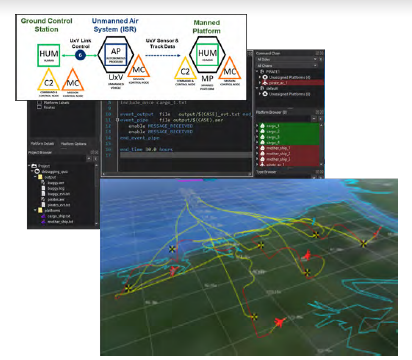

A final benefit of this design flow is the ability to easily translate the Operational configurations into mission-level modeling environments, such as the Advanced Framework for Simulation, Integration, and Modeling (AFSIM), and into systems modeling languages, such as SysML and UML (see Figure 16). By using the Operational framework and leveraging the ABC levels as trade space brackets, experiments can be conducted in which trades between environment, trust, and architecture are quantified, and modeling of enabling technologies can validate design assumptions. Further, these frameworks foster proactive verification and validation (V&V) engagement in the modeling and simulation environment, which informs subsequent detailed design, integration, and test.

Figure 16: Operational Framework to AFSIM (Source: Lockheed Martin).
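Using ABC levels as trade space brackets suggests a straightforward experiment design: enumerate every combination of levels within the brackets and run each as a separate simulation case. The sketch below shows only that enumeration (a full-factorial design); it does not interface with AFSIM or any modeling tool, and the bracket values are hypothetical.

```python
from itertools import product
from typing import Iterator


def trade_cases(brackets: dict[str, range]) -> Iterator[dict[str, int]]:
    """Yield every combination of levels within the brackets (full factorial)."""
    names = sorted(brackets)
    for levels in product(*(brackets[name] for name in names)):
        yield dict(zip(names, levels))


cases = list(trade_cases({"Planning and Control": range(2, 4),
                          "Multi-System Operation": range(1, 3)}))
for case in cases:
    print(case)  # each case would become one simulation run
```

The number of cases grows multiplicatively with each bracketed ABC, so with many characteristics a fractional or sampled design would likely be needed in place of full enumeration.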

The frameworks and design process outlined here provide a rich set of tools for describing the autonomy of a system. Together, the artifacts produced could establish a potential ninth viewpoint to serve as an adjunct to the DoD Architecture Framework [11]. Current experiments with the design process have defined an autonomy viewpoint (AUV) consisting of the following:

- AUV-1: Intelligence, collaboration, and independence diagram. A three-dimensional view of autonomy configurations in Cartesian space reflecting system intelligence, system collaboration, and independent operation (the three dimensions of autonomy). This view can be used to compare canonical examples to new development concepts as a way to measure complexity and relationships.

- AUV-2: Operational framework system view. A top-level operational configuration view showing interaction between platforms, autonomous program(s), humans, and C2/mission control functions.

- AUV-3: Authority allocation. A table listing allocation of task responsibilities between humans and autonomous systems.

- AUV-4: ABC diagram. The eight ABCs describing the level of behavior in each dimension for system mission requirements.

- AUV-5: Operational framework expanded view. A detailed operational configuration showing interaction among subcomponents of the platform, autonomous programs, humans, and C2/mission control functions.

- AUV-6: Environmental view. The N-dimensional environment characteristics that capture environmental context for the system’s operations.

- AUV-7: Trust view. The five-dimensional trust characteristics that describe the level of trust required by each major stakeholder.

These potential autonomy views further demonstrate the applicability of these frameworks to standard systems architecture and systems engineering processes, templates, and tools.
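Two of these views lend themselves to simple data representations. The sketch below treats an AUV-1 configuration as a point in the three-dimensional intelligence, collaboration, and independence space and, as one possible way to compare new concepts with canonical examples, uses Euclidean distance to find the nearest canonical example. It also holds an AUV-3 authority allocation as a task-to-owner mapping. The 0 to 5 scale, the canonical examples, the task names, and the choice of Euclidean distance are all hypothetical assumptions rather than part of the viewpoint definitions.

```python
import math

# AUV-1 (hypothetical 0-5 scale per axis): (intelligence, collaboration, independence)
CANONICAL = {
    "teleoperated vehicle": (0, 0, 0),
    "waypoint-following drone": (1, 0, 2),
    "cooperative swarm": (3, 5, 4),
}


def nearest_canonical(concept, canonical=CANONICAL):
    """Return (name, distance) of the canonical example closest to the concept point."""
    name = min(canonical, key=lambda k: math.dist(concept, canonical[k]))
    return name, math.dist(concept, canonical[name])


# AUV-3: hypothetical allocation of task responsibilities between humans and autonomy.
AUTHORITY = {
    "route planning": "autonomy",
    "target identification": "shared",
    "engagement decision": "human",
}


def tasks_owned_by(owner, allocation=AUTHORITY):
    """List tasks allocated to a given owner ('human', 'autonomy', or 'shared')."""
    return sorted(task for task, who in allocation.items() if who == owner)


print(nearest_canonical((1, 1, 2)))  # ('waypoint-following drone', 1.0)
print(tasks_owned_by("human"))       # ['engagement decision']
```

Keeping the authority allocation as data, rather than prose, would let the same table be checked against the Operational framework views or exported into a modeling tool.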

The autonomy system architecture frameworks are designed for iterative use: customer requirements, trust, and environment define the trade space dimensions, while the autonomous behavior characteristics and the Operational framework provide design opportunities. Modeling the architectures against the requirements, environment, and trust provides feedback for design improvements. Multiple iterations within these frameworks are expected in order to best balance customer expectations, schedule, scope, and budget of system designs.

CONCLUSIONS

A clear, concise, and applicable foundation for analysis of autonomous systems emerged, starting with conceptualizing autonomy as a gradient capability that enables the separation of human involvement from system performance. Building from there, autonomous designs centered on an SoS approach and focused on system security ensured optimal designs. Lastly, collaborative trades can be achieved through the three dimensions of intelligence, independence, and collaboration. From this foundation, the following three analysis steps emerged:

- Applying the four analysis frameworks for autonomy, from the Trust and Environmental frameworks to the ABCs, and then visualizing the results with the Operational framework provides a holistic and exhaustive structure for viewing the unique interactions and complexities of autonomous relationships.

- Aligning the Conceptualization and Analysis frameworks to the systems engineering process produces iterative designs with clarified trade spaces, which empower analysts to quickly translate design architectures into modeling and simulation programs and perform quantified analysis.

- Leveraging the autonomous system conceptualization, analysis, and design capabilities strengthens the systems engineering toolkit for achieving revolutionary capabilities: stepping back to baseline, deconflict, and understand the complex environment of autonomous systems, and then applying a synthesized approach to designing autonomy into an SoS solution.

Lockheed Martin continues to mature this structure for conceptualizing, analyzing, and designing autonomy into a systems solution. Continued collaboration with the DoD, industry partners, and academic institutions provides opportunities for spiral development of MUM-T solutions to create disruptive game changers for U.S. forces and their allies.

REFERENCES

- Zacharias, G. L. “Autonomous Horizons – The Way Forward.” Office of the U.S. Air Force Chief Scientist. Air University Press, p. 27, March 2019.

- Defense Science Board. “Task Force Report: The Role of Autonomy in DoD Systems.” Office of the Under Secretary of Defense for Acquisition, Technology and Logistics, Washington, DC, p. 21, July 2012.

- National Institute of Standards and Technology. “Autonomy Levels for Unmanned Systems (ALFUS) Framework.” NIST Special Publication 1011-I-2.0, Gaithersburg, MD, 2008.

- Blasch, E. “One Decade of the Data Fusion Information Group (DFIG) Model.” U.S. Air Force Research Laboratory, Rome, NY, https://www.researchgate.net/publication/300791820_One_decade_of_the_Data_Fusion_Information_Group_DFIG_model, accessed 5 April 2020.

- Wickens, C. “Situation Awareness: Review of Mica Endsley’s 1995 Articles on Situation Awareness Theory and Measurement.” Human Factors, vol. 50, no. 3, pp. 397–403, 2008.

- Franke, J., et al. “Collaborative Autonomy for Manned/Unmanned Teams.” Paper presented at the American Helicopter Society 61st Annual Forum, Grapevine, TX, June 2005.

- Franke, J., B. Satterfield, and M. Czajkowski. “Self-Awareness for Vehicle Safety and Mission Success.” Unmanned Vehicle Systems Technology Conference, Brussels, Belgium, December 2002.

- Hogue, T. “Community-Based Collaborations – Wellness Multiplied.” Oregon Center for Community Leadership, Corvallis, OR, 1994.

- Nachi Robotic Systems Corporation. https://www.nachirobotics.com/collaborative-robots/, accessed 8 April 2020.

- Defense Innovation Board. “AI Principles: Recommendations on the Ethical Use of Artificial Intelligence by the Department of Defense.” Washington, DC, https://media.defense.gov/2019/Oct/31/2002204458/-1/-1/0/DIB_AI_PRINCIPLES_PRIMARY_DOCUMENT.PDF, accessed 3 April 2020.

- U.S. DoD. “DoD Architecture Framework Version 2.0.” https://dodcio.defense.gov/Portals/0/Documents/DODAF/DoDAF_v2-02_web.pdf, accessed 6 April 2020.