Summary

Anomaly detection, whereby signals are scanned for behavior changes, is a common problem in many applications. As data streams grow in complexity and volume, automated methods for anomaly detection, especially those based on machine learning (ML), are increasingly attractive. However, many real-world defense applications like space domain awareness (SDA) are challenging environments for these algorithms because sensors can be noisy, datasets can be large, and observations can have unpredictable gaps. Furthermore, many automatic detections are limited in their actionable utility because they lack a meaningful measure of uncertainty and confidence. Without uncertainty analysis, it can be difficult for ML models and their recommendations to be trustworthy.

This article will describe a scalable ML algorithm, based on Gaussian processes (GPs), to address many of these concerns. An example from SDA is used that detects and identifies resident space object (RSO) trajectories and behaviors. It shows how the algorithm MuyGPs can accurately identify RSO anomalies from real-world data and provide uncertainty in those detections. While immediately useful for SDA applications, the MuyGPs framework is flexible, fast, and general, making it an attractive option for a diverse set of real-world missions whenever abnormal behavior needs to be identified and acted upon.

Introduction

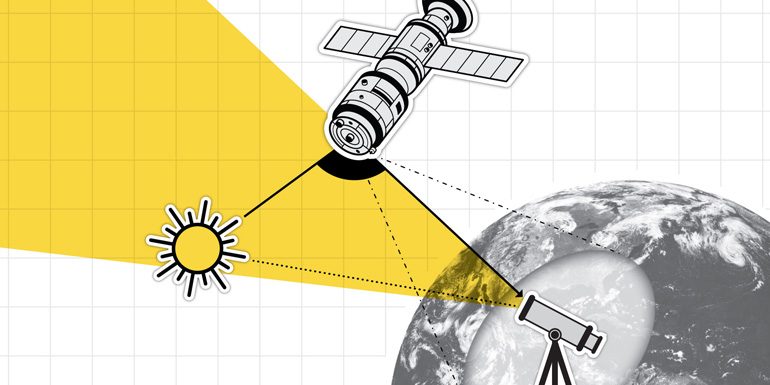

Anomaly detection, the process of identifying unusual patterns or events, is an essential tool for monitoring the vast and dynamic space environment. By effectively detecting anomalies in RSO trajectories or behavior, valuable insights can be gained into maneuvers, malfunctions, or potential threats. However, applications to real-world data face significant hurdles. Measurement noise and gaps in the data from faulty sensors to external phenomena like the weather can mask true anomalies or trigger false alarms. Furthermore, the amount of data expected to grow dramatically in the near future due to the increase in satellite launches and the proliferation of low-cost cameras that can easily collect large samples of data can overwhelm traditional processing techniques, making it difficult to identify anomalies in a timely manner. This motivates a need for automatic methods for detecting anomalies in large, noisy, and potentially sparse data sets.

Light curves of RSOs are time series of observed brightness that provide a wealth of information. The brightness of an RSO depends on several components, including size, shape, reflective material, distance from observer, and solar phase angle. A light curve is also sensitive to dynamic quantities like spin-rate, tumbling motion, or maneuvers. A sudden change in the brightness of a satellite could indicate that it has been damaged or has had its performance degraded. Several studies have successfully demonstrated the use of light curves to infer RSO properties [1–6]. They are also good examples of large, sparse, and noisy datasets, which is why they are used as a realistic benchmark for demonstrating the methods described here.

GPs provide a nice framework for working with such sparse and noisy datasets, as they typically give accurate predictions while requiring less training data than more popular ML techniques and naturally incorporating uncertainty into their predictions. But standard GP models are typically limited to small training datasets (less than ~10,000 observations). To overcome these limitations, a scalable GP model called MuyGPs was employed to efficiently predict large datasets faster and more accurately than competing GP methodology [7]. It is ideal for the future of this SDA problem, where efficient predictions are needed in very short time spans and with minimal computing power.

An approximate GP-based method for SDA anomaly detection and object classification is presented. This expands on the previous results of Goumiri et al. [8, 9], where it was shown that GPs can effectively be used to interpolate and forecast RSO light curves. This article will expand upon these models to demonstrate a method for detecting anomalies in various scenarios and a workflow that can be used to classify unknown RSOs based on their light curves.

The anomaly detection workflow consists of predicting known data using a GP model comparing predictions with actual measurements considering the predicted uncertainty. This model includes a heteroscedastic noise model, wherein each observation has a previous, separate noise derived from on-sensor measurements taken at the time of observation as well as an anisotropic kernel assumption. The details will be described further in the Methodology section.

The RSO classification workflow has two major modeling stages. First, since the training data is collected at sparse and irregularly spaced locations, a model like that in Goumiri et al. [9] is utilized to interpolate light curve observations to a consistent set of observing times over each night. The second major step in this workflow is to utilize these interpolated observations as training data to classify the light curves.

This article outlines the ML workflow useful for future SDA anomaly detection and classification problems based on light curve observations. The Methodology section describes the data and GP methods, including descriptions of the heteroscedastic and anisotropic model features and the reference frame interpolation used for preparing data for classification. The Results section details how these methods work in practice on forecasting and anomaly detection tasks as well as a classification task that requires interpolated data. Finally, the Conclusions section explains the implications of the results and future directions for this work.

Methodology

Light Curve Data

A ground-based, commercial off-the-shelf camera pointed at geostationary orbits can accumulate a large amount of RSO data for relatively little cost. However, there are limitations working from the ground, such as changing lighting conditions, variable sensor availability, and poor or missing observations resulting from bad weather. Light curves from these objects will routinely include regions of noisy data and large gaps that must be addressed if the data are to be used for training ML models.

In this analysis, a dataset provided by Dave Monet is used that consists of 43 known satellites measured from a single camera in Flagstaff, AZ, between September 2014 and September 2018 [10]. This is the same dataset used in previous papers by Goumiri et al. [8, 9]. Each measurement consists of a measured time, position on the sky, and brightness and error in a filter close to the Johnson V-band. There are ~500,000 data points per object, resulting in a dataset of ~6.5 million data points. This analysis was limited to 13 satellites labeled as “Nominal” in the dataset (see the Interpolation With GPs subsection), interpolated to denoise and complete missing data (Figure 1). These were selected because they have data over the full period and appear to be active satellites.

![Figure 1. Nominal Light Curves: Magnitude Is Plotted as a Function of Days (Rows) and Times of Day (Columns) for a Four-Year Duration (Source: Goumiri et al. [9]).](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Goumiri_Figure_1.jpg)

Figure 1. Nominal Light Curves: Magnitude Is Plotted as a Function of Days (Rows) and Times of Day (Columns) for a Four-Year Duration (Source: Goumiri et al. [9]).

GPs

GPs are powerful tools for modeling and predicting noisy data that can be used to learn nonlinear relationships between features and responses. These responses can be a continuous variable, such as the brightness of the light curve at a particular time, or discrete labels, such as the identification of light curve observations with individual RSOs or classes of object or orbital regimes. GPs for regression and classification tasks are used in this work.

A GP parameterizes a collection of random variables such that any subset of these variables is a jointly multivariate Gaussian with a known mean function mϑ(·) and covariance function kϑ(·, ·), where ϑ is a set of hyperparameters that control the behavior of the GP. mϑ(·) is usually taken to be 0, without a loss of generality. As such, the joint probability distribution of any set of GP outputs can be completely specified by its mean vector and covariance matrix, and the conditional (posterior) distribution of predictions can be estimated by applying Bayes’ rule.

GPs naturally incorporate uncertainty into their predictions, and model decisions including the form of kϑ(·, ·) have a natural statistical interpretation. These features are advantageous compared to other popular ML techniques such as deep neural networks (DNNs), although uncertainty quantification and interpretation of DNNs is an active area of research. The covariance matrix of a GP specifies the degree of correlation between different outputs. Being able to quantify the uncertainty of predictions without training specifically for it is important for many applications.

Goumiri et al. initially explored the GP modeling of RSO light curves [8]. That work used isotropic and homoscedastic assumptions in its model, where a covariance was taken to be a function of Euclidean distance in the input space and the noise of each measurement was assumed to be i.i.d. Gaussian. However, the resulting posterior variances were misspecified, as the uncertainties around the relatively stable measurements taken in the middle of the night were the same width as the much noisier measurements taken near dusk or dawn. This analysis attempts to correct these model discrepancies to more accurately measure the uncertainties associated with RSO light curve prediction.

The model in Linares and Furfaro [5] assumed measurement noise for observations was drawn i.i.d. from Ν(0, ε). That is, given time dimensions X = (x1, … xn)T, the response Y (X) is modeled as

Y (X) = (Y (x1 ),…, Y (xn ))T ~ Ν(0, σ2 (Kϑ(X, X) + ϵIn )) , (1)

where N is the multivariate Gaussian distribution, 0 is the n-dimensional zero vector, σ2 is a variance scaling term, In is the n × n identity matrix, and Kϑ(X, X) is an n × n positive definite, symmetric covariance matrix between the elements of X controlled nonlinearly through kernel function kϑ(·, ·) with hyperparameters ϑ.

As noted in Goumiri et al. [8], using a homoscedastic noise model implies that the prior noise is constant over all magnitudes. However, in practice, the data at high magnitudes tend to have more variance than the data collected at lower magnitudes. The model considered in this analysis uses a vector of noise prior variances ε, where εi ~Ν(0, εi) for each observation i. These variance priors are taken from measurements collected from the sensor at the same time as the corresponding magnitude. This changes the prior in equation 1 to

Y (X) = (Y (x1 ),…, Y (xn ))T ~Ν(-0, σ2 (Kϑ(X, X) + diag(ε))) , (2)

where diag(ε) is the diagonal matrix with ε along the diagonal.

The prior model is further generalized by utilizing an anisotropic distance model. It used the isotropy assumption that kϑ(x, z) = pϑ(||x−z||2 / ℓ 2) for some function pϑ(·) and a single length scale parameter ℓ. However, embedding time into multiple dimensions imposed an arbitrary relationship between displacement in time of day and displacement in the day-of-observation interval sensitive to the units of the observations and how the data were normalized. While the initial exploration disregarded this detail, this relationship is modeled here by considering functions of the form kϑ(x, z) = pϑ(Σj=1…d (xj−zj)2 / ℓ2)) for the same function pϑ(·) and separate length scale parameters ℓj.

Explicitly modeling length scales along each feature dimension creates less sensitivity to data normalization choices and makes the covariance scales along each dimension clear.

Interpolation With GPs

Because light curves are often noisy and intermittent and many applications require complete and smooth data, interpolation is key to being able to denoise and further process light curve data. Goumiri et al. [8] demonstrated that GPs were very performant at interpolating light curves provided that the data were properly embedded in a low-dimensional space reflecting their periodicity. Using two-dimensional (2-D) embedding where each data point was replaced by a 2-D vector was described. The first dimension represented the time of day as a real number in the [0, 1] interval. Another dimension represented the day of the observation period (four years) as an integer, starting at zero on the first day of observation, and further normalized to the interval [0, 1]. As was customary, the constant mean was also subtracted from the responses and added back to the predictions.

MuyGPs with a Matérn kernel and a fixed smoothness hyperparameter of ν = 0.2 was used to implement the new models. This smoothness hyperparameter was selected to ensure that predicted curves had the desired degree of smoothness and were fixed to limit the cost of optimizing other hyperparameters. Isotropic models have one additional hyperparameter, the length scale ℓ, that is set to ℓ = 1. Anisotropic models have two length scale hyperparameters, ℓ1 and ℓ2, that are set to ℓ1 = 0.1 and ℓ2 = 1. The median of the measured magnitude error was used as the homoscedastic noise ϵ and a polynomial fit of order 2 of that same measured magnitude error to smooth the heteroscedastic noise ε.

Classification With GPs

To demonstrate an example of a task that requires properly interpolated data rather than raw time series of magnitudes, GPs are used as tools for identifying unseen light curves based on a database of known ones. This classification task maps vectors of magnitudes to light curves labels and hence requires that the magnitudes for all different light curves be interpolated to a common set of time points. Interpolating the light curves using the methods described in the Interpolation With GPs subsection on a regularly-spaced grid of time points (5 min apart) spanning the longest common interval of time to all light curves in the dataset (about four years) was chosen. That common interval guarantees strictly interpolation and not extrapolation since extrapolation is susceptible to larger errors and the use of a regularly-spaced grid for times makes plotting easier (see Figure 1). To incorporate the uncertainty provided by the GPs during the interpolation phase into the classification, one-day chunks from the posterior distributions are sampled, and each sampled chunk of interpolated magnitudes forms a feature vector. Each feature vector is then associated with a one-hot encoded label with zero mean, meaning light curve i has a vector label that is all −1/N except for component i, which is (N−1)/N, where N = 13 is the number of light curves in the dataset.

To make the task realistic, the first three years are reserved for training the classifier, an isotropic GP with homoscedastic noise similar to the one described in the Interpolation With GPs subsection. The fourth year is used as the test data.

The training and test data are interpolated independently. Predictions are then compared to actual measurements to determine the accuracy. This is a realistic SDA scenario since it forecasts the future and can be readily applied given historical data.

Results

The sensors used to capture light curves were calibrated for the night sky. Unsurprisingly, the images produced at dawn or dusk, when the sky became brighter, were noisier than images produced in the middle of the night. Figure 2 shows an example of the magnitude of a light curve for one day of missing data (Day 445 of Sat0019). At the start of night, the captured magnitude is high and noisy. Then, as the night progresses, the magnitude drops and so does the noise. Finally, as dawn approaches, the magnitude gets higher and noisier again.

![Figure 2. A Homoscedastic Model (Left) vs. a Heteroscedastic Noise Model (Right) Comparing GP Prediction (Red) With Measurement (Blue) (Source: Goumiri et al. [9]).](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Goumiri_Figure_2.jpg)

Figure 2. A Homoscedastic Model (Left) vs. a Heteroscedastic Noise Model (Right) Comparing GP Prediction (Red) With Measurement (Blue) (Source: Goumiri et al. [9]).

However, a homoscedastic noise model cannot capture that natural variation of the noise level with the magnitude. The left pane of Figure 2 shows how a homoscedastic model underestimates the noise at dawn and dusk and overestimates it in the middle of the night. The pink-shaded region represents the 95% confidence interval, where 95% of the data is expected to lie. The coverage is about 66%, meaning that 66% of the points are within the shaded region overall. Ideally, the coverage should be close to 95%. Near the edges, many points lie outside the region, visibly more than 5%. At the center, 100% of the points are within the region.

On the other hand, a heteroscedastic noise model can consider the heterogeneity of the variance. While the relation between the noise and the magnitude could be learned, e.g., using a GP, this dataset contained measurement errors obtained from the sensors for each data point, so they were used to fit a quadratic function from the noise model. The right pane of Figure 2 shows how a heteroscedastic model produces a magnitude-dependent variance that properly reflects the uncertainty at any time of the night. The coverage is close to 95% and, as importantly, homogeneously correct throughout the night.

Figure 3 is like Figure 2 but shows the effect of using an anisotropic deformation model. The left pane shows an example of the magnitude of a light curve during a single night as predicted by an anisotropic model with homoscedastic noise. The right pane shows the same prediction using an anisotropic model with heteroscedastic noise. The pink-shaded region is the 95% confidence interval of the prediction. The root means square error is lower (better) using the anisotropic models, although the difference is marginal. This is because the scaling of the input’s two dimensions was carefully optimized to get a reasonably isotropic distribution of nearest neighbors. The effect might be more pronounced in problems with greater scale differences across dimensions of the feature space.

![Figure 3. Anisotropic Models Comparing GP Prediction (Red) to Measurement (Blue) for One Day of Missing Data (Day 445 of Sat0019) (Source: Goumiri et al. [9]).](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Goumiri_Figure_3.jpg)

Figure 3. Anisotropic Models Comparing GP Prediction (Red) to Measurement (Blue) for One Day of Missing Data (Day 445 of Sat0019) (Source: Goumiri et al. [9]).

One important selling point for using GPs in space applications is their ability to predict full posterior distributions, providing insight into the confidence of the model for each prediction. Having an objective measure of confidence can be useful in computing probabilities and help in decision-making. It is also possible to use the posterior distribution to detect anomalies. For instance, GPs can be used to predict known measurements and compare the posterior mean to the measurements given the posterior standard deviation. Points where the difference consistently exceeds the standard deviation can be flagged as anomalies and further analyzed. Figure 4 shows an example of anomalies detected by the GP for a certain period for light curve Sat0024 that can sometimes uncover maneuvers (top). Sat0024 exhibits such anomalies in the fourth year (yellow-shaded regions). Analyzing the corresponding orbital elements obtained from Spacetrack (NORAD ID 29155; bottom) reveals discontinuities consistent with a maneuver (pink-shaded region) that could explain the second anomaly.

![Figure 4. Comparing GP Predictions (Posterior Mean) to Measurements and Normalizing the Difference by the Posterior Standard Deviation (Source: Goumiri et al. [9]).](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Goumiri_Figure_4.png)

Figure 4. Comparing GP Predictions (Posterior Mean) to Measurements and Normalizing the Difference by the Posterior Standard Deviation (Source: Goumiri et al. [9]).

Lastly, to demonstrate a realistic task that requires preprocessing raw light curves, a classifier (also powered by MuyGPs) was built and trained on the first three years of the dataset. This was set up to identify the light curve it belongs to among the 13 trained on from a single day of unseen data from the fourth year. Every single day of the last year of data is classified this way, and the results are reported in Figure 5.

![Figure 5. Classification Results (Source: Goumiri et al. [9]).](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Goumiri_Figure_5.jpg)

Figure 5. Classification Results (Source: Goumiri et al. [9]).

Using the anisotropic model with heteroscedastic noise to interpolate the light curves yields a slightly better classification accuracy. The overall accuracy is 78%, meaning that the provenance of 78% of the 13 × 365 test days is correctly identified. For certain light curves, the accuracy is close to 100%, while for others, it is lower. Each row is a test day, ordered by time and light curve, and each column represents one light curve. The colors represent the one-hot encoded prediction results—vectors of probabilities of belonging to each of the 13 light curves. On top of that, the green and red dots represent correct and incorrect predictions, respectively, and are positioned on the predicted point’s light curve. There are several potential reasons for the misclassifications, but they are mainly due to issues in the interpolation step since a different classifier, a random forest, on the same data was tested and resulted in similar results. What happens in the interpolation phase is that some of the satellites either maneuver or rotate. This causes cyclic variations every couple of days that interfere with the nearest neighbor selection process and lead to jumps in the predicted data. This, in turn, leads to misclassification in the classification phase.

Conclusions

A novel method for efficiently processing and classifying light curve data while inherently quantifying uncertainty was presented. This capability is crucial for SDA, as the volume of data continues to rise due to advancements in sensor technology and the growing number of satellites and debris. While automation offers significant advantages, its effectiveness ultimately relies on the confidence assigned to its results. GPs address this challenge by naturally incorporating uncertainty quantification through their mathematical framework, bypassing the potential pitfalls of learning-based approaches. This inherent trust in GP predictions is further enhanced by the approach that leverages the full posterior distributions generated by the model to create representative training data for the classifier, effectively incorporating uncertainty into the classification process.

Furthermore, the predicted uncertainty serves as a powerful tool for anomaly detection. By identifying data points that deviate significantly from the model’s expected range of magnitudes established through prior observations, these anomalies are flagged as potential indicators of malfunctions or maneuvers. Correlating them with additional signals can further strengthen the case for investigation, enabling either manual or automated follow-up analysis. This approach extends beyond the realm of light curve data; its applicability transcends time-series datasets and can be effectively applied to a broader range of scientific inquiries. By incorporating uncertainty quantification into the analysis pipeline, defense analysts can automate classification and gain valuable operational insights from potential outliers that might hold significant intelligence value.

While light curve data can be inherently noisy and incomplete, this analysis also highlighted the challenges posed by significant variations in the data itself. ML methods rely heavily on training data that accurately reflect the test data. In this case, some light curves exhibited year-over-year or even day-over-day variations due to maneuvers or rotations, which could lead to misclassifications. However, these variations were often captured by the uncertainty inherent in the posterior distributions of the interpolated light curves. Interestingly, comparing GPs and random forest classifiers for the classification task revealed similar performance and error rates. This suggests that the core challenge lies in effectively interpolating the data to a common grid and not in the classification itself.

The MuyGPs method showcases its potential beyond light curve classification. It is particularly well-suited for applications that involve large datasets, often exceeding the capabilities of traditional GPs. But since it is inherently a GP, it also proves valuable when the available data volume might not be sufficient for training other ML techniques such as DNNs. While light curves served as the demonstration here, MuyGPs broadly applies and works best under two key conditions. First, a reasonable overlap between training and test data distributions is crucial for optimal performance. Second, the data dimensionality should ideally be manageable, either inherently (less than 500 dimensions) or through dimensionality reduction techniques like principal component analysis (PCA). As prior work demonstrated, PCA can even enhance accuracy and performance [11].

Looking ahead, one potential area of improvement for MuyGPs lies in addressing the challenges posed by variations in light curve data. Currently, nearest neighbor calculations for interpolation rely on isotropic normalization of day and time of day, which can introduce inconsistencies due to the arbitrary scaling between dimensions. Investigating methods to incorporate anisotropic scaling during neighbor selection or even iteratively updating the nearest neighbor index regarding learned deformation parameters could lead to improved accuracy in handling these variations in future work.

Acknowledgments

This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory (LLNL) under Contract DE-AC52-07NA27344. Funding for this work was provided by LLNL laboratory-directed research and development grant 22-ERD-028.

Note

This document was prepared as an account of work sponsored by an agency of the U.S. government (USG). Neither the USG nor Lawrence Livermore National Security, LLC, nor any of their employees make any warranty, expressed or implied, or assume any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed or represent that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by tradename, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the USG or Lawrence Livermore National Security, LLC. The views and opinions of authors expressed herein do not necessarily state or reflect those of the USG or Lawrence Livermore National Security, LLC, and shall not be used for advertising or product endorsement purposes.

References

- Dianetti, A. D., and J. L. Crassidis. “Space Object Material Determination From Polarized Light Curves.” AIAA Scitech 2019 Forum, p. 0377, 2019.

- Furfaro, R., R. Linares, and V. Reddy. “Space Objects Classification via Light-Curve Measurements: Deep Convolutional Neural Networks and Model-Based Transfer Learning.” AMOS Technologies Conference, Maui Economic Development Board, 2018.

- Furfaro, R., R. Linares, and V. Reddy. “Shape Identification of Space Objects via Light Curve Inversion Using Deep Learning Models.” AMOS Technologies Conference, Maui Economic Development Board, Kihei, Maui, HI, 2019.

- Jia, B., K. D. Pham, E. Blasch, Z. Wang, D. Shen, and G. Chen. “Space Object Classification Using Deep Neural Networks.” The 2018 IEEE Aerospace Conference, pp. 1–8, 2018.

- Linares, R., and R. Furfaro. “Space Object Classification Using Deep Convolutional Neural Networks.” The 19th International Conference on Information Fusion (FUSION), pp. 1140–1146, 2016.

- Linares, R., M. K. Jah, J. L. Crassidis, and C. K. Nebelecky. “Space Object Shape Characterization and Tracking Using Light Curve and Angles Data.” Journal of Guidance, Control, and Dynamics, vol. 37, no. 1, pp. 13–25, 2014.

- Muyskens, A., B. Priest, I. Goumiri, and M. Schneider. “MuyGPs: Scalable Gaussian Process Hyperparameter Estimation Using Local Cross-Validation.” arXiv preprint arXiv:2104.14581, 2021.

- Goumiri, I. R., A. M. Dunton, A. L. Muyskens, B. W. Priest, and R. E. Armstrong. “Light Curve Completion and Forecasting Using Fast and Scalable Gaussian Processes (MuyGPs).” Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference (AMOS), 2022.

- Goumiri, I. R., A. L. Muyskens, B. W. Priest, and R. E. Armstrong. “Light Curve Forecasting and Anomaly Detection Using Scalable, Anisotropic, and Heteroscedastic Gaussian Process Models.” Technical report, Lawrence Livermore National Laboratory, Livermore, CA, 2023.

- Monet, D. Personal communication. Dataset (2014–2018) from Flagstaff, AZ, 2022.

- Goumiri, I. R., A. L. Muyskens, M. D. Schneider, B. W. Priest, and R. E. Armstrong. “Star-Galaxy Separation via Gaussian Processes with Model Reduction.” arXiv preprint arXiv:2010.06094, 2020.

Biographies

Imène Goumiri is a staff scientist in the Computational Engineering Group at LLNL working on applications of Gaussian processes at scales ranging from atomic structures to astronomy. Her expertise includes control theory, plasma physics, and numerical analysis. Dr. Goumiri holds an M.A. and Ph.D. in mechanical and aerospace engineering from Princeton University, an M.Eng. in mathematical and mechanical modeling from the Polytechnic Institute of Bordeaux, and an M.S. in mathematics, statistics, and economics (with a specialty in modeling, computation, and environment) from the Université de Bordeaux 1.

Amanda Muyskens is a staff member in the Applied Statistic Group at LLNL, where she leads the MuyGPs project that has developed novel methods for scalable, nonstationary Gaussian processes for high-performance computing and the Data Science Summer Institute. Her expertise includes surrogate modeling, Gaussian process models, computationally efficient ML, uncertainty quantification, and statistical consulting. Dr. Muyskens holds bachelor’s degrees in mathematics and music performance from the University of Cincinnati and an M.S. and Ph.D. in statistics from North Carolina State University.

Benjamin (Min) Wesley Priest is a postdoctoral researcher at the Center for Applied Scientific Computing, LLNL, focusing on scalable graph analytics, high-performance computing, and scalable statistics and ML. Min has developed novel algorithms for Gaussian process estimation, distributed subspace embeddings and sketching for massive graphs, and published in conferences and journals. Dr. Priest holds a Ph.D. in engineering from Dartmouth College.

Robert Armstrong is a staff scientist at LLNL working on image processing algorithms for large astronomical surveys and focusing on the upcoming Rubin Legacy Survey of Space and Time. His research interests involve using survey data to understand open questions in physics, including dark energy, dark matter, and aspects of the early universe; Bayesian statistics; ML; and high-performance computing. He has performed research for the Dark Energy Survey and the Hyper Suprime-Camera Collaboration.

Jayson “Luc” Peterson is the associate program leader for data science within the Space Science and Security Program at LLNL, where he oversees several data science and space projects. He has worked on modeling and simulation, experimental design, digital engineering, uncertainty quantification, verification and validation, data analytics, high-performance computing, and ML in various applications from nuclear fusion to COVID-19 response. Dr. Peterson holds a B.A. in physics and science, technology, and society from Vassar College and an M.S. and a Ph.D. in astrophysical sciences (plasma physics) from Princeton University.