The Defense Systems Information Analysis Center (DSIAC) was asked for information on techniques and sensors that small unmanned aircraft systems (sUAS) can use for shoreline mapping, coastal beach management, and forestry monitoring. DSIAC searched open sources for relevant publications and research projects to identify applicable UAS methods. The most promising techniques include light detection and ranging (LiDAR), photogrammetry, and multispectral imaging. Because LiDAR featured prominently, DSIAC also compiled a table of LiDAR sensors designed for sUAS. Finally, DSIAC identified a subject matter expert organization that may be able to collaborate further in this area.

1.0 Introduction

Light Detection and Ranging (LiDAR) is used to create high-resolution digital elevation models with vertical accuracy as good as 10 cm by combining a laser scanner, the Global Positioning System (GPS), and an Inertial Navigation System (INS). The scanner transmits laser pulses, and the distance to the ground is calculated from the time each pulse takes to reflect off the surface and return to the scanner; the GPS and INS data then position and orient each measurement. Because these sensors must be airborne to collect such data, the sensor payload is generally mounted on small aircraft [1]. Data can be difficult to acquire over secluded and dense natural areas, so mounting LiDAR equipment on small unmanned aircraft systems (sUAS) is both desirable and increasingly feasible [2].
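The ranging and positioning steps described above can be sketched in a few lines of Python. This is a simplified illustration, not any vendor's processing chain: it assumes a local east-north-up frame in meters, a yaw-pitch-roll (Z-Y-X) attitude convention from the INS, and it ignores the lever-arm, boresight, and atmospheric corrections that production LiDAR software applies. The function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum; atmospheric effects ignored

def pulse_range(round_trip_time_s: float) -> float:
    """Scanner-to-target distance: the pulse travels out and back, so halve it."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0

def rotation_matrix(roll: float, pitch: float, yaw: float) -> list[list[float]]:
    """Body-to-local rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def georeference(position, attitude, beam_dir_body, round_trip_time_s):
    """Ground point = GPS position + R(attitude) * (range * unit beam vector).

    position: (x, y, z) of the scanner in meters (assumed local frame, z up).
    attitude: (roll, pitch, yaw) in radians from the INS.
    beam_dir_body: beam direction in the aircraft body frame (any length).
    """
    r = pulse_range(round_trip_time_s)
    R = rotation_matrix(*attitude)
    norm = math.sqrt(sum(c * c for c in beam_dir_body))
    beam = [c / norm for c in beam_dir_body]
    offset = [r * sum(R[i][j] * beam[j] for j in range(3)) for i in range(3)]
    return tuple(p + o for p, o in zip(position, offset))

if __name__ == "__main__":
    # Level flight at 80 m, beam pointing straight down, echo from 60 m away.
    t = 2 * 60.0 / SPEED_OF_LIGHT
    print(georeference((500.0, 200.0, 80.0), (0.0, 0.0, 0.0), (0.0, 0.0, -1.0), t))
```

With level flight and a nadir beam, the returned point sits directly below the scanner at roughly 20 m elevation; nonzero roll, pitch, or yaw shift the point accordingly, which is why the INS is essential.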

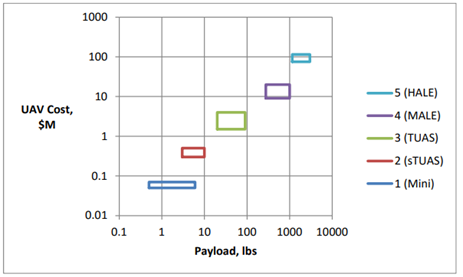

The typical maximum payload of an sUAS is about 10 pounds, while mini-drones (Class 1) have payload capacities ranging from less than a pound to about 8 pounds, as shown in Figure 1. The total weight of an sUAS is generally limited to 55 pounds (25 kg) [3].

Figure 1: Vehicle cost and payload capacity ranges for Department of Defense UAS groups [3]

This report summarizes publicly available reports on the use of LiDAR and other sensor payloads on sUAS and drones to image coastal and forested areas.

2.0 UAS Payloads for Coastal Assessments

Multiple government and environmental agencies have used UAS, specifically sUAS, with various sensor payloads to examine coastlines and beaches, as well as the changes caused by natural disasters. As noted in the National Estuarine Research Reserve System (NERRS) roadmap [4], the benefits of sUAS mapping far outweigh the costs involved. In the reports summarized below, LiDAR is the most prevalent sensor, although applying photogrammetry techniques to aerial images is another option.

In 2018, the National Oceanic and Atmospheric Administration (NOAA) used drone-mounted LiDAR to map dense marsh areas and better understand marsh vulnerability to sea level rise. The detail in the drone imagery provided finer delineations of habitats, with spatial accuracy specifications set at 10 cm root mean square error (RMSE) vertically and 15 cm RMSE horizontally [2]. The project's final report examines the whole process of collecting and evaluating the data in more depth. The project used a variety of UAS, including the DJI Matrice 600 hexacopter and the PrecisionHawk Lancaster 5 fixed-wing aircraft, equipped with either a YellowScan system integrating a Velodyne Puck or a Velodyne Puck VLP-16-based LiDAR system, depending on the location being surveyed. The study's lessons learned include the need to adhere to Federal Aviation Administration (FAA) regulations and the limited area an sUAS may be able to survey in a single flight because of battery life. NOAA also compared the drone data with imagery and data collected from manned aircraft [5]. A presentation of this work by the contracted UAS operators, PrecisionHawk and Quantum Spatial, provides more detail on the payload sensors and other equipment integrated with the UAS [6]. LiDAR was also used in a United States Geological Survey (USGS) project in Alabama to identify shoreline changes and calculate sand volume changes caused by hurricanes [7].
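Checking a dataset against accuracy specifications such as the 10 cm vertical and 15 cm horizontal RMSE targets above typically means comparing it with independently surveyed check points. The sketch below assumes check-point coordinates in meters and computes horizontal RMSE as the radial value sqrt(RMSE_x^2 + RMSE_y^2), a common convention; NOAA's exact procedure may differ, and the function names and check-point values are hypothetical.

```python
import math

def rmse(errors):
    """Root mean square error of a sequence of errors (same units as the input)."""
    errors = list(errors)
    if not errors:
        raise ValueError("need at least one error value")
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def accuracy_report(checkpoints, v_spec_m=0.10, h_spec_m=0.15):
    """Compare dataset coordinates with surveyed check points.

    checkpoints: list of (surveyed_xyz, dataset_xyz) pairs in meters.
    v_spec_m / h_spec_m: vertical and horizontal RMSE limits (defaults follow
    the 10 cm / 15 cm specifications quoted in the text).
    """
    dx = [d[0] - s[0] for s, d in checkpoints]
    dy = [d[1] - s[1] for s, d in checkpoints]
    dz = [d[2] - s[2] for s, d in checkpoints]
    rmse_v = rmse(dz)
    rmse_h = math.hypot(rmse(dx), rmse(dy))  # radial horizontal RMSE
    return {
        "rmse_v": rmse_v,
        "rmse_h": rmse_h,
        "meets_v": rmse_v <= v_spec_m,
        "meets_h": rmse_h <= h_spec_m,
    }

# Hypothetical check points: (surveyed x, y, z), (LiDAR-derived x, y, z) in meters.
checkpoints = [
    ((0.00, 0.00, 1.00), (0.03, 0.04, 1.05)),
    ((10.00, 5.00, 2.00), (9.97, 4.96, 1.95)),
]

if __name__ == "__main__":
    print(accuracy_report(checkpoints))
```

In practice many more check points, spread across different land-cover types, are needed for a meaningful estimate; two points are used here only to keep the arithmetic easy to follow.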

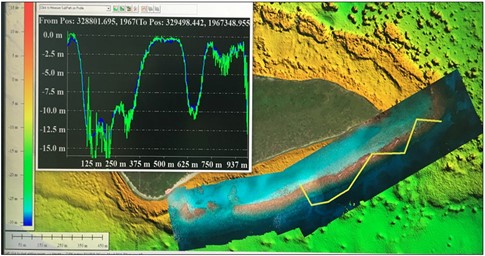

The National Centers for Coastal Ocean Science (NCCOS) funded work that mapped shallow, near-shore areas using inexpensive drones and Structure from Motion (SfM) software, which converts 2D images into 3D habitat maps. Figure 2 compares the SfM-derived data with LiDAR data.

Figure 2: Drone photo mosaic overlaid on LiDAR depths for a reef. The yellow transect marks the depth profile, in which the green line represents the SfM-derived data and the blue line the LiDAR data (Source: United States Geological Survey [8]).
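At the core of SfM, matched features seen from two or more camera positions are turned into 3D points by triangulation. The sketch below shows one simple variant, midpoint triangulation, assuming the camera centers and viewing-ray directions are already known (in a real SfM pipeline they are estimated from the images and then refined, for example by bundle adjustment). It is an illustration of the principle, not the method used by any particular software package, and the function name is hypothetical.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint triangulation of one feature seen from two cameras.

    c1, c2: camera centers (x, y, z); d1, d2: ray directions toward the feature.
    Returns the point halfway between the closest points on the two rays.
    """
    def sub(a, b):
        return [x - y for x, y in zip(a, b)]

    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique intersection")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))

if __name__ == "__main__":
    # Two hypothetical camera positions 20 m apart at 100 m altitude,
    # both observing the same ground feature.
    point = triangulate_midpoint((0.0, 0.0, 100.0), (10.0, 5.0, -100.0),
                                 (20.0, 0.0, 100.0), (-10.0, 5.0, -100.0))
    print(point)  # (10.0, 5.0, 0.0)
```

With noise-free rays the two lines intersect exactly; with real image measurements they pass close to each other, and the midpoint gives a reasonable estimate.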

Photogrammetry, which combines ground control points with aerial photographs, offers another way to map and track beach and coastline elevations and erosion, producing maps with horizontal resolutions of 5-10 centimeters and vertical precision within 8 centimeters. The USGS Woods Hole Coastal and Marine Science Center in Massachusetts used this process to study coastal erosion, sediment transport and storm response, habitat classification, biomass mapping, and marsh stability. The center operates multiple 3DR Solo quadcopters and a Birds Eye View FireFly6 Pro fixed-wing drone, and the resulting images are processed with the Agisoft PhotoScan and Pix4D software packages. Using photogrammetry, researchers can create maps comparable in quality to LiDAR surveys at a fraction of the cost. Drones can also carry multispectral cameras to classify vegetation or identify invasive species [8]. Cohen et al. found photogrammetry to be the more accurate imaging method, yielding several hundred measurements per square meter compared with the 1 to 2 points per square meter acquired from LiDAR. Their project used a DJI Phantom 4 Pro quadcopter with ground control points to obtain highly precise georeferencing of the beachfront and sand dunes for building a model [9].

Post-processed drone images have also been used for bathymetric measurements (measuring water depths) in multiple studies. In one such study, Casella et al. equipped a DJI Phantom 2 drone with a consumer-grade camera with a modified lens to capture overlapping images, which were processed with SfM algorithms. The resulting bathymetric digital elevation model (DEM) compared favorably with a LiDAR dataset, at lower cost, under favorable conditions [10].
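Through-water SfM must contend with refraction at the air-water surface, which makes the bed appear shallower than it is. The sketch below applies a widely used small-angle approximation (true depth is roughly apparent depth times the refractive index of water, assumed here to be 1.34). This is not necessarily the correction Casella et al. applied, it ignores off-nadir viewing angles and surface waves, and the function names are hypothetical.

```python
# Assumed refractive index of clear water; it varies slightly with
# salinity, temperature, and wavelength.
N_WATER = 1.34

def corrected_depth_m(apparent_depth_m, n_water=N_WATER):
    """Small-angle refraction correction for through-water SfM.

    Light bends at the water surface, so an uncorrected reconstruction places
    the bed too shallow; for near-vertical viewing, true depth is approximately
    the apparent depth multiplied by the refractive index of water.
    """
    if apparent_depth_m < 0:
        raise ValueError("apparent depth must be non-negative")
    return apparent_depth_m * n_water

def corrected_bed_elevation_m(water_surface_elev_m, apparent_bed_elev_m, n_water=N_WATER):
    """Convert an apparent SfM bed elevation into a refraction-corrected elevation."""
    apparent_depth = water_surface_elev_m - apparent_bed_elev_m
    return water_surface_elev_m - corrected_depth_m(apparent_depth, n_water)

if __name__ == "__main__":
    # Hypothetical point: water surface at 0 m, SfM bed elevation at -1.5 m.
    print(f"{corrected_bed_elevation_m(0.0, -1.5):.2f} m")
```

Without such a correction, an SfM bathymetric DEM would be expected to read systematically shallower than a LiDAR reference in deeper water.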

3.0 UAS for Forestry Assessments

Just as sUAS and imaging techniques have been combined for coastal assessments, drones carrying various payloads have been used to map areas affected by pathogens. In the reports examined below, the UAS were equipped with hyperspectral, multispectral, or visible and near-infrared (VNIR) cameras to distinguish healthy trees from infected trees of various species.

Potentially the most relevant publication is by Sandino et al., who mounted hyperspectral imaging sensors on a UAS to detect and segment deterioration caused by fungal pathogens in natural and plantation forests in Australia. Their case study examined myrtle rust on paperbark trees in New South Wales. The authors concluded that combining UAS, hyperspectral imaging, and artificial intelligence yielded detection rates of 97.24% for healthy trees and 94.72% for affected trees [11].
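Per-class detection rates like those above can be read as the fraction of each class's samples that the classifier labeled correctly (per-class recall). The sketch below assumes that interpretation, which may not match the paper's exact definition, and uses made-up counts purely to show the arithmetic; they are not Sandino et al.'s data.

```python
def detection_rates(confusion):
    """Per-class detection rate (recall) from a confusion table.

    confusion[true_label][predicted_label] holds a count of samples. The rate
    for a class is the share of that class's samples predicted correctly.
    """
    rates = {}
    for true_label, row in confusion.items():
        total = sum(row.values())
        rates[true_label] = row.get(true_label, 0) / total if total else float("nan")
    return rates

# Hypothetical counts for illustration only.
example = {
    "healthy": {"healthy": 950, "affected": 50},
    "affected": {"healthy": 30, "affected": 170},
}

if __name__ == "__main__":
    for label, rate in detection_rates(example).items():
        print(f"{label}: {rate:.2%}")
```

Reporting the rate separately for each class matters because healthy trees usually far outnumber affected ones, so a single overall accuracy figure can hide poor detection of the rarer class.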

In Austria, a UAS equipped with a 6-band multispectral camera was used to classify trees by species and to identify ash dieback infections and European spruce bark beetle infestations. The authors concluded that the species classification approach was sufficiently accurate and reliable, though the identification of infections could be improved [12].

An investigation in the Czech Republic used VNIR imaging from a drone to identify tree species and assess the health of spruce trees. The analysis was based on the normalized difference vegetation index (NDVI) and a point dense cloud (PDC) raster [13].
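NDVI exploits the fact that healthy vegetation reflects strongly in the near-infrared and absorbs red light, so stressed or defoliated crowns tend to show lower values. The sketch below computes NDVI per pixel and flags pixels below a threshold; the 0.5 threshold and the function names are hypothetical placeholders, not values from the cited study, and real analyses calibrate thresholds against field observations.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red).

    Inputs are reflectances (or calibrated digital numbers) for the same pixel;
    for non-negative inputs the result lies between -1 and 1. Returns 0.0 when
    both bands are zero to avoid division by zero.
    """
    total = nir + red
    return (nir - red) / total if total else 0.0

def flag_low_vigor(nir_band, red_band, threshold=0.5):
    """Flag pixels whose NDVI falls below a (hypothetical) vigor threshold."""
    return [ndvi(n, r) < threshold for n, r in zip(nir_band, red_band)]

if __name__ == "__main__":
    # Hypothetical reflectances for three pixels.
    nir = [0.50, 0.30, 0.45]
    red = [0.10, 0.20, 0.05]
    print([round(ndvi(n, r), 2) for n, r in zip(nir, red)])
    print(flag_low_vigor(nir, red))
```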

4.0 Sensor Payloads for UAS

Growing demand for UAS imaging in applications such as agriculture, forestry, corridor mapping, topography, resource management, shoreline and storm surge modeling, and digital elevation models has made it important to design and engineer smaller LiDAR sensors that fit drone payload restrictions. Table 1 lists LiDAR sensors designed specifically for small UAS.

Table 1: LiDAR Sensors for UAS

| Manufacturer | Model | Range (m) | Weight (g) | Source |
|---|---|---|---|---|
| LeddarTech | Vu8 | 215 | 75 | [14] |
| Velodyne | Puck VLP-16 | 100 | 830 | [14] |
| Velodyne | Puck Lite | 100 | 590 | [14] |
| Velodyne | Puck Hi-Res | 100 | 830 | [14] |
| Routescene | UAV LidarPod | 100 | 2500 | [14] |
| YellowScan | Surveyor | 150 | 1600 | [14,15] |
| YellowScan | Surveyor Ultra | 340 | 1700 | [15] |
| Geodetics | Geo-MMS | – | – | [14] |
| Geotech | Lidaretto | 100-200 | <1500 | [16] |
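As a rough screening aid, the weights in Table 1 can be checked against a vehicle's payload budget. The sketch below is a hypothetical helper, not a vendor tool: it treats the Lidaretto's "<1500 g" as 1500 g, skips the Geo-MMS because no weight is listed, and uses an assumed `reserve_g` allowance for mounts, cabling, batteries, or other equipment that the listed sensor weights may not include.

```python
GRAMS_PER_POUND = 453.59237

# (manufacturer, model, weight in grams) transcribed from Table 1;
# None means no weight is listed.
SENSORS = [
    ("LeddarTech", "Vu8", 75),
    ("Velodyne", "Puck VLP-16", 830),
    ("Velodyne", "Puck Lite", 590),
    ("Velodyne", "Puck Hi-Res", 830),
    ("Routescene", "UAV LidarPod", 2500),
    ("YellowScan", "Surveyor", 1600),
    ("YellowScan", "Surveyor Ultra", 1700),
    ("Geodetics", "Geo-MMS", None),
    ("Geotech", "Lidaretto", 1500),  # listed as <1500 g; upper bound used
]

def sensors_within_budget(payload_lb, reserve_g=0.0):
    """Return Table 1 sensors whose listed weight fits the payload budget.

    payload_lb: vehicle payload capacity in pounds.
    reserve_g: hypothetical allowance (grams) for mounts, cabling, batteries.
    """
    budget_g = payload_lb * GRAMS_PER_POUND - reserve_g
    return [
        (maker, model, weight)
        for maker, model, weight in SENSORS
        if weight is not None and weight <= budget_g
    ]

if __name__ == "__main__":
    # A 2-pound budget leaves room for only the lightest sensors.
    for maker, model, weight in sensors_within_budget(2.0):
        print(f"{maker} {model}: {weight} g")
```

At the roughly 10-pound payload typical of sUAS, every sensor with a listed weight fits on paper, but a realistic reserve for mounting hardware and power quickly narrows the field.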

Collecting photogrammetry data requires a UAS and a capable camera, because the camera must take at least one photo every 2 seconds. Cameras notable for use on smaller UAS include the Canon S110 and SX260; the Sony QX1, DSC-RX100, A7R, A7, A7S, NEX-6, NEX-5R, NEX-5T, and A5100; and the Panasonic GH3. The camera must also perform well at altitudes above 120 meters to produce good results and must attach to the drone system, such as a DJI platform [17]. The third required component is 3D photogrammetry software, which is reviewed further in the DroneZon article “12 Best Photogrammetry Software For 3D Mapping Using Drones” [18].
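The required photo rate follows from flight speed, altitude, and the forward overlap between consecutive images that photogrammetry software needs to match features. The sketch below assumes a nadir camera over flat terrain, with the sensor's short side along the flight direction; the parameter values in the usage note, including the 80% overlap target, are illustrative assumptions, and the function name is hypothetical.

```python
def max_photo_interval_s(altitude_m, sensor_along_track_mm, focal_length_mm,
                         ground_speed_m_s, forward_overlap=0.8):
    """Longest time between exposures that still gives the requested forward overlap.

    The along-track ground footprint of one image is
    sensor_along_track_mm * altitude_m / focal_length_mm (meters); consecutive
    photos may be spaced by footprint * (1 - overlap) along the flight line.
    """
    if not 0.0 <= forward_overlap < 1.0:
        raise ValueError("forward_overlap must be in [0, 1)")
    if ground_speed_m_s <= 0:
        raise ValueError("ground speed must be positive")
    footprint_m = sensor_along_track_mm * altitude_m / focal_length_mm
    photo_spacing_m = footprint_m * (1.0 - forward_overlap)
    return photo_spacing_m / ground_speed_m_s

if __name__ == "__main__":
    for altitude in (60.0, 100.0):
        interval = max_photo_interval_s(altitude, 8.8, 8.8, 10.0, 0.8)
        print(f"{altitude:.0f} m altitude: one photo every {interval:.1f} s")
```

With an assumed 8.8 mm sensor dimension along track, an 8.8 mm focal length, 10 m/s ground speed, and 80% overlap, the camera must fire about every 2 seconds at 100 m and about every 1.2 seconds at 60 m, so flying lower or faster tightens the camera's timing requirement.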

5.0 Subject Matter Expert Organization

A good organization to contact for further subject matter expert (SME) assistance or possible collaboration is the Georgia Tech Research Institute (GTRI) [19].

References

[1] “What is LIDAR and where can I get more information?” USGS. https://www.usgs.gov/faqs/what-lidar-and-where-can-i-get-more-information?qt-news_science_products=7#qt-news_science_products, accessed 3 April 2019.

[2] Vierra, K. “NOAA Evaluates Using Drones for Lidar and Imagery in the National Estuarine Research.” NOAA. https://uas.noaa.gov/News/ArtMID/6699/ArticleID/796/NOAA-Evaluates-Using-Drones-for-Lidar-and-Imagery-in-the-National-Estuarine-Research, 19 November 2018.

[3] Fladeland, M., Schoenung, S., and M. Lord. “UAS Platforms.” White Paper from NCAR/EOL Workshop. https://www.eol.ucar.edu/system/files/Platforms%20White%20Paper.pdf, February 2017.

[4] “The Way Forward: Unmanned Aerial Systems for the National Estuarine Research Reserves.” NOAA. https://uas.noaa.gov/Portals/5/Docs/Library/The-Way-Forward-UAS-for-NERRS-2016.pdf, 2016.

[5] Waters, K. “Unmanned Aircraft System Lidar and Imagery in the National Estuarine Research Reserve System.” Final Report. National Oceanic and Atmospheric Administration. https://uas.noaa.gov/Portals/5/Docs/Projects/FINAL_REPORT–OCM_UAS–08-01-2018.pdf?ver=2018-11-08-145328-793, June 2018.

[6] Faux, R., and M. Coleman. “Small UAS-based LiDAR Acquisition and Processing Considerations for Natural Resource Management.” Quantum Spatial. Presented at Coastal GeoTools, 2017. https://coast.noaa.gov/data/docs/geotools/2017/presentations/Faux.pdf, 9 February 2017.

[7] Ebersole, S., and B. Cook. “Digital Mapping Techniques 2018.” Presented at DMT ’18, Lexington, KY, 20-23 May 2018. https://ngmdb.usgs.gov/Info/dmt/docs/DMT18_Ebersole.pdf.

[8] “Aerial Imaging and Mapping.” USGS. https://www.usgs.gov/centers/whcmsc/science/aerial-imaging-and-mapping?qt-science_center_objects=0#qt-science_center_objects, accessed 8 April 2019.

[9] Cohen, O., Cartier, A., and M.-H. Ruz. “Mapping coastal dunes morphology and habitats evolution using UAV and ultra-high spatial resolution photogrammetry.” Presented at International workshop “Management of coastal dunes and sandy beaches,” Dunkirk, 12-14 June 2018. https://www.researchgate.net/profile/Olivier_Cohen/publication/326059214_Mapping_coastal_dunes_morphology_and_habitats_evolution_using_UAV_and_ultra-high_spatial_resolution_photogrammetry/links/5b35dc29a6fdcc8506db7745/Mapping-coastal-dunes-morphology-and-habitats-evolution-using-UAV-and-ultra-high-spatial-resolution-photogrammetry.pdf.

[10] Casella, E., Collin, A., Harris, D., Ferse, S., Bejarano, S., Parravicini, V., Hench, J.L., and A. Rovere. “Mapping coral reefs using consumer-grade drones and structure from motion photogrammetry techniques.” In Coral Reefs, March 2017, Vol. 36(1), pg. 269-275. https://link.springer.com/article/10.1007/s00338-016-1522-0, 28 November 2016.

[11] Sandino, J., Pegg, G., Gonzalez, F., and G. Smith. “Aerial Mapping of Forests Affected by Pathogens Using UAVs, Hyperspectral Sensors, and Artificial Intelligence.” In Sensors (Basel), April 2018, 18(4): 944. https://www.mdpi.com/1424-8220/18/4/944/htm, 22 March 2018.

[12] Kampen, M., Kederbauer, S., Mund, J-P, and M. Immitzer. “UAV-Based Multispectral Data for Tree Species Classification and Tree Vitality Analysis.” ResearchGate. https://www.researchgate.net/profile/Max_Kampen2/publication/331895337_UAV-Based_Multispectral_Data_for_Tree_Species_Classification_and_Tree_Vitality_Analysis/links/5c921f1a92851cf0ae89d417/UAV-Based-Multispectral-Data-for-Tree-Species-Classification-and-Tree-Vitality-Analysis.pdf, accessed 8 April 2019.

[13] Brovkina, O., Cienciala, E., Surový, P., and P. Janata. “Unmanned aerial vehicles (UAV) for assessment of qualitative classification of Norway spruce in temperate forest stands.” In Geo-spatial Information Science, Vol. 21(1), pg. 12-20. https://www.tandfonline.com/doi/full/10.1080/10095020.2017.1416994?scroll=top&needAccess=true, 2 January 2018.

[14] Corrigan, F. “12 Top Lidar Sensors For UAVs and So Many Great Uses.” DroneZon. https://www.dronezon.com/learn-about-drones-quadcopters/best-lidar-sensors-for-drones-great-uses-for-lidar-sensors/, 26 January 2019.

[15] “Overview.” YellowScan. https://www.yellowscan-lidar.com/products, accessed 8 April 2019.

[16] “Lidaretto.” sUAS News. https://www.suasnews.com/2019/04/lidaretto/, 1 April 2019.

[17] Corrigan, F. “Introduction to UAV Photogrammetry And Lidar Mapping Basics.” DroneZon. https://www.dronezon.com/learn-about-drones-quadcopters/introduction-to-uav-photogrammetry-and-lidar-mapping-basics/, 10 December 2018.

[18] Corrigan, F. “12 Best Photogrammetry Software For 3D Mapping Using Drones.” DroneZon. https://www.dronezon.com/learn-about-drones-quadcopters/drone-3d-mapping-photogrammetry-software-for-survey-gis-models/, 3 March 2019.

[19] Georgia Tech Research Institute. Chief of Program Development Operations. Personal Communication. April 2019.