Summary

Autonomously detecting tiny, fast-moving objects emitting thermal radiation in the infrared is a challenging technical problem. In addition to being fast, these targets are often dim, small, and surrounded by clutter and occlusions. Conventional detection approaches require large size, weight, and power (SWaP) systems, which may introduce substantial latencies. As such, the following will be explored in this article:

- An end-to-end system composed of scene simulation,

- Sensor capture from a novel, highly sensitive micro electromechanical systems (MEMS) microbolometer,

- A readout integrated circuit (ROIC) that uses a unique saliency computation to remove uninteresting image regions, and

- Deep-learning (DL) detection and tracking algorithms.

Simulations across these modules indicate the advantages of this approach over conventional approaches. The system is estimated to detect targets less than 5 s after a fast-moving object enters the sensor’s field of view. To explore low-energy implementations of these computer vision models, DL on commercial off-the-shelf (COTS) neuromorphic hardware is also discussed.

Introduction

The autonomous detection of small and rapidly moving aerial targets is a technically demanding task. Such targets include missiles and airplanes. Missiles are often smaller than airplanes and fly faster; they are thus more challenging to detect. Newer missiles are also more maneuverable than previous missiles, posing a new threat to existing defense systems. Although these objects emit detectable amounts of infrared energy visible far away, their speed makes it difficult to image and track them. At large distances, these objects appear tiny and faint, adding to the task’s complexity. Additionally, these targets are often located in cluttered and occluded environments with other objects, such as slower moving airplanes, the sun, clouds, or buildings. Furthermore, robust detection in different environmental conditions, such as day, night, cloudy days, clear days, etc., poses more challenges.

Biological vision systems have become well adapted over millennia of evolution to ignore clutter and noise, detect motion, and compress visual information in a scene. On the other hand, conventional detection approaches may have difficulty with such a scene and would require increased complexity in hardware and/or software to filter out noise and alleviate nuisance factors while increasing target sensitivity with resulting inefficiencies in size, weight, power, and cost (SWaP-C).

There have been several attempts to create bioinspired vision systems. For example, Scribner et al. [1] created a neuromorphic ROIC with spike-based image processing. Chelian and Srinivasa simulated image processing from retinal [2] and thalamic [3] circuits in the spiking domain under the Defense Advanced Research Projects Agency (DARPA) Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) program for noise suppression, ratios of spectral bands, and early motion processing. (Their work was informed by studies in the rate-coded domain [4, 5].) However, these works do not consider detection or tracking.

To overcome the challenges that conventional systems face, the current work explores mimicking biological vision systems wherever feasible. The imaging system spans everything from the optics taking in the scene to the final processor outputting target reports. The components that would be implemented in hardware are a MEMS microbolometer, a ROIC that uses a unique saliency computation to remove uninteresting image regions and increase overall system speed, and DL detection and tracking models.

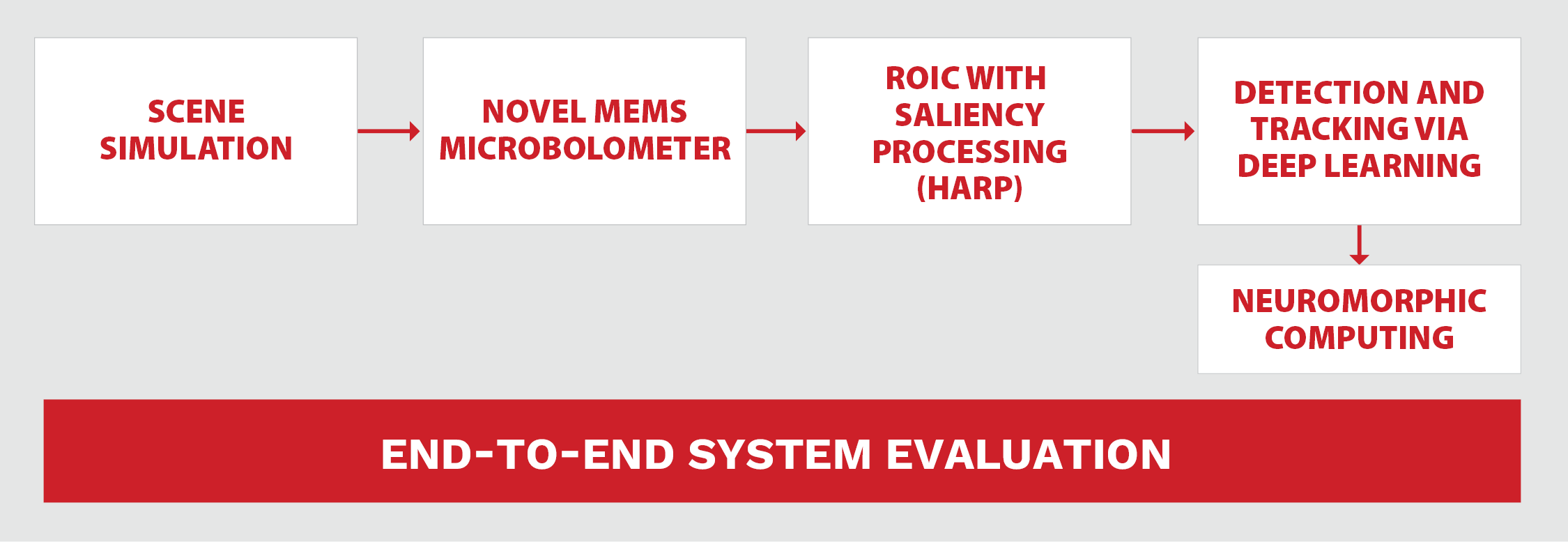

The performance of each component and across the whole system is estimated via tools that include simulated images and videos. The feasibility of COTS neuromorphic hardware to implement DL with less energy than graphical processing units (GPUs) is also described. The bioinspired system’s components are shown in Figure 1.

Figure 1. Bioinspired System for Autonomous Detection of Tiny, Fast Moving Objects in Infrared Imagery. Such a System Would Be Dramatically Smaller, Lighter, Less Power-Hungry, and More Cost-Effective Than Traditional Systems (Source: C. Bobda, Y.-K. Yoon, S. Chakraborty, S. Chelian, and S. Vasan).

Methods

There are five main thrusts to the design effort:

- A scene simulation,

- A MEMS microbolometer,

- A ROIC that uses a unique saliency computation to remove uninteresting image regions and increase detection speed (referred to as Hierarchical Attention-oriented, Region-based Processing or HARP [6]),

- DL detection and tracking models, and

- Neuromorphic computing.

Additionally, end-to-end system evaluation and evaluation of each component are performed.

Scene Simulation

To detect and track tiny, fast objects in cluttered and noisy scenes, training data is needed. However, publicly available datasets of this kind are scarce. For this reason, synthetic datasets were explored. The work of Park et al. [7] illustrates this approach: because real hyperspectral images of contraband on substrates were scarce, synthetic hyperspectral images of contraband substances on substrates were created using infrared spectral data and radiative transfer models. For small and rapidly moving objects, a small publicly available dataset was used first because it had single-frame infrared targets with high-quality annotations, which can be used for detection modules. Animating targets for tracking modules was also explored. There are other infrared datasets of aerial targets such as unmanned aerial vehicles, but these targets tend to occupy more pixels per frame than those in the dataset used in the present work.
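As an illustration of animating a single-frame target into a short video for tracker training, the sketch below moves a one-pixel target across a static background at constant velocity and records the ground-truth position per frame. The function name `animate_target`, the constant-velocity motion model, and the one-pixel target are assumptions made for this example; the article does not specify how its animations were produced.

```python
def animate_target(background, start, velocity, num_frames, intensity=255):
    """Return frames with a one-pixel target moving at constant velocity.

    background: 2-D list of pixel intensities (rows of equal length).
    start, velocity: (row, col) start position and per-frame displacement.
    Also returns the ground-truth (row, col) per frame, or None when the
    target has left the frame.
    """
    rows, cols = len(background), len(background[0])
    frames, truth = [], []
    for k in range(num_frames):
        r = round(start[0] + k * velocity[0])
        c = round(start[1] + k * velocity[1])
        frame = [row[:] for row in background]  # copy so the background is untouched
        if 0 <= r < rows and 0 <= c < cols:
            frame[r][c] = intensity
            truth.append((r, c))
        else:
            truth.append(None)
        frames.append(frame)
    return frames, truth


# Hypothetical 3x5 background; the target moves two columns per frame.
background = [[10] * 5 for _ in range(3)]
frames, truth = animate_target(background, start=(1, 0), velocity=(0, 2), num_frames=4)
print(truth)
```

The ground-truth list can serve directly as tracking annotations, which is one practical benefit of synthetic sequences.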

MEMS Microbolometer

According to Dhar and Khan [8], detection ranges are sensitive to temperature and relative humidity variations, and long-wave infrared (LWIR) ranges depend more upon these variations than mid-wave infrared (MWIR) ranges. Because of this, on average, MWIR tends to have better overall atmospheric transmission compared to LWIR in most scenarios. Therefore, in this work, an MWIR MEMS microbolometer sensor that is highly selective in its spectral range was designed. Prior work in this area includes that of Dao et al. [9].

In a microbolometer, infrared energy strikes a detector material, heating it and changing its electrical resistance. This resistance change is measured and processed into temperatures which can be used to create an image.
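The resistance-to-temperature step can be illustrated with a linearized model. The sketch below assumes a constant temperature coefficient of resistance (TCR) near the operating point; the function name, the TCR value, and the reference resistance are illustrative assumptions and are not taken from the sensor design described here.

```python
def temperature_change_from_resistance(r_measured, r_ref, tcr):
    """Invert the linear model R = R_ref * (1 + tcr * dT) to recover dT in kelvin."""
    if r_ref <= 0 or tcr == 0:
        raise ValueError("r_ref must be positive and tcr must be nonzero")
    return (r_measured / r_ref - 1.0) / tcr


# Hypothetical values: 100 kOhm reference resistance and a TCR of -2 %/K.
delta_t = temperature_change_from_resistance(
    r_measured=99_800.0, r_ref=100_000.0, tcr=-0.02
)
print(f"Detector temperature rise: {delta_t:.3f} K")
```

A real readout chain would also need to calibrate out self-heating and pixel-to-pixel variation, which this sketch ignores.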

There are commercially available non-MEMS microbolometers, but they have poorer sensitivity because their thermal isolation is not as good as that of MEMS-based implementations. In a MEMS device, a physical gap (e.g., air) separates the detector from the substrate. The MEMS-based approach can also increase the effective absorbing area of the sensor with complex structures and increase its responsivity. Yoon et al. [10] demonstrated multidirectional ultraviolet lithography for several complex three-dimensional (3-D) MEMS structures which can be used to create a MEMS microbolometer (Figure 2).

![Figure 2. Previously Demonstrated Complex 3-D MEMS Structures Which Can Be Used for Microbolometers (Source: Yoon et al. [10]).](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Bobda_Figure_2.png)

Figure 2. Previously Demonstrated Complex 3-D MEMS Structures Which Can Be Used for Microbolometers (Source: Yoon et al. [10]).

Hierarchical Attention-Oriented, Region-Based Processing (HARP)

Event-based HARP is a ROIC design that suppresses uninteresting image regions and increases processing speed. It was developed by Bhowmik et al. [6, 11]. The work draws inspiration from and is a simplified abstraction of the hierarchical processing in the visual cortex of the brain where many cells respond to low-level features and transmit the information to fewer cells up the hierarchy where higher-level features are extracted [12].

The main idea is illustrated in Figure 3a. Figure 3b shows an architecture diagram. In the first layer, a pixel-level processing plane (PLPP) provides early feature extraction such as edge detection or image sharpening. Several pixels are then grouped into a region. In the next stage, the structure-level processing plane (SLPP) produces intermediate features such as line or corner detection using a region processing unit (RPU). For a region, processing is only activated if its image region is relevant. Image relevance is computed based on several metrics, such as predictive coding in space or time, edge detection, and measures of signal-to-noise ratio (SNR). The RPU also sends feedback signals to the PLPP using an attention module. If image relevance is too low, pixels in the PLPP halt their processing using a clock gating method. Thus, as with the Dynamic Vision Sensor (DVS) [13], uninteresting image regions such as static fields would not be processed, saving energy and time. On the other hand, unlike the DVS, HARP directly provides intensity information and could differentiate between extremely hot targets and moderately hot targets.

![Figure 3a. HARP Conceptual Diagram.](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Bobda_Figure_3a.png)

![Figure 3b. HARP Architecture Diagram.](https://dsiac.dtic.mil/wp-content/uploads/2024/11/Bobda_Figure_3b.png)

Figure 3. HARP Illustrated as (a) a Conceptual Diagram and (b) an Architecture Diagram. HARP Is Used to Remove Uninteresting Image Regions to Find Targets Faster (Source: Bhowmik et al. [11, 12]).

Finally, at the knowledge inference processing plane (NIPP), global feature processing such as with a convolutional neural network (CNN) is performed. NIPP implementations are described in the next subsection. Because only interesting image regions are processed—not all pixels—speed and power savings advantages are realized. Savings can be up to 40% for images with few salient regions. Because the hardware is parallel, latency is more or less independent of image size.
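The region-gating idea can be sketched in software as follows. This is a minimal illustration, not the HARP hardware design: local contrast (maximum minus minimum intensity) stands in for the relevance metrics named above, and the function name `gate_regions`, the region size, and the threshold are assumptions chosen for the example.

```python
def gate_regions(image, region_size, threshold):
    """Zero out square regions whose local contrast (max - min) is below threshold.

    Local contrast is a stand-in relevance metric chosen for illustration only.
    Returns the gated image and the fraction of regions that were skipped.
    """
    rows, cols = len(image), len(image[0])
    gated = [[0] * cols for _ in range(rows)]
    skipped = total = 0
    for r0 in range(0, rows, region_size):
        for c0 in range(0, cols, region_size):
            r1 = min(r0 + region_size, rows)
            c1 = min(c0 + region_size, cols)
            block = [image[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            total += 1
            if max(block) - min(block) < threshold:
                skipped += 1  # loosely analogous to clock-gating an irrelevant region
                continue
            for r in range(r0, r1):
                for c in range(c0, c1):
                    gated[r][c] = image[r][c]
    return gated, skipped / total


# 4x4 frame with a flat background and one bright pixel in the upper-left region.
frame = [
    [10, 10, 10, 10],
    [10, 90, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]
gated_frame, skipped_fraction = gate_regions(frame, region_size=2, threshold=20)
print(f"Fraction of regions skipped: {skipped_fraction:.2f}")
```

Only the region containing the bright pixel is passed on, so downstream processing touches one of four regions in this toy frame.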

Detection and Tracking

Detection DL models such as You Only Look Once (YOLO) [14] and U-Net [15] were evaluated. Parameter searches over the number of layers, loss function (e.g., Tversky and Focal Tversky), and data augmentation (flipping and rotating images) were also conducted to increase performance.
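For readers unfamiliar with the loss functions named above, the following sketch shows one common formulation of the Tversky and Focal Tversky losses for a binary segmentation mask. The default values of `alpha`, `beta`, and `gamma` are illustrative assumptions, not the settings used in this work, and conventions for the focal exponent differ between papers.

```python
def tversky_index(pred, target, alpha=0.7, beta=0.3, eps=1e-7):
    """Tversky index for flat lists of predicted probabilities and 0/1 labels.

    alpha weights false negatives and beta weights false positives;
    alpha = beta = 0.5 reduces to the Dice coefficient.
    """
    tp = sum(p * t for p, t in zip(pred, target))
    fn = sum((1 - p) * t for p, t in zip(pred, target))
    fp = sum(p * (1 - t) for p, t in zip(pred, target))
    return (tp + eps) / (tp + alpha * fn + beta * fp + eps)


def tversky_loss(pred, target, alpha=0.7, beta=0.3):
    """One minus the Tversky index, clamped at zero against rounding error."""
    return max(0.0, 1.0 - tversky_index(pred, target, alpha, beta))


def focal_tversky_loss(pred, target, alpha=0.7, beta=0.3, gamma=0.75):
    """Tversky loss raised to a power; gamma reshapes the loss curve."""
    return tversky_loss(pred, target, alpha, beta) ** gamma


# Hypothetical four-pixel mask: two target pixels, two background pixels.
pred = [0.9, 0.1, 0.8, 0.2]
target = [1, 0, 0, 1]
print(f"Tversky loss: {tversky_loss(pred, target):.3f}")
print(f"Focal Tversky loss: {focal_tversky_loss(pred, target):.3f}")
```

Weighting false negatives more heavily than false positives (alpha greater than beta) is a common choice when targets occupy very few pixels, since missing a tiny target is then penalized more than a small over-segmentation.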

For tracking, a fully convolutional Siamese network [16] was chosen and applied to the videos derived from the previously mentioned, publicly available dataset. Siamese networks are relatively simple to train and computationally efficient. One input of the twin network starts with the first detection (the exemplar image), and the other input is the larger search image. The twin network’s job is to locate the exemplar image inside the search image. In subsequent frames, the first input is updated with subsequent detected targets.
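The exemplar-in-search-image matching can be illustrated with a plain sliding-window cross-correlation. This is a simplified sketch: a Siamese tracker correlates learned feature embeddings produced by the twin network, whereas raw pixel values are used here only to keep the example self-contained. The function names are hypothetical.

```python
def cross_correlate(search, exemplar):
    """Slide the exemplar over the search image and return a score map."""
    sh, sw = len(search), len(search[0])
    eh, ew = len(exemplar), len(exemplar[0])
    scores = []
    for r in range(sh - eh + 1):
        row = []
        for c in range(sw - ew + 1):
            row.append(sum(search[r + i][c + j] * exemplar[i][j]
                           for i in range(eh) for j in range(ew)))
        scores.append(row)
    return scores


def locate(search, exemplar):
    """Return the (row, col) of the top-left corner with the highest score."""
    scores = cross_correlate(search, exemplar)
    best = max((score, r, c)
               for r, row in enumerate(scores)
               for c, score in enumerate(row))
    return best[1], best[2]


# Hypothetical 4x4 search image with a 2x2 bright blob whose corner is at (1, 2).
search = [
    [0, 0, 0, 0],
    [0, 0, 5, 5],
    [0, 0, 5, 5],
    [0, 0, 0, 0],
]
exemplar = [[5, 5], [5, 5]]
print(locate(search, exemplar))
```

Raw correlation favors bright regions regardless of shape, which is one reason practical trackers correlate learned features rather than pixels.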

Neuromorphic Computing

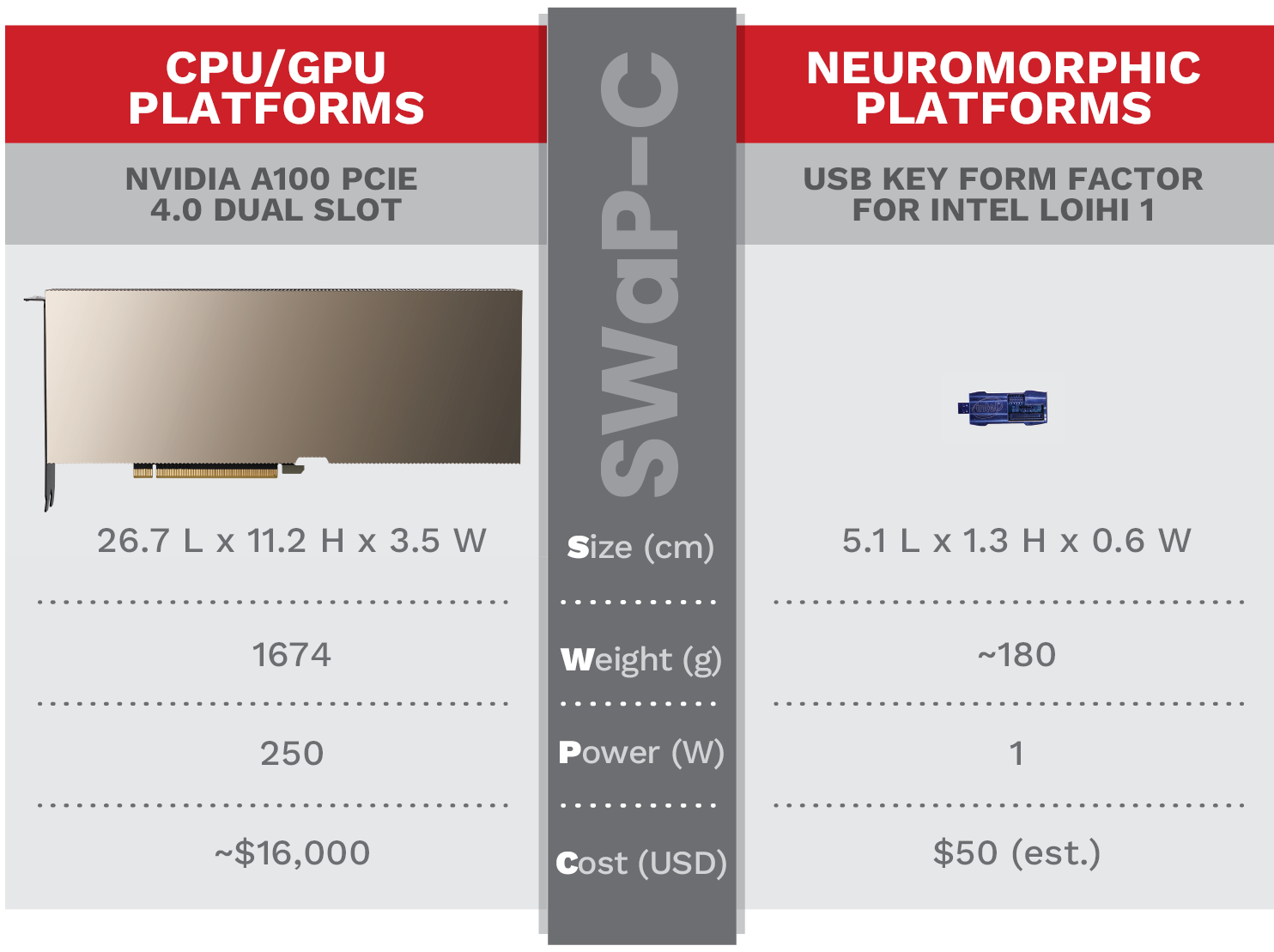

Neuromorphic computing offers low SWaP-C alternatives to larger central processing unit (CPU)/GPU systems. DL detection and tracking modules can be run on neuromorphic computers to exploit these advantages. The alternatives are compared in Table 1.

Table 1. Comparison of CPU/GPU and Neuromorphic Compute Platforms. Neuromorphic Platforms Offer Lower SWaP-C (Source for Left Image, Wikimedia; Right, Intel)

Neuromorphic computing has been used in several domains, including computer vision and cybersecurity. In a computer vision project, classification of contraband substances using LWIR hyperspectral images has been demonstrated in a variety of situations, including varied backgrounds, temperatures, and purities. Full-precision GPU models and BrainChip Akida-compatible models gave promising results [7]. In a cybersecurity project, accurate detection of eight attack classes and one normal class was demonstrated in a highly imbalanced dataset. First-of-its-kind testing was performed with the same network on full-precision GPUs and two neuromorphic offerings: the Intel Loihi 1 hardware and the BrainChip Akida 1000 [17]. Updates have since been made to this work, such as a smaller, more accurate neural network and the use of the BrainChip chip (not just a software simulator) and Intel’s new DL framework Lava for the Loihi 2 chip [18, 19].

End-to-End System Evaluation

For end-to-end system evaluation, speed was the primary focus; however, power consumption was also estimated based on novel simulations or previous work. Each module had its own metrics, e.g., noise equivalent temperature difference (NETD) for the MEMS microbolometer or intersection over union (IoU) for detection and tracking.
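For reference, IoU for two axis-aligned bounding boxes can be computed as in the sketch below. The `(x_min, y_min, x_max, y_max)` box convention is an assumption for this example; segmentation outputs would use the pixel-mask analogue instead.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1) = 1/7.
print(f"IoU: {iou((0, 0, 2, 2), (1, 1, 3, 3)):.3f}")
```

For tiny targets, a one-pixel localization error changes IoU substantially, so IoU values for such targets tend to be lower than for large objects at the same absolute error.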

Results

Results from the five primary thrusts (scene simulation, MEMS microbolometer, HARP, detection and tracking, and neuromorphic computing) and end-to-end system evaluation are given. The system is estimated to detect targets less than 5 s after a fast-moving object enters the sensor’s field of view. The system design would have a dramatically smaller SWaP-C envelope than conventional systems.

Scene Simulation

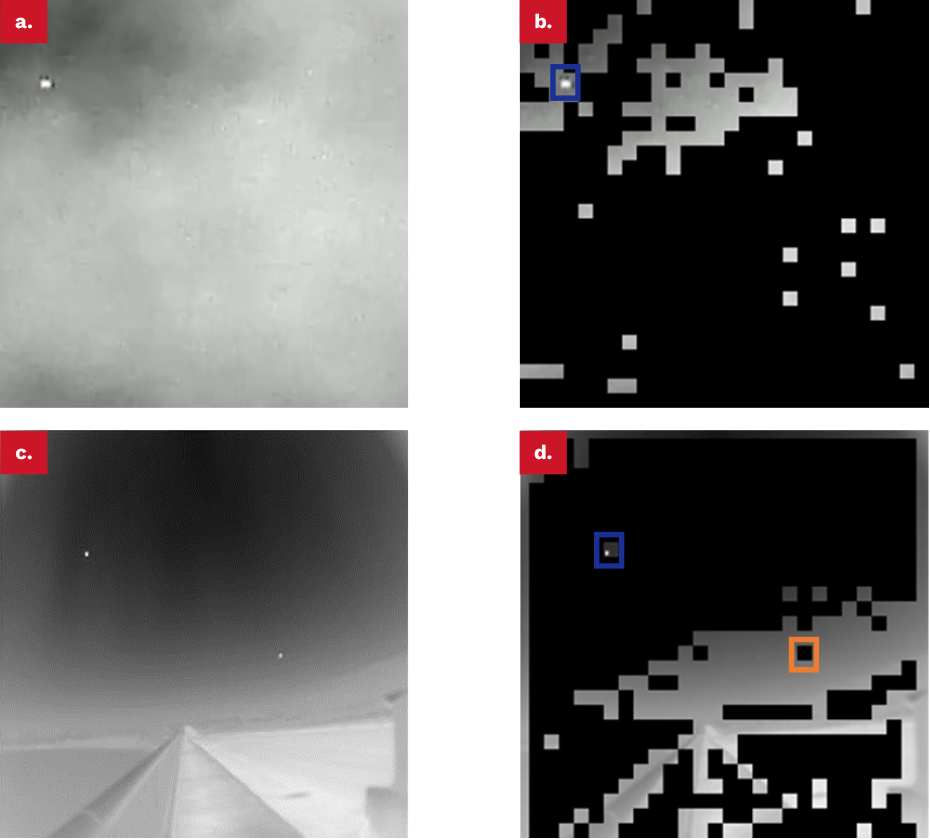

Images from a publicly available dataset were used for detection and tracking. Separate images were used for training and testing. Example images are shown in Figures 4a and 4c. These infrared images have tiny targets in cluttered scenes; thus, they are a good starting point for the current application domain.

Figure 4. Infrared Input Image Regions (a and c) and Salient Regions (b and d) (Black Regions Are Removed by HARP). Image (a) Has a True Positive, and Image (d) Has a True Positive and False Negative (Blue Bounding Boxes Are Accurate Detections, and Orange Is a Missed Region) (Source: github.com/YimianDai/sirst).

MEMS Microbolometer

COMSOL was used to simulate the microbolometer. The simulations showed a 10× improvement in NETD over existing vanadium oxide (VOx) microbolometers and response times approximately 3× shorter. These gains result from the better thermal isolation of the MEMS microbolometer from its support structures and the unique choices of materials.

HARP

HARP was able to remove uninteresting image regions to decrease detection time. In Figure 4, input images from a publicly available dataset and salient regions are shown in two pairs. The top pair retains the target, the bright region in the upper left. In the bottom pair, HARP retains one target in the upper left but omits another one to the right of the center of the image. This initial testing was carried out at a preliminary level. Further parameter tuning is necessary for more refined target extraction.

Detection and Tracking

For detection, U-Net worked better than YOLO networks, probably because targets were tiny. U-Net detected targets in different sizes and varied backgrounds. Across dozens of images, the average probability of detection was 90% and the probability of false alarm was 12%. Further hyperparameter tuning is expected to improve these results.

For tracking, a fully convolutional Siamese network method tracked targets in different sizes and varied backgrounds. Across about a dozen videos, the average IoU was 73%. Further hyperparameter tuning is expected to improve performance.

Neuromorphic Computing

In addition to prior work in neuromorphic computer vision to detect contraband materials [7], recent work from Patel et al. [20] implemented U-Net on the Loihi 1 chip. They achieved 2.1× better energy efficiency than GPU versions, with only a 4% drop in mean IoU. However, processing times were ~87× slower. Their code was not released, so it was not possible to use their implementation with the current dataset. The release of the more powerful BrainChip and Intel Loihi chips, combined with other optimizations, is expected to decrease processing times. For example, preliminary pedestrian and vehicle detection experiments were conducted with a YOLO v2 model on the BrainChip AKD1000 chip using red-green-blue color images. With a test set of 100 images from the PASCAL Visual Object Classes dataset, there was a small decrease in mean average precision of 4% compared to full-precision GPU models, and processing times were ~3× slower.

End-to-End System Evaluation

The detection time was estimated to be 4 to 5 s once a faraway object entered the sensor’s field of view. The following times were included: (1) microbolometer response and readout is estimated at 50 ms via COMSOL; (2) HARP at less than 1 s [12] (estimated at 8 µs to 12.5 ns, with an application-specific integrated circuit [ASIC] or field-programmable gate array [FPGA], respectively); (3) detection and tracking at 2 s (DL algorithms operate at 30 fps or more); and (4) other latencies at 1 s.
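The latency estimate above is a simple sum of stage budgets, which can be reproduced as follows. The dictionary keys are hypothetical labels; the values are the figures listed in the text, with the HARP entry set to its stated 1-s upper bound rather than the much smaller ASIC or FPGA figures.

```python
# Stage latencies in seconds, taken from the estimates listed in the text.
latency_budget_s = {
    "microbolometer_response_and_readout": 0.050,
    "harp_upper_bound": 1.0,
    "detection_and_tracking": 2.0,
    "other_latencies": 1.0,
}

total_latency_s = sum(latency_budget_s.values())
print(f"Estimated detection time: {total_latency_s:.2f} s")
```

The total sits at the low end of the 4 to 5 s range, and detection and tracking is the largest single contributor.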

For power, the system’s estimated power draw is less than 10 mW for the microbolometer via COMSOL and 2 mW to 7 W for HARP with an ASIC or FPGA, respectively, based on Yoon et al. [10]. For detection and tracking, a tradeoff between power and speed is apparent. For targeting applications, GPUs are a better choice and would draw ~250 W, per Table 1. With a 50% margin of safety, the system would draw ~375 W. For less time-critical applications, neuromorphic processors are adequate and would draw ~1 W. With a 50% margin of safety, the system would draw ~1.5 W.
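The margin arithmetic above can be checked with a few lines. This sketch applies the 50% margin to the dominant detection-and-tracking term only, which is how the quoted totals appear to be derived; the milliwatt-level microbolometer and ASIC contributions are neglected as an assumption of this example.

```python
def with_margin(power_w, margin=0.5):
    """Scale a nominal power draw by a fractional safety margin."""
    return power_w * (1.0 + margin)


gpu_system_w = with_margin(250.0)         # GPU-based detection and tracking
neuromorphic_system_w = with_margin(1.0)  # neuromorphic detection and tracking
print(f"GPU option: ~{gpu_system_w:.0f} W")
print(f"Neuromorphic option: ~{neuromorphic_system_w:.1f} W")
```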

Discussion

Overall, a bioinspired system to autonomously detect tiny, fast-moving objects in infrared imagery, such as missiles and aircraft, was presented. Detecting and tracking tiny, fast-moving objects is vitally important to several U.S. Department of Defense customers. As threats continue to evolve, existing systems need updating and/or replacing. Unlike existing systems, the approach here offers the considerable SWaP-C advantages of bioinspired computing in several stages. Civilian applications include tracking launch or reentry vehicles, which is of interest to the National Aeronautics and Space Administration (NASA) as well as private sector companies. The current design encompasses every aspect from capturing the target through optics to the final processor outputting target reports. The system design effort has five main thrusts: (1) scene simulation, (2) a novel, highly sensitive MEMS microbolometer, (3) HARP, a ROIC that uses a unique saliency computation to remove uninteresting image regions, (4) DL detection and tracking algorithms, and (5) neuromorphic computing. The system is estimated to detect targets within 5 s of a fast-moving object entering the sensor’s field of view. Further enhancements are possible, and some improvements that can be realized in future work are described next.

Scene Simulation

More realistic trajectories like those approximated by piecewise polynomial curves or physics-based models could be used here. Furthermore, the movement of several objects in the same scene could be simulated. This would present a significant challenge to tracking algorithms.

MEMS Microbolometer

Simulating and fabricating 10×10 modular pixel array samples with a 20-µm pitch would provide valuable performance characterization information. The geometry of the microbolometer and the fabrication processes can be optimized for performance. For example, thinner, longer legs would yield better sensitivity but must be compliant with manufacturing and operational constraints. Some studies in this area are referenced—Erturk and Akin [21] illustrate absorption as a function of microbolometer thickness, and Dao et al. [9] model resistivity as a function of temperature and fabrication process. Additional fabrication parameters include temperature, curing steps, and deposition speed. Simulation tools like COMSOL can be used to theoretically optimize design characteristics; however, an initial fabrication run is necessary to validate these tools.

Lastly, even though microbolometer technology provides uncooled infrared thermal detection, microbolometer performance is generally limited by low sensitivity, high noise, slow video speed, and lack of spectral content. Ackerman et al. [22] and Xue et al. [23] have demonstrated fast and sensitive MWIR photodiodes based on mercury telluride (HgTe) colloidal quantum dots that can operate at higher temperatures, including room temperature. With sufficient maturing, this work may be used as the sensing device in lieu of microbolometers. Cooling the microbolometer is another option to increase sensitivity.

HARP

Work with FPGAs and simulated ASICs is expected to continue. Furthermore, to better deal with dim moving objects, SLPPs can be tuned to be more sensitive to motion than contrast differences. Because saliency is the weighted sum of several feature vectors, this would make the weight of the former larger than the latter. This will also help disambiguate targets from clutter, such as slower moving airplanes or the sun.
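The weighted-sum view of saliency can be illustrated with a toy example. The feature names, feature values, and weights below are hypothetical and chosen only to show how biasing the weights toward motion separates a dim moving target from bright static clutter.

```python
def saliency(features, weights):
    """Weighted sum of per-region feature scores, e.g. motion and contrast."""
    return sum(weights[name] * value for name, value in features.items())


# Hypothetical normalized feature scores for two image regions.
dim_moving_target = {"motion": 0.9, "contrast": 0.1}
bright_static_clutter = {"motion": 0.0, "contrast": 1.0}

equal_weights = {"motion": 0.5, "contrast": 0.5}
motion_biased_weights = {"motion": 0.8, "contrast": 0.2}

for label, weights in [("equal", equal_weights), ("motion-biased", motion_biased_weights)]:
    target_score = saliency(dim_moving_target, weights)
    clutter_score = saliency(bright_static_clutter, weights)
    print(f"{label}: target={target_score:.2f}, clutter={clutter_score:.2f}")
```

With equal weights the two regions score about the same, whereas the motion-biased weights rank the dim moving target well above the static clutter.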

Detection and Tracking

Single-frame detection techniques are used here. Alternatively, multiple frames can be leveraged to produce more accurate results, although it will also add latency. Another strategy could be to increase the integration time of the cameras so that targets would appear as streaks. These streaks would be larger features to detect and track.
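The streak idea can be quantified with a small-angle approximation: the streak length in pixels is roughly the angle the target sweeps during one integration period divided by the angle subtended by one pixel. The function name and all numerical values below are hypothetical, and the target's own angular extent and any optical blur are ignored.

```python
def streak_length_pixels(angular_rate_rad_s, integration_time_s, ifov_rad):
    """Approximate streak length: angle swept during integration / per-pixel IFOV."""
    return angular_rate_rad_s * integration_time_s / ifov_rad


# Hypothetical target crossing at 0.01 rad/s, viewed with a 100-microradian IFOV.
for integration_time_s in (0.010, 0.050):
    length = streak_length_pixels(0.01, integration_time_s, 100e-6)
    print(f"{integration_time_s * 1e3:.0f} ms integration -> ~{length:.1f} pixel streak")
```

Longer integration lengthens the streak but also spreads the target's energy over more pixels, so the benefit depends on the noise characteristics of the sensor.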

Neuromorphic Computing

Work in the domain continues using BrainChip and Intel products in cybersecurity and other domains. BrainChip and Intel have released the second generation of their chips—seeing what improvements in speed can be gained from these products will be interesting.

End-to-End System Evaluation

Further end-to-end evaluation would be helpful. Examples include calculating the SNR of the microbolometer; tabulating the results from HARP across several different images; and characterizing detection performance by scene type (e.g., day vs. night and cloudy vs. clear). For tracking, track length and uncertainty quantification can be explored. Furthermore, power, speed, and interface elements of hardware components could be further detailed.

Conclusions

By leveraging prior experience and the current work in synthetic data generation, MEMS devices, specialized ROICs, DL, and neuromorphic systems, this work can be extended to advance MWIR imaging and autonomous detection and tracking in infrared imagery. This includes fast-moving pixel and subpixel object detection and tracking. Bioinspired computing can produce tremendous savings in SWaP-C, as was illustrated in Table 1 and described throughout this article.

In the short term, the microbolometer can be tested with pixel array samples, the HARP ROIC can be implemented on an FPGA, and the detection and tracking systems can be implemented on GPUs. In the long term, the microbolometer and ROIC can be bonded together as a flip chip and the GPUs replaced with neuromorphic ASICs. This will yield improvements in power and latency, allowing this system to be deployed on large or small platforms.

References

- Scribner, D., T. Petty, and P. Mui. “Neuromorphic Readout Integrated Circuits and Related Spike-Based Image Processing.” The 2017 IEEE International Symposium on Circuits and Systems (ISCAS), IEEE, pp. 1–4, 2017.

- Chelian, S., and N. Srinivasa. “System, Method, and Computer Program Product for Multispectral Image Processing With Spiking Dynamics.” U.S. Patent 9,111,182, 2015.

- Chelian, S., and N. Srinivasa. “Neuromorphic Image Processing Exhibiting Thalamus-Like Properties.” U.S. Patent 9,412,051, 2016.

- Carpenter, G. A., and S. Chelian. “DISCOV (DImensionless Shunting COlor Vision): A Neural Model for Spatial Data Analysis.” Neural Networks, vol. 37, pp. 93–102, 2013.

- Chelian, S. “Neural Models of Color Vision with Applications to Image Processing and Recognition.” Boston University, https://www.proquest.com/openview/41ac5c06c80fffd0631e94c26245f00d/1, 2006.

- Bhowmik, P., M. Pantho, and C. Bobda. “HARP: Hierarchical Attention Oriented Region-Based Processing for High-Performance Computation in Vision Sensor.” Sensors, vol. 21, p. 1757, 2021.

- Park, K. C., J. Forest, S. Chakraborty, J. T. Daly, S. Chelian, and S. Vasan. “Robust Classification of Contraband Substances Using Longwave Hyperspectral Imaging and Full Precision and Neuromorphic Convolutional Neural Networks.” Procedia Computer Science, vol. 213, pp. 486–495, 2022.

- Dhar, V., and Z. Khan. “Comparison of Modeled Atmosphere-Dependent Range Performance of Long-Wave and Mid-Wave IR Imagers.” Infrared Physics & Technology, vol. 51, pp. 520–527, 2008.

- Dao, T. D., A. T. Doan, S. Ishii, T. Yokoyama, O. H. S. Ørjan, D. H. Ngo, T. Ohki, A. Ohi, Y. Wada, C. Niikura, S. Miyajima, T. Nabatame, and T. Nagao. “MEMS-Based Wavelength-Selective Bolometers.” Micromachines, vol. 10, p. 416, 2019.

- Yoon, Y. K., J. H. Park, and M. G. Allen. “Multidirectional UV Lithography for Complex 3-D MEMS Structures.” J. Microelectromech. Syst., vol. 15, pp. 1121–1130, 2006.

- Bhowmik, P., M. Pantho, M. Asadinia, and C. Bobda. “Design of a Reconfigurable 3D Pixel-Parallel Neuromorphic Architecture for Smart Image Sensor.” The 2018 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 673–681, 2018.

- Bhowmik, P., M. Pantho, and C. Bobda. “Bio-Inspired Smart Vision Sensor: Toward a Reconfigurable Hardware Modeling of the Hierarchical Processing in the Brain.” J. Real-Time Image Process., vol. 18, pp. 1–10, 2021.

- Lichtsteiner, P., C. Posch, and T. Delbrück. “A 128×128 120 dB 15 μs Latency Temporal Contrast Vision Sensor.” IEEE J. Solid-State Circuits, vol. 43, no. 2, pp. 566–576, 2008.

- Redmon, J., S. Divvala, R. Girshick, and A. Farhadi. “You Only Look Once: Unified, Real-Time Object Detection.” The 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 779–788, 2016.

- Ronneberger, O., P. Fischer, and T. Brox. “U-Net: Convolutional Networks for Biomedical Image Segmentation.” The 2015 IEEE 18th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), pp. 234–241, 2015.

- Xu, Y., Z. Wang, Z. Li, Y. Yuan, and G. Yu. “SiamFC++: Towards Robust and Accurate Visual Tracking With Target Estimation Guidelines.” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 7, pp. 12549–12556, 2020.

- Zahm, W., T. Stern, M. Bal, A. Sengupta, A. Jose, S. Chelian, and S. Vasan. “Cyber-Neuro RT: Real-Time Neuromorphic Cybersecurity.” Procedia Computer Science, vol. 213, pp. 536–545, 2022.

- Zahm, W., G. Nishibuchi, S. Chelian, and S. Vasan. “Neuromorphic Low Power Cybersecurity Attack Detection.” The 2023 ACM International Conference on Neuromorphic Systems, 2023.

- Zahm, W., G. Nishibuchi, A. Jose, S. Chelian, and S. Vasan. “Low Power Cybersecurity Attack Detection Using Deep Learning on Neuromorphic Technologies.” Journal of the Defense Systems Information Analysis Center, to be published, 2024.

- Patel, K., E. Hunsberger, S. Batir, and C. Eliasmith. “A Spiking Neural Network for Image Segmentation.” arXiv preprint arXiv:2106.08921, 2021.

- Erturk, O., and T. Akin. “Design and Implementation of High Fill-Factor Structures for Low-Cost CMOS Microbolometers.” The 2018 IEEE Micro Electro Mechanical Systems (MEMS), pp. 692–695, 2018.

- Ackerman, M., X. Tang, and P. Guyot-Sionnest. “Fast and Sensitive Colloidal Quantum Dot Mid-Wave IR Photodetectors.” ACS Nano, vol. 12, pp. 7264–7271, 2018.

- Xue, X., M. Chen, Y. Luo, T. Qin, X. Tang, and Q. Hao. “High-Operating-Temperature Mid-Infrared Photodetectors via Quantum Dot Gradient Homojunction.” Light Sci. Appl., vol. 12, 2023.

Biographies

Christophe Bobda is a professor with the Department of Electrical and Computer Engineering at the University of Florida (UF). Previously, he joined the Department of Computer Science at the University of Erlangen-Nuremberg in Germany as a postdoc and was later appointed assistant professor at the University of Kaiserslautern. He was also a professor at the University of Potsdam and leader of The Working Group Computer Engineering. He received the Best Dissertation Award 2003 from the University of Paderborn for his work on synthesis of reconfigurable systems using temporal partitioning and temporal placement. Dr. Bobda holds a licence in mathematics from the University of Yaounde, Cameroon, and a diploma and Ph.D. in computer science from the University of Paderborn in Germany.

Yong-Kyu Yoon is a professor and a graduate coordinator with the Department of Electrical and Computer Engineering at UF, where his current research interests include microelectromechanical systems, nanofabrication, and energy storage devices; metamaterials for radio frequency (RF)/microwave applications; micromachined millimeter wave/terahertz antennas and waveguides; wireless power transfer and telemetry systems; lab-on-a-chip devices; and ferroelectric materials for memory and tunable RF devices. He was a postdoctoral researcher with the Georgia Institute of Technology, an assistant professor with the Department of Electrical Engineering at The State University of New York, Buffalo, and an associate professor at UF. He has authored over 200 peer-reviewed publications and was a recipient of the National Science Foundation Early Career Development Award and the SUNY Young Investigator Award. Dr. Yoon holds a Ph.D. in electrical and computer engineering from the Georgia Institute of Technology.

Sudeepto Chakraborty has over 20 years of diverse experience in science and technology, including CNNs, image processing, computational electromagnetics, and meta-optics. He has worked on a wide variety of projects at Hewlett-Packard, Broadcom, Stanford, and Fujitsu labs. Dr. Chakraborty holds a Ph.D. in electrical engineering from Stanford University.

Suhas Chelian is a researcher and machine-learning (ML) engineer. He has captured and executed projects with several organizations like Fujitsu Labs of America, Toyota (Partner Robotics Group), Hughes Research Lab, DARPA, the Intelligence Advanced Research Projects Agency, and NASA. He has over 31 publications and 32 patents demonstrating his expertise in ML, computer vision, and neuroscience. Dr. Chelian holds dual bachelor’s degrees in computer science and cognitive science from the University of California San Diego and a Ph.D. in computational neuroscience from Boston University.

Srini Vasan is the president and CEO of Quantum Ventura Inc. and CTO of QuantumX, the research and development arm of Quantum Ventura Inc. He specializes in artificial intelligence (AI)/ML, AI verification validation, ML quality assurance and rigorous testing, ML performance measurement, and system software engineering and system internals. Mr. Vasan studied management at the MIT Sloan School of Management.